Materials scientists aim to develop autonomous materials that function beyond stimulus-responsive actuation. In a new report in Science Advances, Yang Yang and a research team in the Center for Bioinspired Energy Science at Northwestern University, U.S., developed photo- and electro-activated hydrogels that capture and deliver cargo and avoid obstacles on their return.

To accomplish this, they incorporated two spiropyran monomers (photoswitchable molecules) into the hydrogel to enable photoregulated charge reversal and autonomous behavior under a constant electric field. The resulting photo- and electro-active materials could perform tasks autonomously under constant external stimuli, a step toward intelligent materials designed at the molecular scale.

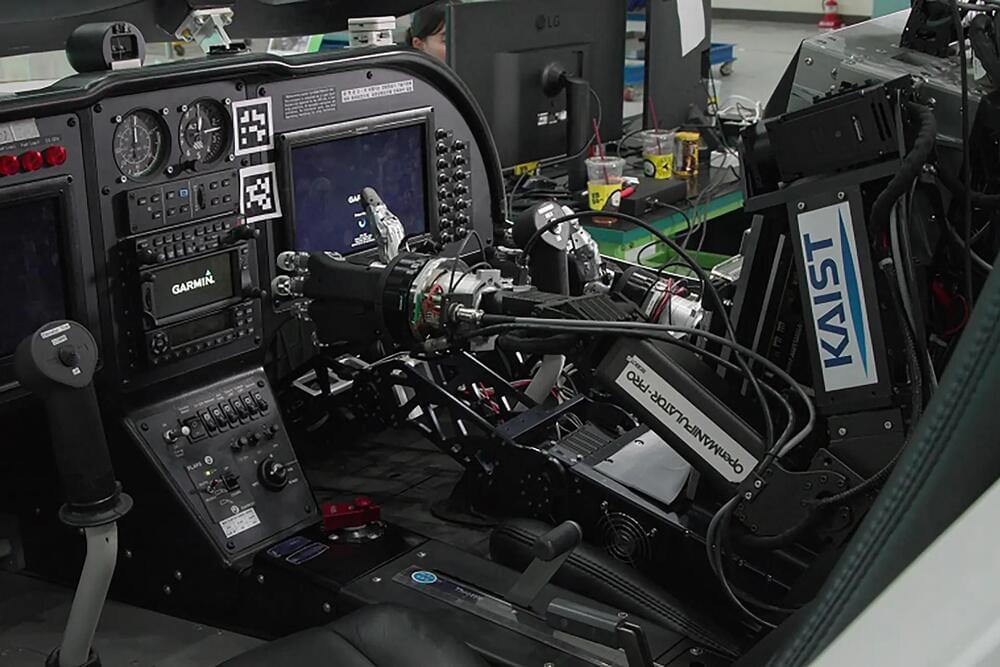

Soft materials with life-like functionality have promising applications as intelligent robotic materials in complex, dynamic environments, with significance for human-machine interfaces and biomedical devices. Yang and colleagues designed a photo- and electro-activated hydrogel that captures and delivers cargo, avoids obstacles, and returns to its point of departure under the constant stimuli of visible light and an applied electric field. These constant conditions supplied the energy that guided the hydrogel.