The experimental interface allows the patient to communicate through a digital avatar, and it’s faster than her current system.

Category: robotics/AI

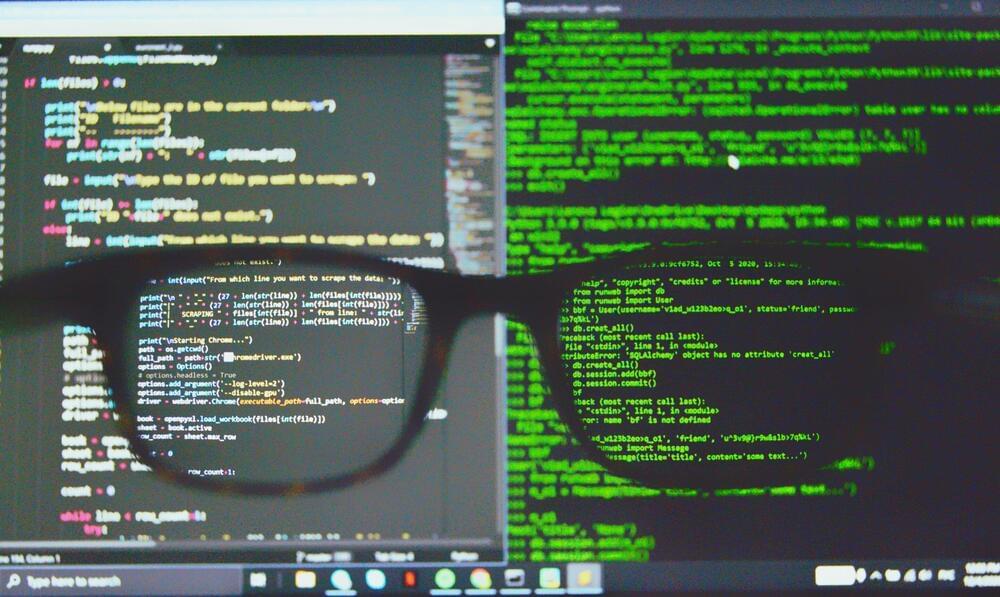

Computer scientists develop open-source tool for dramatically speeding up the programming language Python

A team of computer scientists at the University of Massachusetts Amherst, led by Emery Berger, recently unveiled a prize-winning Python profiler called Scalene. Programs written in Python are notoriously slow, in some cases up to 60,000 times slower than equivalent code written in other programming languages, and Scalene efficiently pinpoints where a Python program is lagging, allowing programmers to troubleshoot and streamline their code for higher performance.
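A profiler's core job is to measure where a program spends its time, so the programmer knows which code to optimize first. The sketch below does not use Scalene. As a stand-in, it uses Python's built-in `cProfile` and `pstats` modules to show the same workflow on a hypothetical workload; `slow_sum_of_squares`, `fast_sum_of_squares`, `workload`, and `top_hotspot` are illustrative names, not part of any library. It assumes Python 3.9 or later, which is when `pstats.Stats.get_stats_profile()` was added.

```python
import cProfile
import pstats
from typing import Callable


def slow_sum_of_squares(n: int) -> int:
    # Hypothetical hotspot: a pure-Python loop, every iteration run by the interpreter.
    total = 0
    for i in range(n):
        total += i * i
    return total


def fast_sum_of_squares(n: int) -> int:
    # Same result via the closed form for 0^2 + 1^2 + ... + (n-1)^2; constant time.
    return (n - 1) * n * (2 * n - 1) // 6


def workload() -> int:
    # Hypothetical program under test: one slow step and one fast step.
    return slow_sum_of_squares(300_000) + fast_sum_of_squares(300_000)


def top_hotspot(func: Callable[[], object]) -> str:
    """Profile func with cProfile and return the name of the function
    with the largest self time (tottime), i.e. the best optimization target."""
    profiler = cProfile.Profile()
    profiler.runcall(func)
    # get_stats_profile() (Python 3.9+) maps function names to FunctionProfile records.
    profile = pstats.Stats(profiler).get_stats_profile()
    name, _ = max(profile.func_profiles.items(), key=lambda kv: kv[1].tottime)
    return name


if __name__ == "__main__":
    print(top_hotspot(workload))
```

Running the script should report `slow_sum_of_squares` as the hotspot, pointing the programmer at the loop that the closed-form version replaces. `cProfile` works at function granularity; Scalene, which is run from the command line as `scalene your_program.py`, goes further by reporting per-line costs and separating time spent in Python from time spent in native code.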

There are many programming languages (C++, Fortran, and Java are some of the better-known ones), but in recent years one language has become nearly ubiquitous: Python.

“Python is a ‘batteries-included’ language,” says Berger, who is a professor of computer science in the Manning College of Information and Computer Sciences at UMass Amherst, “and it has become very popular in the age of data science and machine learning because it is so user-friendly.” The language comes with libraries of easy-to-use tools and has an intuitive and readable syntax, allowing users to quickly begin writing Python code.

Purdue thermal imaging innovation allows AI to see through pitch darkness like broad daylight

WEST LAFAYETTE, Ind. – Researchers at Purdue University are advancing the world of robotics and autonomy with their patent-pending method that improves on traditional machine vision and perception.

Zubin Jacob, the Elmore Associate Professor of Electrical and Computer Engineering in the Elmore Family School of Electrical and Computer Engineering, and research scientist Fanglin Bao have developed HADAR, or heat-assisted detection and ranging. Their research was featured on the cover of the July 26 issue of the peer-reviewed journal Nature. A video about HADAR is available on YouTube. Nature also has released a podcast episode that includes an interview with Jacob.

Jacob said that by 2030, one in 10 vehicles is expected to be automated and 20 million robot helpers are expected to be serving people.

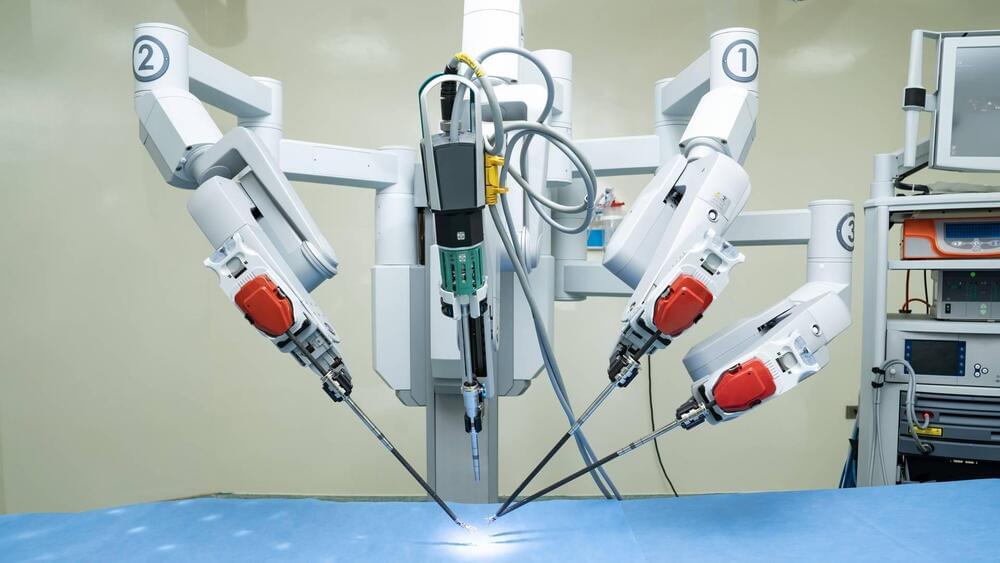

No, they did not do surgery on a banana over 5G

If your mother says she loves you, check it.

A couple weeks ago, I asked Vergecast.

What I was not expecting was for so many people to send me versions of a video that shows a banana getting stitches in a robotic surgery device, with the captions claiming that the surgery is being done remotely over 5G. This video has had an …

Meet Dr. Kais Rona, who is as befuddled by the lie appended to his video as anyone else.

Google’s AI-powered note-taking app is the messy beginning of something great

NotebookLM is a neat research tool with some big ideas. It’s still rough and new, but it feels like Google is onto something.

What if you could have a conversation with your notes? That question has consumed a corner of the internet recently.

Google’s version of this is called NotebookLM. It’s an AI-powered research tool that is meant to help you organize and interact with your own notes. (Google originally announced it earlier this year as Project Tailwind but quickly changed the name.) Right now, it’s really just a…

NotebookLM gives you a chatbot for your personal docs, and it’s already pretty helpful.

How one robot saved a patient from an inoperable tumor

The da Vinci robot was able to undertake an operation no doctor would.

Robots are increasingly showing up in operating rooms, and they are saving lives. As one patient in Canada reports in a CBC

Glenn Deir recounts the story of how his inoperable tumor nearly cost him his life and thanks the robot that saved him.

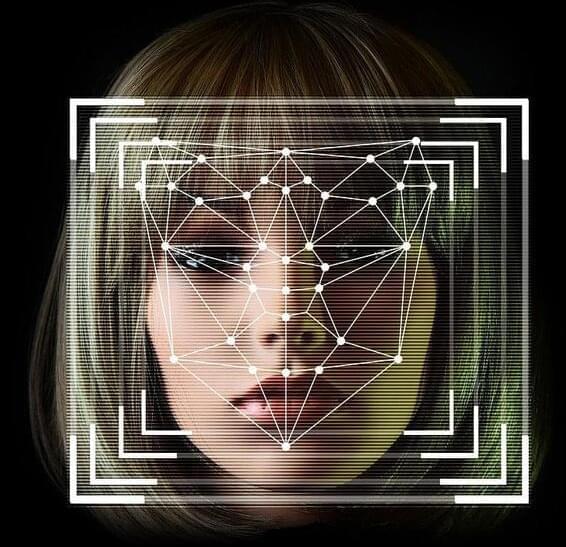

Hacking Biometric Facial Recognition


Are biometric authentication measures no longer safe? A biometric authentication expert says deepfake videos and camera injection attacks are changing the game.

Biometric authentication is growing in popularity because it is fast, easy, and seamless for the user, but Stuart Wells, CTO of the biometric authentication company Jumio, thinks relying on it may be risky.

What’s Next For AI In Healthcare In 2023? — The Medical Futurist

AI is the undoubted buzzword of the year, so let’s take a look at what we can expect from AI in healthcare in the coming period.

GPT-4 — How does it work, and how do I build apps with it? — CS50 Tech Talk

First, you’ll learn how GPT-4 works and why human language turns out to play such a critical role in computing. Next, you’ll see how AI-native software is be…