The dead internet theory and the rise of bots in an AI-dominated world.

Category: robotics/AI – Page 1,103

How AI Could Empower Any Business | Andrew Ng | TED

Expensive to build and often needing highly skilled engineers to maintain, artificial intelligence systems generally only pay off for large tech companies with vast amounts of data. But what if your local pizza shop could use AI to predict which flavor would sell best each day of the week? Andrew Ng shares a vision for democratizing access to AI, empowering any business to make decisions that will increase their profit and productivity. Learn how we could build a richer society – all with just a few self-provided data points.

Watch more: https://go.ted.com/andrewng.

Meta’s new AI model can generate and explain code for you

As the name suggests, Code Llama is based on Meta’s Llama 2 large language model (LLM), which can understand and generate natural language across various domains. Code Llama has been specialized for coding tasks and supports many popular programming languages, Meta says in its press release.

Household Robots for Washing Dishes in the Kitchen

The video from ErgoSurg GmbH by SH shows a handy little robotic arm. This robot, intended for caregivers in hospitals and retirement homes and for the elderly at home, attaches to surfaces by suction, can run for hours on a battery, and weighs less than 3 kg. Caregivers, adults and children can record and play back movements within seconds or minutes. There is a USB interface for programmers. If you are interested, please contact us in Germany, Munich, +49 89 322 94 62.

Qualcomm’s ‘Holy Grail’: Generative AI Is Coming to Phones Soon

The company wants its next-gen Snapdragon chips to use AI for more than just improving camera shots.

Tech gets religion on AI: Inside the Vatican summit with Islamic and Jewish leaders, Microsoft and IBM

Artificial intelligence has such potential for good: not only could it bring humans closer to becoming godlike, it could also manifest God through artificial intelligence. That is why the ethics of the world depend on the success of AI for good. It could create peace across the world and a brighter tomorrow.

It’s unusual for tech executives and religious leaders to get together to discuss their shared interests and goals for the future of humanity and the planet. It’s even more extraordinary for the world’s three largest monotheistic religions to be represented.

When the Pope joins the meeting, it’s basically unprecedented.

That’s what happened at Vatican City this week as the Catholic Church hosted leaders of the Jewish and Islamic faiths, new signatories to the Rome Call for AI Ethics, in a meeting that included executives from Microsoft and IBM.

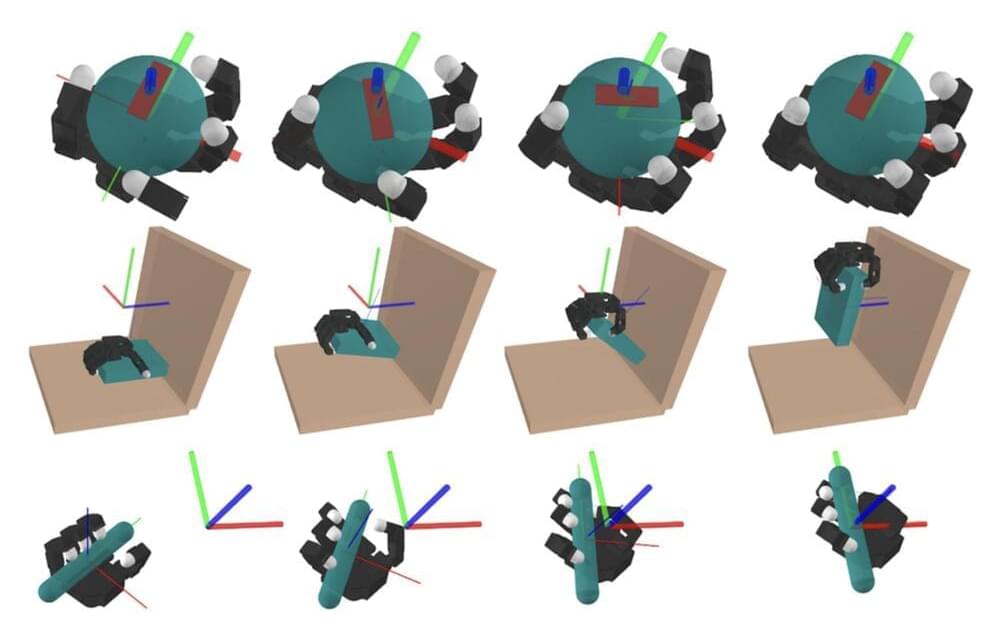

MIT Researchers Developed an Artificial Intelligence (AI) Technique that Enables a Robot to Develop Complex Plans for Manipulating an Object Using its Entire Hand

Whole-body manipulation is a strength of humans but a weakness of robots. For a robot carrying a box, each possible contact point between the box and the carrier’s fingers, arms, or torso is a separate contact event that it must reason about. Planning quickly becomes intractable once one considers the billions of possible contact events. MIT researchers have now found a way to streamline this process, known as contact-rich manipulation planning. They use an artificial intelligence technique called smoothing to reduce the number of decisions needed to find a good manipulation plan for the robot among the vast number of contact events.

Recent developments in reinforcement learning (RL) have demonstrated impressive results in manipulation through contact-rich dynamics, something that was previously challenging to achieve using model-based techniques. Although these RL techniques were effective, it is not yet known why they succeeded where model-based approaches failed. The overarching objective is to understand these factors from a model-based vantage point. Building on that understanding, the researchers aim to merge RL’s empirical success with the generalizability and efficacy of models.

The hybrid nature of contact dynamics presents the greatest challenge to planning through contact from a model-based perspective. Because the resulting dynamics are non-smooth, a local Taylor approximation is no longer valid, and a linear model built from the gradient quickly breaks down. Since both iterative gradient-based optimization and sampling-based planning rely on local distance metrics, the invalidity of the local model poses serious difficulties for both. In response, numerous publications have attempted to take contact modes into account explicitly, either by enumerating them or by sampling them. These planners, which have a model-based understanding of the dynamic modes, often alternate between continuous-state planning in the current contact mode and a discrete search for the next mode, leading to trajectories with only a few mode changes.
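To make the breakdown of the local linear model concrete, here is a minimal Python sketch of a one-dimensional pushing toy. It is an illustration under stated assumptions, not the MIT researchers' planner: the scenario, the constant `BOX_START`, and all function names are hypothetical. A pusher moves to a target position, and the box moves only if the pusher reaches it. Before contact the exact gradient is zero, so a gradient-based planner sees no way to move the box. Averaging the dynamics over Gaussian perturbations (one common form of smoothing) yields a nonzero gradient that points toward making contact.

```python
import math
import random

BOX_START = 0.5  # hypothetical initial box position, in metres


def box_position(pusher_target: float) -> float:
    """Final box position: the box moves only if the pusher reaches it."""
    return max(BOX_START, pusher_target)


def exact_gradient(pusher_target: float) -> float:
    """Derivative of box_position: 0 before contact, 1 after contact."""
    return 1.0 if pusher_target > BOX_START else 0.0


def smoothed_gradient(pusher_target: float, sigma: float) -> float:
    """Closed-form gradient of E[box_position(u + w)], w ~ N(0, sigma^2).

    For this toy model the result is the Gaussian CDF evaluated at the
    signed distance to contact, measured in units of sigma.
    """
    z = (pusher_target - BOX_START) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def sampled_smoothed_gradient(pusher_target: float, sigma: float,
                              n_samples: int = 20000, seed: int = 0) -> float:
    """Monte Carlo estimate: average the exact gradient at perturbed inputs."""
    rng = random.Random(seed)
    total = sum(exact_gradient(pusher_target + rng.gauss(0.0, sigma))
                for _ in range(n_samples))
    return total / n_samples


if __name__ == "__main__":
    u, sigma = 0.4, 0.1  # pusher stops 0.1 m short of the box
    print("exact gradient:   ", exact_gradient(u))
    print("smoothed gradient:", smoothed_gradient(u, sigma))
    print("sampled estimate: ", sampled_smoothed_gradient(u, sigma))
```

With the pusher one standard deviation short of the box, the exact gradient is 0 while the smoothed gradient is roughly 0.16, which tells an optimizer that moving the pusher forward will eventually move the box. The sampled version matters in practice because closed forms like the one above are rarely available for realistic contact models.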

How to minimize data risk for generative AI and LLMs in the enterprise

Enterprises have quickly recognized the power of generative AI to uncover new ideas and increase both developer and non-developer productivity. But pushing sensitive and proprietary data into publicly hosted large language models (LLMs) creates significant risks in security, privacy and governance. Businesses need to address these risks before they can start to see any benefit from these powerful new technologies.

As IDC notes, enterprises have legitimate concerns that LLMs may “learn” from their prompts and disclose proprietary information to other businesses that enter similar prompts. Businesses also worry that any sensitive data they share could be stored online and exposed to hackers or accidentally made public.