This video is about how ChatGPT and AI can disrupt healthcare.

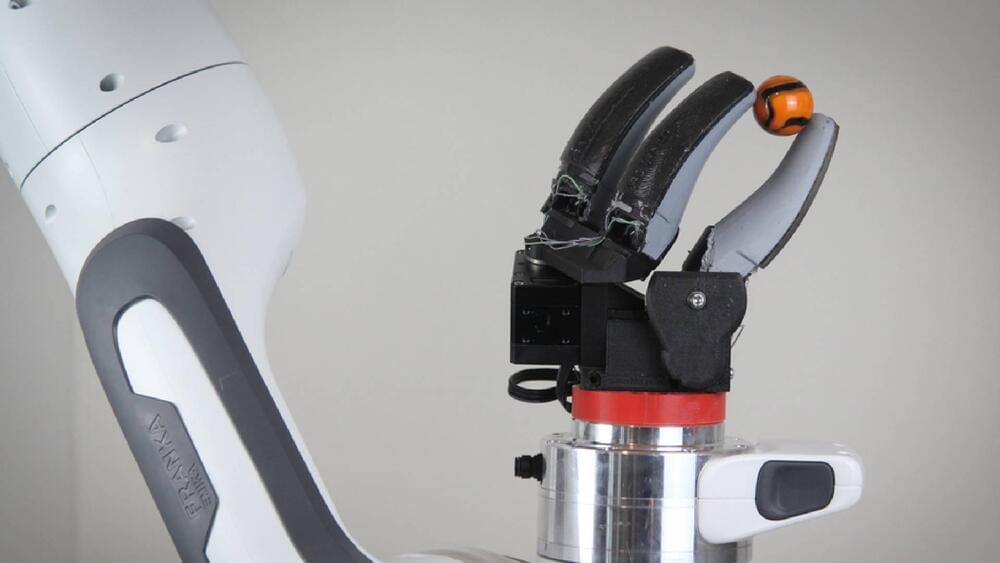

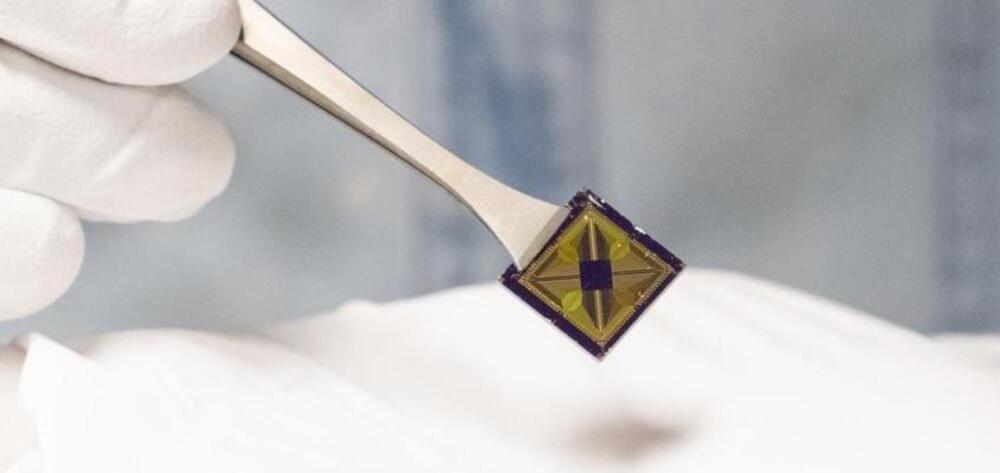

ChatGPT is an AI-powered chat platform developed by OpenAI. It lets users ask questions in a conversational format and build on earlier messages, so answers can be refined over the course of a conversation. Microsoft has invested billions of dollars in OpenAI and has integrated the technology into its Bing search engine and Edge web browser. Although the rise of AI has raised concerns about job security, ChatGPT still depends on human input to pose questions and diagnose patients. That makes it a tool that augments human abilities in healthcare rather than one that replaces them. The technology can be used in diagnosis, research, medical education, and radiograph interpretation. It can help healthcare professionals diagnose and research diseases, visualize anatomy and procedures, and analyze medical images.

#chatgpt #ai #healthcare

Try ChatGPT here:

https://chat.openai.com/chat

Try DALL·E 2 here:

https://labs.openai.com/

Try the NEW Bing here: