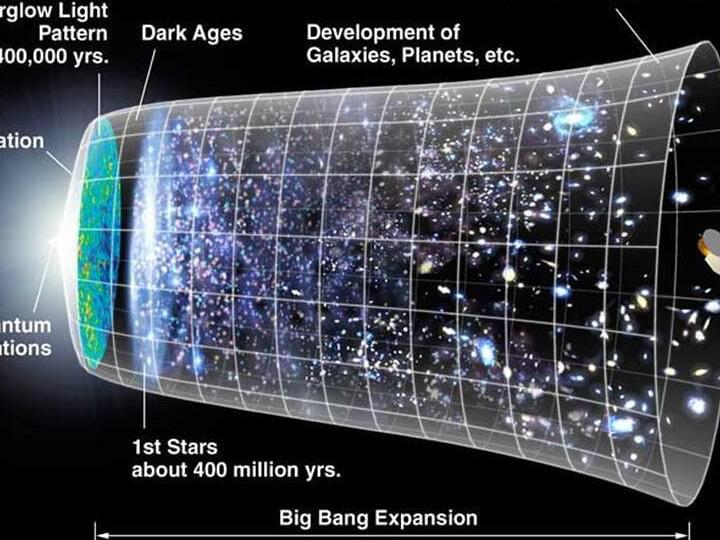

From the vast expanse of galaxies that paint our night skies to the intricate neural networks within our brains, everything we know and see can trace its origins back to a singular moment: the Big Bang. It’s a concept that has not only reshaped our understanding of the universe but also offers profound insights into the interconnectedness of all existence.

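Imagine, if you will, the entire observable universe compressed into an extraordinarily hot, dense state. This is not the realm of science fiction but the best account physics offers of our cosmic beginnings. Around 13.8 billion years ago, the universe began expanding from that state. This was not an explosion into pre-existing space but the stretching of space itself, and it marks the earliest point from which we can trace time, space, matter, and energy as we know them. In that magnificent burst of creation, the seeds for everything (galaxies, stars, planets, and even us) were sown.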

But what if the Big Bang was not just a physical event? What if it also marked the birth of a universal consciousness? A consciousness that binds every particle, every star, and every living being in a cosmic tapestry of shared experience and memory.