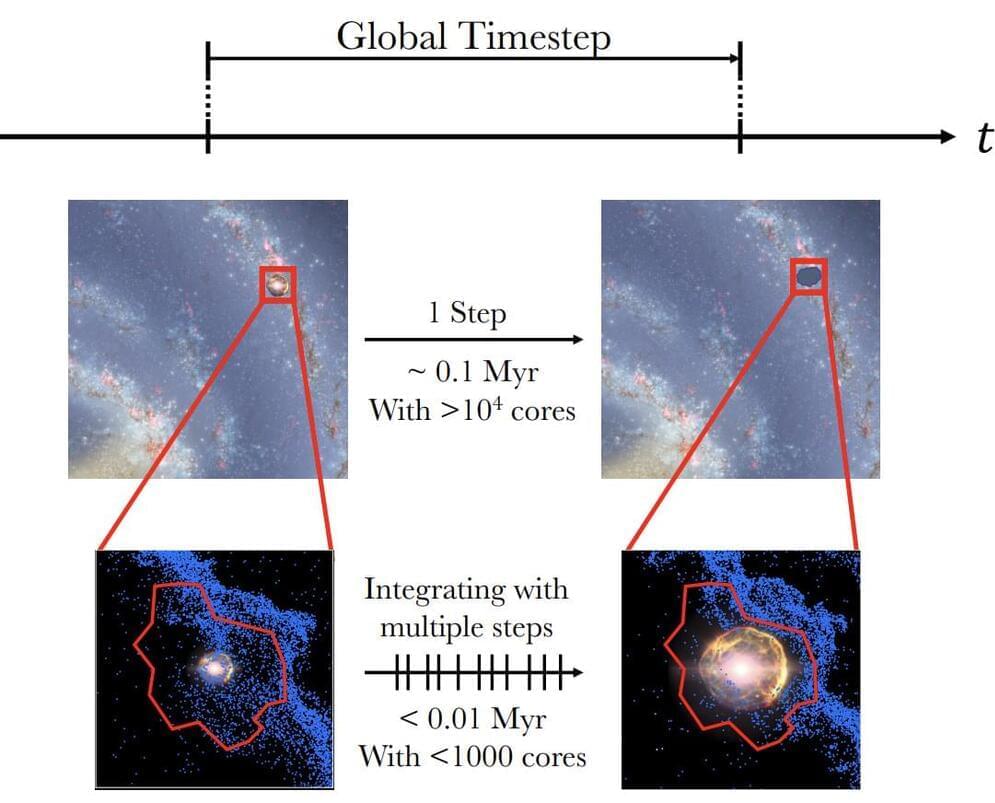

A new way to simulate supernovae may help shed light on our cosmic origins. Supernovae, exploding stars, play a critical role in the formation and evolution of galaxies. However, key aspects of them are notoriously difficult to simulate accurately in a reasonable amount of time. For the first time, a team of researchers, including some from the University of Tokyo, has applied deep learning to the problem of supernova simulation. Their approach can speed up the simulation of supernovae, and therefore of galaxy formation and evolution as well. These simulations include the evolution of the chemistry that led to life.

When you hear about deep learning, you might think of the latest app that sprang up this week to do something clever with images or generate humanlike text. Deep learning might be responsible for some behind-the-scenes aspects of such things, but it is also used extensively across many fields of research. Recently, a team at a tech event called a hackathon applied deep learning to weather forecasting. The approach proved quite effective, and this got doctoral student Keiya Hirashima from the University of Tokyo's Department of Astronomy thinking.

“Weather is a very complex phenomenon but ultimately it boils down to fluid dynamics calculations,” said Hirashima. “So, I wondered if we could modify deep learning models used for weather forecasting and apply them to another fluid system, but one that exists on a vastly larger scale and which we lack direct access to: my field of research, supernova explosions.”