Explore how artificial intelligence (AI) is revolutionizing industries, unleashing transformative potential, and supercharging efficiency.


Technology For Technology’s Sake Is The Downfall Of The CIO

One notable use case for AI is enhanced transaction monitoring to help combat financial fraud. Traditional rule-based approaches to anti-money laundering (AML) rely on static thresholds that capture only one element of a transaction, so they produce a high rate of false positives. This is not only hugely inefficient but can also be very demotivating for staff. With AI, multiple factors can be reviewed simultaneously to produce a risk score and build an understanding of what risky behavior looks like. A feedback loop based on advanced analytics means that the more data is collected, the more accurate the solution becomes. Pinpointing financial crime becomes more efficient, and employees gain time to focus on other important areas such as strategy and business development.
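The contrast between a single static threshold and a multi-factor risk score can be sketched in a few lines of Python. This is only an illustration, not a description of any vendor's system: the `Transaction` fields, the weights, the bias, and the 0.5 cut-off are all hypothetical values chosen by hand, whereas a real system would learn them from labeled cases.

```python
import math
from dataclasses import dataclass


@dataclass
class Transaction:
    amount: float             # transaction value (hypothetical currency units)
    new_counterparty: bool    # first payment to this recipient
    high_risk_country: bool   # destination on a hypothetical watch list
    txns_last_24h: int        # recent activity on the account


def rule_based_flag(tx: Transaction, threshold: float = 10_000.0) -> bool:
    """Static rule: looks at one element of the transaction only."""
    return tx.amount >= threshold


def risk_score(tx: Transaction) -> float:
    """Combine several factors into one score between 0 and 1.

    The weights and bias are hand-picked assumptions for illustration;
    in practice they would be fitted to historical, labeled data.
    """
    z = (
        -4.0
        + 1.5 * min(tx.amount / 10_000.0, 3.0)
        + 1.2 * tx.new_counterparty
        + 1.8 * tx.high_risk_country
        + 0.3 * min(tx.txns_last_24h, 10)
    )
    return 1.0 / (1.0 + math.exp(-z))  # logistic squashing


# A large but routine payment, and a smaller payment with several risk signals.
routine = Transaction(12_000.0, False, False, 1)
suspicious = Transaction(9_500.0, True, True, 8)

if __name__ == "__main__":
    for name, tx in [("routine", routine), ("suspicious", suspicious)]:
        print(name, rule_based_flag(tx), round(risk_score(tx), 2))
```

In this toy setup the static rule flags the routine payment (a false positive) and misses the suspicious one, while the combined score ranks them the other way round. The feedback loop described above would correspond to refitting the weights as analysts confirm or dismiss alerts.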

Thanks to AI’s ever-increasing applications to evolving business challenges, regulators and financial institutions can no longer turn a blind eye to its potential to revolutionize the financial system. It presents unique opportunities to reduce human error, which costs highly regulated industries billions each year.

What’s clear is that some technologies will, over time, become too difficult to ignore. As we saw with the adoption of the cloud, organizations that fail to embrace innovative technologies get left behind. The cloud was once a pipe dream, but it is now a crucial part of business operations. Businesses have implemented (or are in the process of implementing) huge digital transformation projects to migrate business processes to the cloud, and new organizations will start their businesses there from day one. The lesson is that technologists must remain alert and keep their finger on the pulse when it comes to incorporating fresh solutions.

It’s Alive? This Billionaire Funds Startup Growing Brain Cell ‘Biocomputers’

Billionaire investor Li Ka-Shing is funding a new technology that could rival artificial intelligence (AI) by combining brain cells with computers, a system its developer calls DishBrain.


This science fiction-sounding tech comes from Australian biotech firm Cortical Labs. The company recently raised $10 million in a round led by Horizons Ventures, the investment vehicle of 94-year-old Li Ka-Shing, the richest person in Hong Kong. Additional investors included Blackbird Ventures, an Australian venture capital (VC) fund; In-Q-Tel, the investment arm of the Central Intelligence Agency; U.S. firm LifeX Ventures; and others.

Apple Researchers Introduce ByteFormer: An AI Model That Consumes Only Bytes And Does Not Explicitly Model The Input Modality

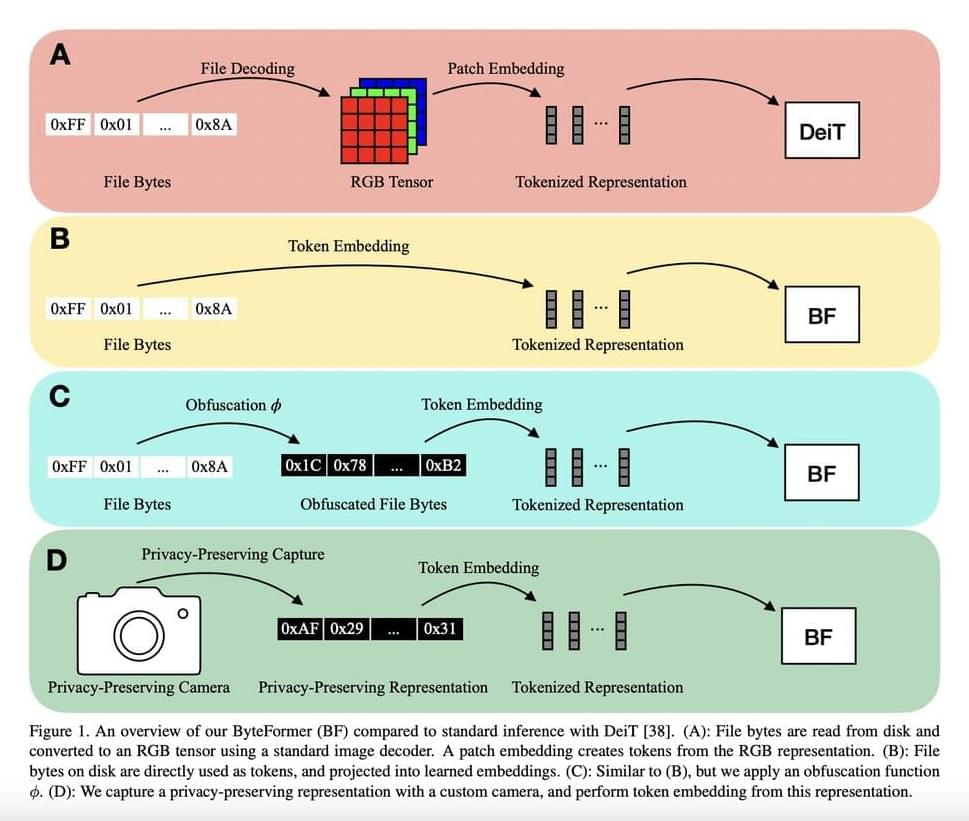

Deep learning inference typically requires explicit modeling of the input modality. For instance, Vision Transformers (ViTs) directly model the 2D spatial organization of images by encoding image patches into vectors. Similarly, audio inference frequently involves computing spectral features (such as MFCCs) to feed into a network. Before running inference on a file saved on disk (such as a JPEG image file or an MP3 audio file), a user must first decode it into a modality-specific representation (such as an RGB tensor or MFCCs), as shown in Figure 1a. Decoding inputs into a modality-specific representation has two real downsides.

First, it requires hand-crafting an input representation and a model stem for each input modality. Recent projects such as PerceiverIO and UnifiedIO have demonstrated the versatility of Transformer backbones, but these techniques still need modality-specific input preprocessing. For instance, PerceiverIO decodes image files into tensors before feeding them into the network, and it transforms other input modalities into other forms. The researchers hypothesize that running inference directly on file bytes makes it possible to eliminate all modality-specific input preprocessing. The second downside of decoding inputs into a modality-specific representation is that it exposes the content being analyzed.

Consider a smart home device that runs inference on RGB images. The user’s privacy may be jeopardized if an adversary gains access to this model input. The researchers contend that inference can instead be carried out on privacy-preserving inputs. To address both shortcomings, they note that many input modalities share the ability to be saved as file bytes. As a result, they feed file bytes into their model at inference time (Figure 1b) without doing any decoding. They adopt a modified Transformer architecture for their model, given Transformers’ ability to handle a range of modalities and variable-length inputs.
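The input side of this idea can be illustrated with a short Python sketch using only the standard library. It does not reproduce the ByteFormer model; it only shows that an image file and an audio file can pass through the same preprocessing once each byte is treated as an integer token in the range 0 to 255. The helper names (`file_bytes_to_tokens`, `make_ppm_bytes`, `make_wav_bytes`) are hypothetical, and uncompressed PPM and WAV files stand in for the JPEG and MP3 examples so that the files can be built in memory.

```python
import io
import wave


def file_bytes_to_tokens(data: bytes) -> list[int]:
    """Treat every byte of the file as one token (values 0..255).

    No image or audio decoding happens here; the same function serves
    every modality, and the sequence length simply follows the file size.
    """
    return list(data)


def make_ppm_bytes() -> bytes:
    """Build a tiny 2x2 RGB image in binary PPM format, in memory."""
    header = b"P6\n2 2\n255\n"
    pixels = bytes([255, 0, 0, 0, 255, 0, 0, 0, 255, 255, 255, 255])
    return header + pixels


def make_wav_bytes() -> bytes:
    """Build a tiny mono 16-bit WAV file (8 silent samples), in memory."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(8000)
        w.writeframes(b"\x00\x00" * 8)
    return buf.getvalue()


image_tokens = file_bytes_to_tokens(make_ppm_bytes())
audio_tokens = file_bytes_to_tokens(make_wav_bytes())

if __name__ == "__main__":
    # Both modalities become variable-length sequences over one vocabulary.
    print(len(image_tokens), image_tokens[:8])
    print(len(audio_tokens), audio_tokens[:8])
```

A model consuming these sequences would need an embedding table with only 256 entries and some way to cope with long, variable-length inputs; those parts are outside the scope of this sketch.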

More Than Half of Americans Think AI Poses a Threat to Humanity

One nebulous aspect of the poll, and of many of the headlines about AI we see on a daily basis, is how the technology is defined. What are we referring to when we say “AI”? The term encompasses everything from recommendation algorithms that serve up content on YouTube and Netflix, to large language models like ChatGPT, to models that can design incredibly complex protein architectures, to the Siri assistant built into many iPhones.

IBM’s definition is simple: “a field which combines computer science and robust datasets to enable problem-solving.” Google, meanwhile, defines it as “a set of technologies that enable computers to perform a variety of advanced functions, including the ability to see, understand and translate spoken and written language, analyze data, make recommendations, and more.”

It could be that people’s fear and distrust of AI come partly from a lack of understanding of it, and from a stronger focus on unsettling examples than positive ones. The AI that can design complex proteins may help scientists discover stronger vaccines and other drugs, and could do so on a vastly accelerated timeline.

Hundreds of Protestants attended a sermon in Nuremberg given

The artificial intelligence chatbot asked the believers in the fully packed St. Paul’s church in the Bavarian town of Fuerth to rise from the pews and praise the Lord.

The ChatGPT chatbot, personified by an avatar of a bearded Black man on a huge screen above the altar, then began preaching to the more than 300 people who had shown up on Friday morning for an experimental Lutheran church service almost entirely generated by AI.

“Dear friends, it is an honor for me to stand here and preach to you as the first artificial intelligence at this year’s convention of Protestants in Germany,” the avatar said with an expressionless face and monotonous voice.

Ben Goertzel — Approaches Towards a General Theory of General AI

The General Theory of General Intelligence: A Pragmatic Patternist Perspective — paper by Ben Goertzel: https://arxiv.org/abs/2103.15100

Abstract: “A multi-decade exploration into the theoretical foundations of artificial and natural general intelligence, which has been expressed in a series of books and papers and used to guide a series of practical and research-prototype software systems, is reviewed at a moderate level of detail. The review covers underlying philosophies (patternist philosophy of mind, foundational phenomenological and logical ontology), formalizations of the concept of intelligence, and a proposed high level architecture for AGI systems partly driven by these formalizations and philosophies. The implementation of specific cognitive processes such as logical reasoning, program learning, clustering and attention allocation in the context and language of this high level architecture is considered, as is the importance of a common (e.g. typed metagraph based) knowledge representation for enabling ‘cognitive synergy’ between the various processes. The specifics of human-like cognitive architecture are presented as manifestations of these general principles, and key aspects of machine consciousness and machine ethics are also treated in this context. Lessons for practical implementation of advanced AGI in frameworks such as OpenCog Hyperon are briefly considered.”

Talk held at AGI17 — http://agi-conference.org/2017/#AGI17 #AGI #ArtificialIntelligence #Understanding #MachineUnderstanding #CommonSence #ArtificialGeneralIntelligence #PhilMind https://en.wikipedia.org/wiki/Artificial_general_intelligence

Many thanks for tuning in!

Have any ideas about people to interview? Want to be notified about future events? Any comments about the STF series?

Please fill out this form: https://docs.google.com/forms/d/1mr9PIfq2ZYlQsXRIn5BcLH2onbiSI7g79mOH_AFCdIk/

Consider supporting SciFuture by:

a) Subscribing to the SciFuture YouTube channel: http://youtube.com/subscription_center?add_user=TheRationalFuture

b) Donating:

- Bitcoin: 1BxusYmpynJsH4i8681aBuw9ZTxbKoUi22

- Ethereum: 0xd46a6e88c4fe179d04464caf42626d0c9cab1c6b

- Patreon: https://www.patreon.com/scifuture

c) Sharing the media SciFuture creates.

Kind regards.

Adam Ford.

- Science, Technology & the Future — #SciFuture — http://scifuture.org

From Thought to Text: AI Converts Silent Speech into Written Words

Summary: A novel artificial intelligence system, the semantic decoder, can translate brain activity into continuous text. The system could revolutionize communication for people unable to speak due to conditions like stroke.

This non-invasive approach uses fMRI scanner data, turning thoughts into text without requiring any surgical implants. While not perfect, this AI system successfully captures the essence of a person’s thoughts half of the time.