Superradiant phase transition seen

Until now, the phenomenon was debated as it defies the “no-go theorem” in light-based quantum systems.

Subscribe for exclusive content at https://lawrencekrauss.substack.com/

Learn more and support the foundation at https://originsproject.org/

Connect with Sabine:

https://www.youtube.com/channel/UC1yNl2E66ZzKApQdRuTQ4tw

https://www.instagram.com/sciencewtg/

Connect with Lawrence:

https://www.youtube.com/@lkrauss1

https://www.instagram.com/lkrauss1/

A note from Lawrence:

I’m excited to announce the third episode of our new series, What’s New in Science, co-hosted by Sabine Hossenfelder. Once again, Sabine and I each brought a few recent science stories to the table, and we took turns introducing them before diving into thoughtful discussions. It’s a format that continues to spark engaging exchanges, and based on the feedback we’ve received, it’s resonating well with listeners.

This time, we covered a wide range of intriguing topics. We began with the latest buzz from the Dark Energy Spectroscopic Instrument suggesting that dark energy might be changing over time. I remain skeptical, but the possibility alone is worth a closer look. We followed that with results from the Euclid space telescope, which has already identified nearly 500 strong gravitational lensing candidates—an impressive yield from just the early data.

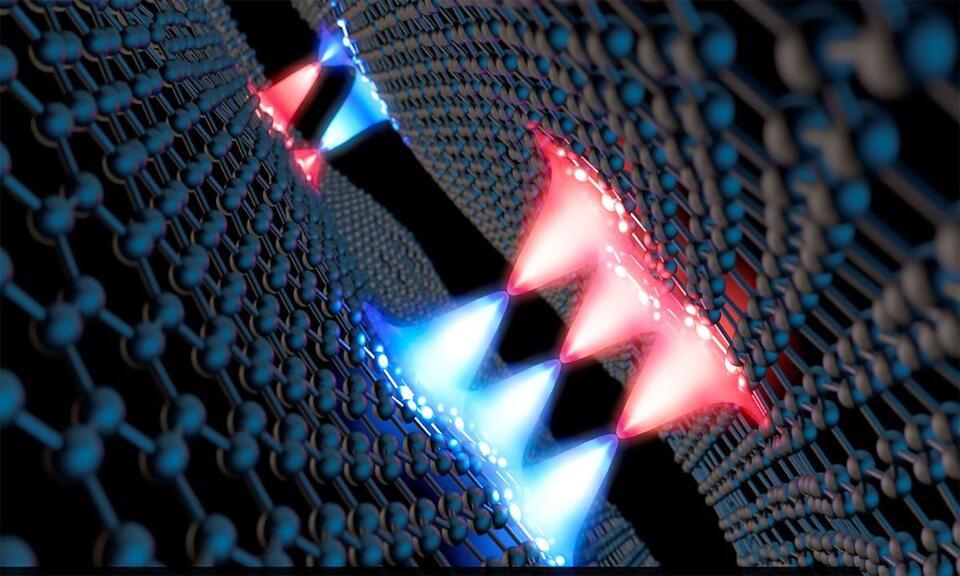

Here we report on low-temperature transport measurements of encapsulated bilayer graphene nanoconstrictions fabricated employing electrode-free AFM-based local anodic oxidation (LAO) nanolithography. This technique allows for the creation of constrictions as narrow as 20 nm. While larger constrictions exhibit an enhanced energy gap, single quantum dot (QD) formation is observed within smaller constrictions with addition energies exceeding 100 meV, which surpass those of previous experiments on patterned QDs. These results suggest that transport through these narrow constrictions is governed by edge disorder combined with quantum confinement effects. Our findings introduce electrode-free AFM-LAO lithography as an easy and flexible method for creating nanostructures with tunable electronic properties without relying on patterning techniques such as e-beam lithography. The excellent control and reproducibility provided by this technique open exciting opportunities for carbon-based quantum electronics and spintronics.

Citation.

Physical Review B
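To give a feel for the 100 meV addition energy quoted in the abstract above, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that the addition energy is entirely classical charging energy, E_add = e²/C, and ignores any level-spacing contribution; the function names are hypothetical and nothing here is taken from the paper itself.

```python
# Rough scale estimate for a quantum dot's addition energy.
# ASSUMPTION (not from the paper): E_add is pure charging energy, E_add = e^2 / C.

E_CHARGE = 1.602176634e-19   # elementary charge in coulombs (exact SI value)
K_B_EV = 8.617333262e-5      # Boltzmann constant in eV/K


def capacitance_from_addition_energy(e_add_mev: float) -> float:
    """Return the capacitance in farads implied by E_add = e^2 / C."""
    e_add_joule = e_add_mev * 1e-3 * E_CHARGE  # meV -> eV -> J
    return E_CHARGE ** 2 / e_add_joule


def equivalent_temperature(e_add_mev: float) -> float:
    """Return the temperature in kelvin at which k_B * T equals the addition energy."""
    return e_add_mev * 1e-3 / K_B_EV


capacitance = capacitance_from_addition_energy(100.0)
temperature = equivalent_temperature(100.0)
print(f"Implied capacitance: ~{capacitance * 1e18:.2f} aF")
print(f"Equivalent temperature: ~{temperature:.0f} K")
```

Under this simplifying assumption, 100 meV corresponds to a capacitance on the attofarad scale and an energy well above the thermal energy at room temperature, which is one way to see why such large addition energies are of interest.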

Physicist Dr. Lídia Del Rio, Essentia Foundation’s Research Fellow for Quantum Information Theory at the University of Zürich, explains to Hans Busstra one of the strangest quantum conundra confronting the foundations of physics: the Frauchiger-Renner (FR) thought experiment.

Scientific papers discussed in this video:

Quantum theory cannot consistently describe the use of itself.

Daniela Frauchiger & Renato Renner: https://www.nature.com/articles/s4146…

Thought experiments in a quantum computer.

Nuriya Nurgalieva, Simon Mathis, Lídia del Rio, Renato Renner: https://arxiv.org/abs/2209.06236

Other interesting links related to the video:

Quantum ‘thought experiment software’: https://github.com/XuemeiGu/Quanundrum

Great Quantum artwork by Nuriya Nurgalieva: https://www.theoryverse.com/art

Part One: Modelling Observers

00:00 Introduction
04:13 The object-subject divide in quantum mechanics
07:58 How would you explain the Wigner’s Friend thought experiment?
09:40 Observations are not facts
12:16 Is collapse relative?
14:11 Losing information = measurement
15:38 How do you model the agent in quantum mechanics?
17:54 What is reversibility in QM?

Part Two: Explaining the Frauchiger-Renner Thought Experiment

22:14 Lídia explains Maxwell’s Demon and how the demon can be modelled
29:28 Formatting the ‘hard drive’ of the demon equals the energy gained
31:20 Lídia explains the Frauchiger-Renner thought experiment
41:51 The quantum circuit of the FR experiment
50:31 Where the experiment gets really weird
54:52 How to make sense of the weirdness?

Part Three: The Implications and Meaning of the FR Experiment

1:03:59 What assumptions CANNOT all be true?
1:07:47 Critique from the physics community on the FR experiment
1:13:30 The philosophical implications of the FR experiment
1:16:04 Agreeing or disagreeing on Heisenberg cuts
1:17:27 Quanundrum software to test thought experiments
1:20:14 (No title)
1:23:16 Does the FR experiment “favor” a many-worlds interpretation, or does it require an epistemic approach?
1:25:04 Every theory, at some point, breaks
1:26:57 On the (in)completeness of quantum theory
1:29:35 What the FR experiment could mean for quantum computers…
1:32:06 What makes the FR experiment REALLY strange?
1:35:31 You cannot have an outside view AND know what’s going on inside…
1:36:01 What does it mean philosophically?
1:40:16 What if objective collapse or many-worlds is true?
1:43:20 Do you believe in free will?
1:45:54 Lídia does believe in an objective world…
1:47:15 What would a world weirder than quantum mechanics look like?
1:52:37 Where does thinking about “different” universes become relevant for physics?
1:55:51 On What the Bleep Do We Know, quantum woo, and the real meaning of quantum mechanics…
1:57:52 Nature doesn’t care about our Heisenberg cut…
1:59:33 Quantum mechanics and non-dualism
2:02:04 Physicists should be aware of their own faiths, religion, and mortality…
2:04:06 On the nature of the self, and how Lídia’s work has informed her outlook on life
2:09:31 Final words

Sesame Street Russian Dolls video, under fair use: Sesame Street Matryoshka Doll 10

All music licensed under Storyblocks and Soundstripe

All stock footage licensed under Storyblocks

Interview content copyright by Essentia Foundation, 2025

www.essentiafoundation.org

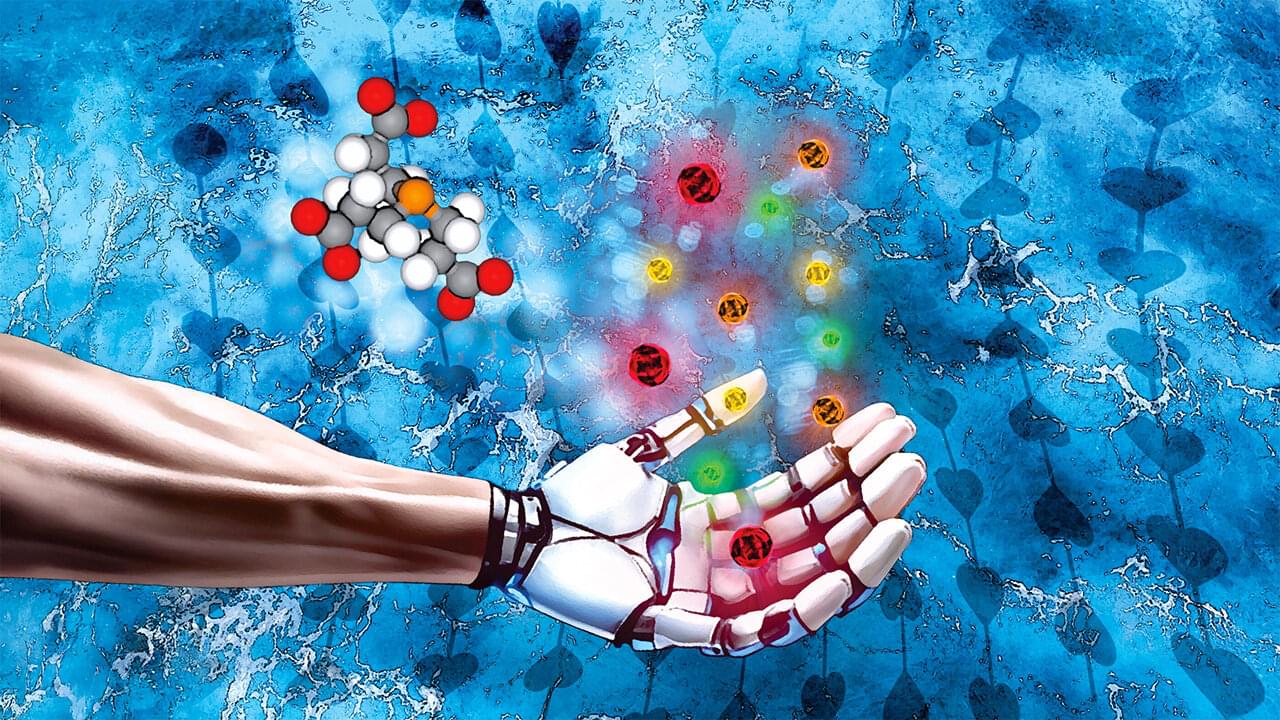

As the demand for innovative materials continues to grow—particularly in response to today’s technological and environmental challenges—research into nanomaterials is emerging as a strategic field. Among these materials, quantum dots are attracting particular attention due to their unique properties and wide range of applications. A team of researchers from ULiège has recently made a significant contribution by proposing a more sustainable approach to the production of these nanostructures.

Quantum dots (QDs) are nanometer-sized semiconductor particles with unique optical and electronic properties. Their ability to absorb and emit light with high precision makes them ideal for use in solar cells, LEDs, medical imaging, and sensors.

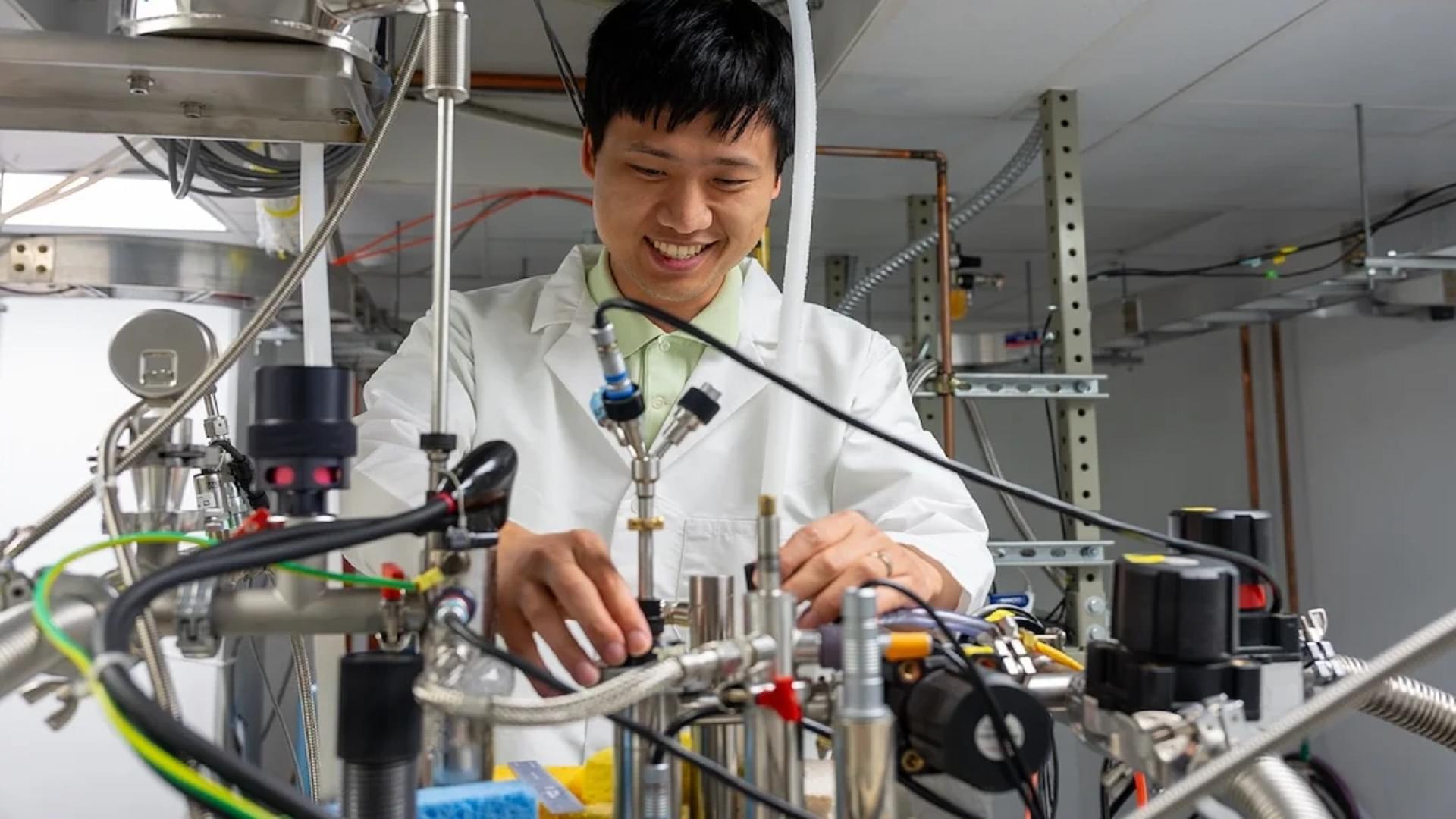

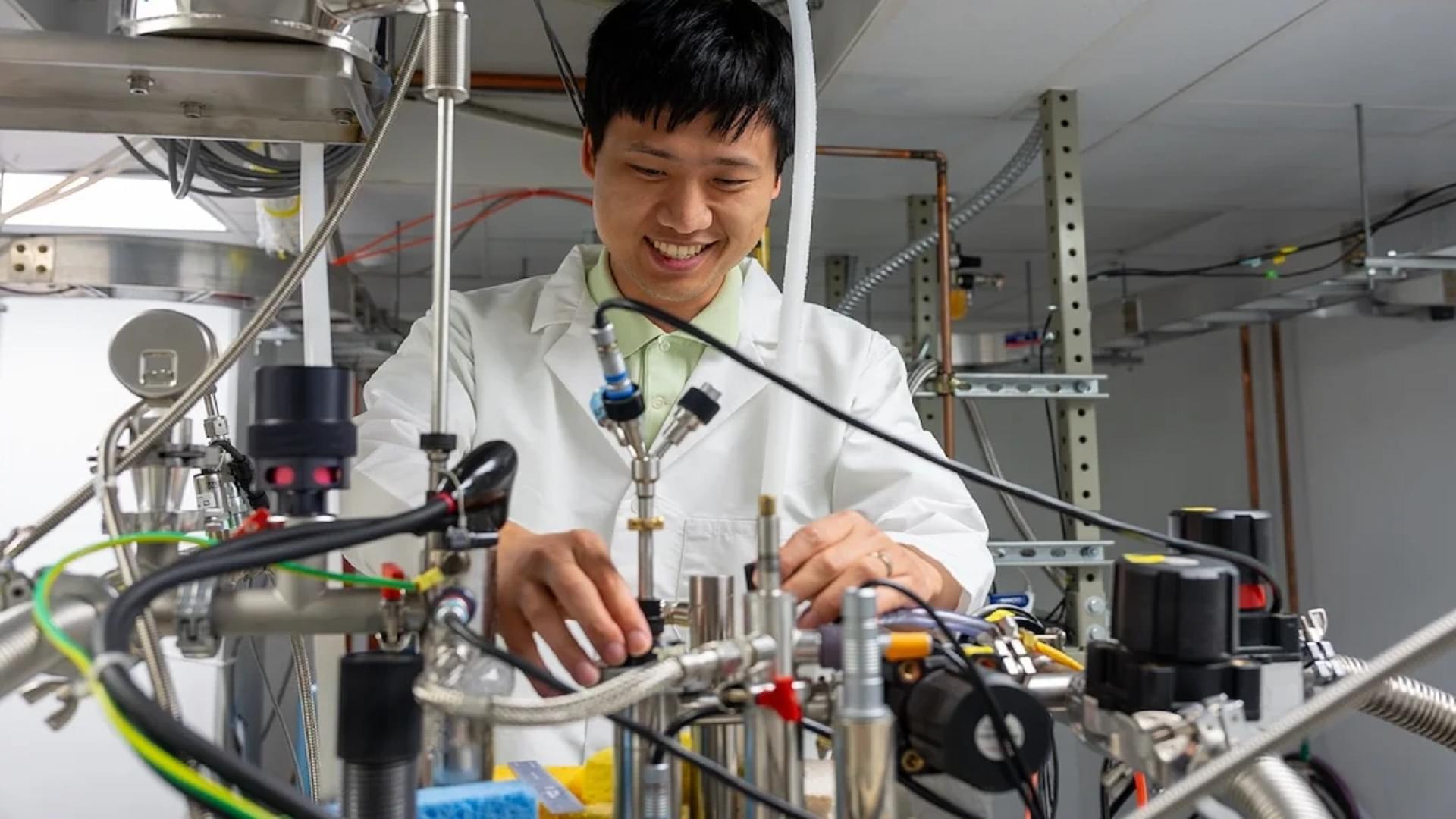

In a recent study, researchers at ULiège developed the first intensified, scalable process to produce cadmium chalcogenide quantum dots (semiconducting compounds widely used in optoelectronics and nanotechnology) in water using a novel, biocompatible chalcogenide source (chalcogens are chemical elements such as sulfur, selenium, and tellurium).

Quantum mechanics has always left people scratching their heads. Tiny particles seem to break the usual laws of nature, hinting at puzzling scenarios that have intrigued physicists for decades and often sparking debates on how these subatomic oddities might push the limits of future technology.

One curious area in this field involves charges that behave in fractions, providing glimpses into phenomena that defy classical logic.

Scientists have spent years studying these strange properties, hoping to uncover new knowledge about how particles might transform the way we store and process information.