Quantum gravity is one of the holy grails of theoretical physics. Here’s how we might turn it into an experimental science at long last.

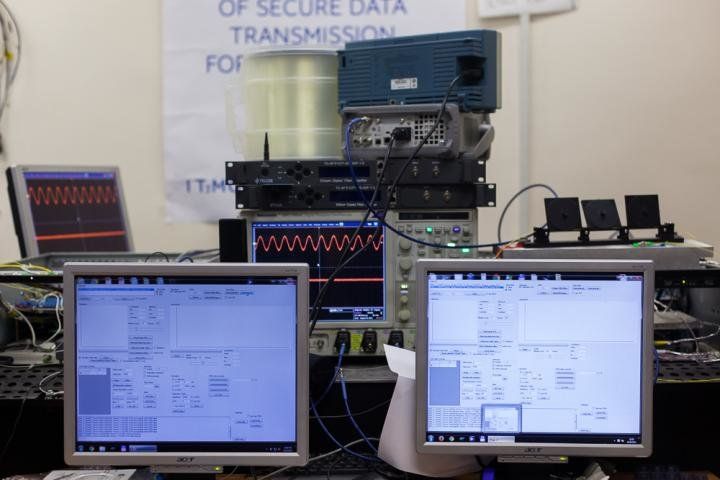

Scientists from ITMO University in Saint Petersburg, Russia, have extended secure quantum data transmission to longer distances (250 kilometers). Nice, and it should be a wake-up call for the US to step up its own efforts.

A group of scientists from ITMO University in Saint Petersburg, Russia, has developed a novel approach to the construction of quantum communication systems for secure data exchange. The experimental device based on the results of the research is capable of transmitting single-photon quantum signals across distances of 250 kilometers or more, which is on par with other cutting-edge analogues. The research paper was published in the journal Optics Express.

Information security is becoming an increasingly critical issue, not only for large companies, banks and defense enterprises, but even for small businesses and individual users. However, the data encryption algorithms we currently use to protect our data are imperfect: in the long term, their logic can be cracked. No matter how complex and intricate the algorithm is, getting around it is just a matter of time.

Unlike algorithm-based encryption, systems that protect information using the fundamental laws of quantum physics can make data transmission completely immune to hacker attacks in the future. Information in a quantum channel is carried by single photons that change irreversibly once an eavesdropper attempts to intercept them, so the legitimate users will instantly know about any kind of intervention.
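The article doesn't say which protocol the ITMO device runs, so as a stand-in here is a minimal Python sketch of the textbook BB84 protocol with an intercept-resend eavesdropper. It assumes an ideal, lossless channel and models each photon only by the bit and basis it was prepared in; the function name `bb84_error_rate` is hypothetical. It shows why interception is detectable: measuring in the wrong basis randomizes the photon, and that shows up as errors in the sifted key.

```python
import random

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Simulate BB84 on an ideal channel and return the sifted-key error rate.

    Bases: 0 = rectilinear, 1 = diagonal. Measuring a photon in a basis other
    than the one it was prepared in yields a uniformly random bit.
    """
    rng = random.Random(seed)
    errors = 0
    sifted = 0
    for _ in range(n_photons):
        alice_bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        # The photon is modeled by the bit and basis it was last prepared in
        photon_bit, photon_basis = alice_bit, alice_basis
        if eavesdrop:
            # Intercept-resend: Eve measures in a random basis and resends her result
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)
            photon_basis = eve_basis
        bob_basis = rng.randint(0, 1)
        if bob_basis == photon_basis:
            bob_bit = photon_bit
        else:
            bob_bit = rng.randint(0, 1)
        # Sifting: keep only rounds where Alice and Bob chose the same basis
        if bob_basis == alice_basis:
            sifted += 1
            if bob_bit != alice_bit:
                errors += 1
    return errors / sifted

if __name__ == "__main__":
    print("no eavesdropper:", bb84_error_rate(20000, eavesdrop=False))
    print("intercept-resend:", bb84_error_rate(20000, eavesdrop=True))
```

On this idealized channel the sifted key is error-free without Eve, while intercept-resend pushes the error rate to roughly 25%, so Alice and Bob can spot her by publicly comparing a random sample of their key bits.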

One thing about quantum: nothing ever stays consistent. That’s why it’s both loved and hated by cybersecurity enthusiasts as well as AI engineers.

When water in a pot is slowly heated to the boil, an exciting duel of energies takes place inside the liquid. On the one hand there is the interaction energy that wants to keep the water molecules together because of their mutual attraction. On the other hand, however, the motional energy, which increases due to heating, tries to separate the molecules. Below the boiling point the interaction energy prevails, but as soon as the motional energy wins the water boils and turns into water vapour. This process is also known as a phase transition. In this scenario the interaction only involves water molecules that are in immediate proximity to one another.

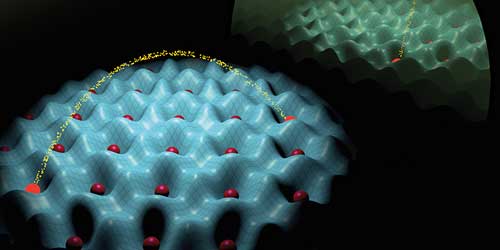

An artificial quantum world of atoms and light: Atoms (red) spontaneously arrange themselves in a checkerboard pattern as a result of the complex interplay between short- and long-range interactions. (Visualizations: ETH Zurich / Tobias Donner)

A team of researchers led by Tilman Esslinger at the Institute for Quantum Electronics at ETH Zurich, and Tobias Donner, a scientist in his group, have now shown that particles can be made to “feel” each other even over large distances. By adding such long-range interactions the physicists were able to observe novel phase transitions that result from energetic three-way battles (“Quantum phases from competing short- and long-range interactions in an optical lattice”).
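Experiments like this are commonly described with an extended Bose-Hubbard model, in which tunneling between sites competes with a short-range on-site repulsion U and a cavity-mediated long-range interaction U_l. As a rough Python sketch (not the authors' analysis), the code below switches tunneling off and compares just two hand-picked configurations at one atom per site on average: a uniform pattern and a checkerboard. The function names, lattice size and parameter values are hypothetical.

```python
def atomic_limit_energy(occ, U, U_l):
    """Energy of an occupation grid occ[i][j] with tunneling switched off (t = 0).

    - Short-range term: U/2 * n * (n - 1) on every site (atoms sharing a site repel).
    - Long-range term: -(U_l / K) * (N_even - N_odd)**2, a global coupling that
      rewards an imbalance between the two checkerboard sublattices.
    The chemical-potential term is omitted because the configurations compared
    below hold the same total number of atoms.
    """
    K = len(occ) * len(occ[0])  # number of lattice sites
    onsite = 0.0
    imbalance = 0
    for i, row in enumerate(occ):
        for j, n in enumerate(row):
            onsite += 0.5 * U * n * (n - 1)
            imbalance += n if (i + j) % 2 == 0 else -n
    return onsite - (U_l / K) * imbalance ** 2

def uniform(L):
    # One atom on every site of an L x L lattice
    return [[1] * L for _ in range(L)]

def checkerboard(L):
    # Two atoms on "even" sites, none on "odd" sites: same total as uniform(L)
    return [[2 if (i + j) % 2 == 0 else 0 for j in range(L)] for i in range(L)]

if __name__ == "__main__":
    L, U = 4, 1.0
    for U_l in (0.3, 0.5, 0.7):
        e_uni = atomic_limit_energy(uniform(L), U, U_l)
        e_cb = atomic_limit_energy(checkerboard(L), U, U_l)
        winner = "checkerboard" if e_cb < e_uni else "uniform"
        print(f"U_l/U = {U_l:.1f}: uniform {e_uni:+.1f}, checkerboard {e_cb:+.1f} -> {winner}")
```

In this crude comparison the checkerboard pays U/2 per site in on-site repulsion but gains U_l per site from the long-range term, so it wins once U_l exceeds U/2. Putting tunneling back in is what adds the third competitor to the contest.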

The question is: what do Stefano Pirandola and Samuel L. Braunstein consider “hybrid” when it comes to QC? Much of today’s quantum research only shows things like “synthetic diamonds” being added to stabilize data storage and transmission, and not much else.

Physics: Unite to build a quantum Internet (Pirandola and Braunstein).

Lovin’ Quantum ESPRESSO

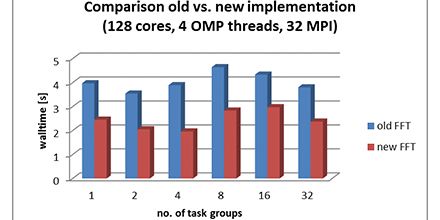

Researchers use specialized software such as Quantum ESPRESSO and a variety of HPC software in conducting quantum materials research. Quantum ESPRESSO is an integrated suite of computer codes for electronic-structure calculations and materials modeling, based on density-functional theory, plane waves and pseudopotentials. Quantum ESPRESSO is coordinated by the Quantum ESPRESSO Foundation and has a growing worldwide user community in academic and industrial research. Its intensive use of dense mathematical routines makes it an ideal candidate for many-core architectures, such as the Intel Xeon Phi coprocessor.
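Why is a plane-wave code so hungry for dense math? In a plane-wave basis the wavefunctions are expanded in reciprocal-lattice vectors G, truncated at a kinetic-energy cutoff (`ecutwfc` in Quantum ESPRESSO's pw.x input, in Rydberg). The Python sketch below simply counts how many G vectors fall under the cutoff. It is a simplification, assuming a simple cubic cell, only the Γ point, and Rydberg atomic units where a plane wave's kinetic energy is |G|²; the function names are hypothetical.

```python
import itertools
import math

def count_plane_waves(a_bohr, ecut_ry):
    """Count reciprocal-lattice vectors G of a simple cubic cell with |G|^2 <= ecut.

    Rydberg atomic units: lengths in bohr, energies in Ry, kinetic energy = |G|^2.
    """
    b = 2 * math.pi / a_bohr              # reciprocal-lattice spacing
    nmax = int(math.sqrt(ecut_ry) / b) + 1
    count = 0
    for n1, n2, n3 in itertools.product(range(-nmax, nmax + 1), repeat=3):
        if b * b * (n1 * n1 + n2 * n2 + n3 * n3) <= ecut_ry:
            count += 1
    return count

def estimate_plane_waves(a_bohr, ecut_ry):
    """Sphere of radius sqrt(ecut) divided by the reciprocal-cell volume (2*pi)^3 / V."""
    volume = a_bohr ** 3
    return volume * ecut_ry ** 1.5 / (6 * math.pi ** 2)

if __name__ == "__main__":
    for ecut in (20.0, 40.0, 80.0):
        print(ecut, count_plane_waves(10.2, ecut), round(estimate_plane_waves(10.2, ecut)))
```

The basis size grows as V·Ecut^(3/2), so larger cells and tighter cutoffs quickly produce the big matrices and FFTs that make codes like this a natural fit for many-core hardware.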

The Intel Parallel Computing Centers at Cineca and Lawrence Berkeley National Lab (LBNL), along with the National Energy Research Scientific Computing Center (NERSC), are at the forefront of using HPC software and modifying the Quantum ESPRESSO (QE) code to take advantage of the Intel Xeon processors and Intel Xeon Phi coprocessors used in quantum materials research. In addition to Quantum ESPRESSO, the teams use tools such as Intel compilers and libraries, Intel VTune and OpenMP. The goal is to fold their changes into the public version of Quantum ESPRESSO, so that scientists can benefit from the improved optimization and parallelization without having to modify legacy code by hand.

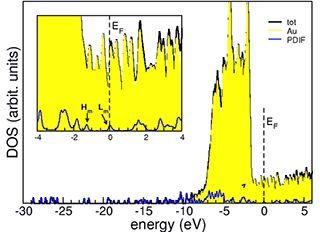

Electrical conductivity of a PDI-FCN2 molecule.

For communications, teleportation is definitely key. When it comes to the quantum Internet, one only needs to consult researchers at Los Alamos National Lab to see how they have evolved in this space since 2009.
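For readers who haven't seen teleportation spelled out, here is a minimal pure-Python sketch of the textbook three-qubit protocol (not Los Alamos's specific scheme, which isn't described here). Qubit 0 holds the state to send, qubits 1 and 2 share a Bell pair, Alice applies CNOT and Hadamard and measures, and Bob applies X and/or Z depending on her two classical bits. Instead of sampling a random measurement, the hypothetical `teleport` function post-selects each outcome so every branch can be checked.

```python
import math

N_QUBITS = 3

def mask(q):
    # Qubit 0 is the most significant bit of the basis-state index
    return 1 << (N_QUBITS - 1 - q)

def apply_h(state, q):
    """Hadamard on qubit q of a state vector (list of complex amplitudes)."""
    m = mask(q)
    out = list(state)
    for i in range(len(state)):
        if not i & m:
            j = i | m
            out[i] = (state[i] + state[j]) / math.sqrt(2)
            out[j] = (state[i] - state[j]) / math.sqrt(2)
    return out

def apply_cnot(state, control, target):
    """Flip the target bit of every basis state whose control bit is 1."""
    mc, mt = mask(control), mask(target)
    out = list(state)
    for i in range(len(state)):
        if i & mc and not i & mt:
            j = i | mt
            out[i], out[j] = state[j], state[i]
    return out

def teleport(alpha, beta, m0, m1):
    """Return Bob's corrected amplitudes given Alice's measurement outcome (m0, m1)."""
    s = 1 / math.sqrt(2)
    # alpha|0> + beta|1> on qubit 0, Bell pair (|00> + |11>)/sqrt(2) on qubits 1 and 2
    state = [0j] * 8
    state[0b000] = alpha * s
    state[0b011] = alpha * s
    state[0b100] = beta * s
    state[0b111] = beta * s
    state = apply_cnot(state, 0, 1)
    state = apply_h(state, 0)
    # Post-select Alice's outcome and renormalize Bob's two amplitudes
    base = m0 * mask(0) + m1 * mask(1)
    bob = [state[base], state[base + mask(2)]]
    norm = math.sqrt(abs(bob[0]) ** 2 + abs(bob[1]) ** 2)
    bob = [amp / norm for amp in bob]
    if m1:  # X correction: swap |0> and |1> amplitudes
        bob = [bob[1], bob[0]]
    if m0:  # Z correction: flip the sign of the |1> amplitude
        bob = [bob[0], -bob[1]]
    return bob

if __name__ == "__main__":
    for m0 in (0, 1):
        for m1 in (0, 1):
            print((m0, m1), teleport(0.6, 0.8j, m0, m1))
```

Whatever Alice measures, Bob ends up with the original amplitudes, yet only two classical bits travel between them; the entangled pair carries the rest.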

Advances in quantum communication will come from investment in hybrid technologies, explain Stefano Pirandola and Samuel L. Braunstein.

Another pre-quantum-computing interim solution for supercomputing. So we have this as well as Nvidia’s GPUs. I wonder who else?

In summer 2015, US President Barack Obama signed an executive order intended to provide the country with an exascale supercomputer by 2025. The machine would be 30 times more powerful than today’s leading system, China’s Tianhe-2. Based on extrapolations of existing electronic technology, such a machine would draw close to 0.5 GW – the entire output of a typical nuclear plant. It brings into question the sustainability of continuing down the same path for gains in computing.
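A quick back-of-the-envelope check of those figures in Python. It assumes "exascale" means 10^18 floating-point operations per second and uses Tianhe-2's Linpack result of 33.86 PFLOP/s from the 2013 to 2015 TOP500 lists; the 0.5 GW figure is the extrapolation quoted above, not a measurement.

```python
TIANHE2_RMAX_FLOPS = 33.86e15   # Tianhe-2 Linpack Rmax, FLOP/s
EXASCALE_FLOPS = 1e18           # one exaFLOP/s
POWER_WATTS = 0.5e9             # ~0.5 GW extrapolated draw for an exascale machine

speedup = EXASCALE_FLOPS / TIANHE2_RMAX_FLOPS
joules_per_flop = POWER_WATTS / EXASCALE_FLOPS

print(f"exascale vs Tianhe-2: {speedup:.1f}x")
print(f"energy budget per operation: {joules_per_flop * 1e12:.0f} pJ")
```

The ratio lands just under 30x, matching the claim, and the extrapolated machine would spend about 500 picojoules per operation, which is the budget optical interconnect and other approaches are trying to cut.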

One way to reduce the energy cost would be to move to optical interconnects. In his keynote at OFC in March 2016, Professor Yasuhiko Arakawa of the University of Tokyo said high performance computing (HPC) will need optical chip-to-chip communication to provide the data bandwidth for future supercomputers. But digital processing itself presents a problem as designers try to deal with issues such as dark silicon – the need to disable large portions of a multibillion-transistor processor at any one time to prevent it from overheating. Photonics may have an answer there as well.

Optalysys founder Nick New says: “With the limits of Moore’s Law being approached, there needs to be a change in how things are done. Some technologies are out there, like quantum computing, but these are still a long way off.”

When I read articles like this one, I wonder whether folks really understand the full impact of what quantum brings to all things in our daily lives.

The high performance computing market is going through a technology transition – the Co-Design transition. As has already been discussed in many articles, this transition has emerged in order to solve the performance bottlenecks of today’s infrastructures and applications, performance bottlenecks that were created by multi-core CPUs and the existing CPU-centric system architecture.

How are multi-core CPUs the source of today’s performance bottlenecks? To understand that, we need to go back to the era of single-core CPUs. Back then, performance gains came from increases in CPU frequency and from reductions in the latency of networking functions (network adapters and switches). Each new product generation brought faster CPUs and lower-latency network adapters and switches, and that combination was the main performance factor. But this could not continue forever. CPU frequency could not be increased any further due to power limitations, so instead of speeding up each application process, we began using more CPU cores in parallel, executing more processes at the same time. This let us keep improving application performance, not by running faster, but by running more at the same time.

This new paradigm of increasing the number of CPU cores dramatically increased the burden on the interconnect and, moreover, turned the interconnect into the main performance enabler of the system. The key performance concern became how fast all the CPU processes could be synchronized and how fast data could be aggregated and distributed among them.
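To make the interconnect argument concrete, here is a toy Python model. It is an assumption, not taken from the article: each parallel step splits a fixed amount of work across p cores, then synchronizes with a tree-style collective (such as an allreduce) whose latency grows as log2(p). The work, latency and function names are all hypothetical; the point is only that as the core count rises, synchronization rather than computation comes to dominate each step.

```python
import math

def step_time(work, cores, latency):
    """Toy model of one parallel step.

    Compute time shrinks as work / cores, while a tree-based synchronization
    costs one latency per stage, i.e. latency * log2(cores).
    """
    compute = work / cores
    sync = latency * math.log2(cores)
    return compute, sync

def comm_fraction(work, cores, latency):
    """Share of the step spent synchronizing rather than computing."""
    compute, sync = step_time(work, cores, latency)
    return sync / (compute + sync)

if __name__ == "__main__":
    # Hypothetical numbers: 1 s of serial work per step, 10 microseconds per sync stage
    for cores in (1, 16, 256, 4096, 65536):
        print(f"{cores:>6} cores: {comm_fraction(1.0, cores, 10e-6):.1%} of the step is sync")
```

In this model shaving interconnect latency pays off more with every doubling of core count, which is the co-design argument in miniature.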

D-Wave not only created the standard for quantum computing; it is the standard for QC, in North America at least. Granted, more competitors will enter the field; however, D-Wave is the commercial competitor with proven technology and credentials that others will have to match or surpass to be a real player in the QC landscape.

Burnaby-based D-Wave, which was founded in 1999 as a spin-off from the physics department of the University of British Columbia, has become nothing less than the leading repository of quantum computing intellectual property in the world, says the analyst. He thinks D-Wave’s customers will be positioned to gain massive competitive advantages because they will be able to solve problems that normal computers simply can’t, such as those in DNA sequencing, financial analysis, and artificial intelligence.

“We stand at the precipice of a computing revolution,” says Kim. “Processing power is taking a huge leap forward thanks to ingenious innovations that leverage the counter-intuitive and unique properties of the quantum realm. Quantum mechanics, theorized many decades ago, is finally ready for prime time. Imagine, if we could go back to 1946 and have the same foresight with the ENIAC, the first electronic general-purpose computer. ENIAC’s pioneers created a new industry and opened up unimaginable possibilities. The same opportunity exists today with D-Wave Systems. D-Wave is the world’s first quantum computing company and represents the most unique and disruptive company that we have seen in our career.”

Part 2

In Part 1 of the journey, we saw the leading observations that need explaining, and we want to explain them through the theory of relativity and quantum mechanics. No technical or expert knowledge of these theories is needed yet, only a scratch at their implications. So let us continue.

THE RELATIVITY THEORY

Judging from the Hubble expansion, the galaxies were closer together in the distant past, though certainly not in the form telescopes now see as they recede. In fact, if the galaxies are receding, it also means the Universe is expanding.

Therefore, when we run the receding galaxies back into the far distant past, they should end up at a point somewhere, sometime, with the smallest imaginable extension, if that extension is conceivable at all. Scientists call it the singularity, a mathematical deduction from the theory of relativity. How could this immeasurable Universe of galaxy clusters that we now see ever have existed in that point called the singularity?
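Why should everything converge on one point? Hubble's law says recession speed grows in proportion to distance, v = H0 · d, so running any galaxy backwards at its current speed takes d / v = 1 / H0, the same time for every galaxy. The Python sketch below checks that arithmetic. It assumes a round H0 = 70 km/s per megaparsec and a constant expansion rate, which is a simplification; full relativity-based models, where the rate changes over time, give an age of about 13.8 billion years.

```python
KM_PER_MPC = 3.0857e19       # kilometres in one megaparsec
SECONDS_PER_YEAR = 3.156e7
H0 = 70.0                    # assumed Hubble constant, km/s per Mpc

def time_to_converge(distance_mpc, h0=H0):
    """Time in years for a galaxy receding at v = h0 * d to travel back distance d.

    Assumes the recession speed stays constant over the whole trip.
    """
    velocity_km_s = h0 * distance_mpc
    distance_km = distance_mpc * KM_PER_MPC
    return distance_km / velocity_km_s / SECONDS_PER_YEAR

if __name__ == "__main__":
    for d in (10, 100, 1000):  # distances in Mpc
        print(f"{d:>5} Mpc: {time_to_converge(d) / 1e9:.2f} billion years")
```

Near or far, every galaxy "arrives" back at roughly the same moment, about 14 billion years ago, which is exactly why reversing the expansion points to a single starting point.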