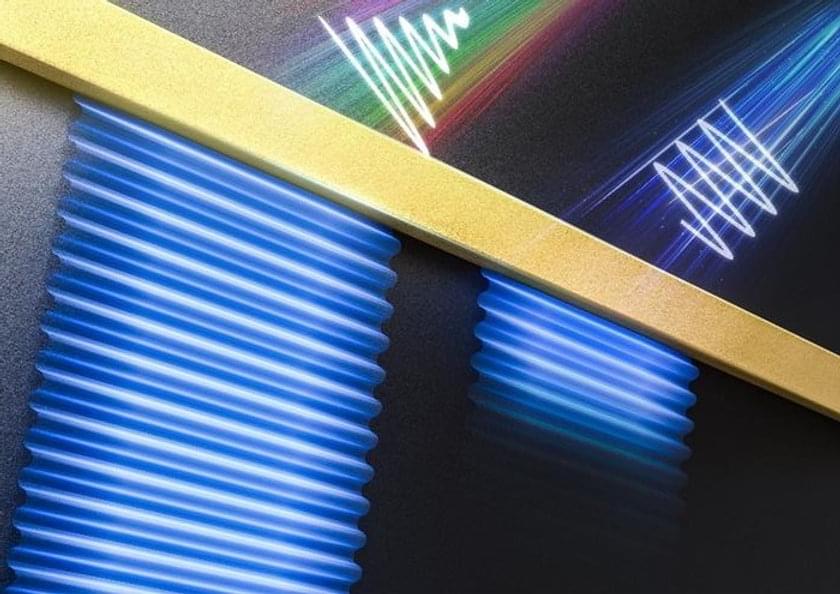

A collaborative research team co-led by Professor Shuang ZHANG, Interim Head of the Department of Physics at The University of Hong Kong (HKU), and Professor Qing DAI of the National Center for Nanoscience and Technology, China, has introduced a solution to a prevalent problem in nanophotonics, the study of light at an extremely small scale. Their findings, recently published in the academic journal Nature Materials, propose a synthetic complex frequency wave (CFW) approach to address optical loss in polariton propagation. The approach could lead to more efficient light-based devices for faster, more compact data storage and processing in computer chips and storage hardware, as well as improved accuracy in sensors, imaging techniques, and security systems.

Surface plasmon polaritons and phonon polaritons can confine light at small scales, which gives them advantages such as efficient energy storage, local field enhancement, and high sensitivity. However, their practical applications are hindered by ohmic loss, the energy dissipation that occurs when they interact with natural materials.

Over the past three decades, this limitation has impeded progress in nanophotonics for sensing, superimaging, and nanophotonic circuits. Overcoming ohmic loss would significantly enhance device performance, enabling advances in sensing technology, high-resolution imaging, and nanophotonic circuits.