Aging is a universal experience, evident through changes like wrinkles and graying hair. However, aging goes beyond the surface; it begins within our cells. Over time, our cells gradually lose their ability to perform essential functions, leading to a decline that affects every part of our bodies, from our cognitive abilities to our immune health.

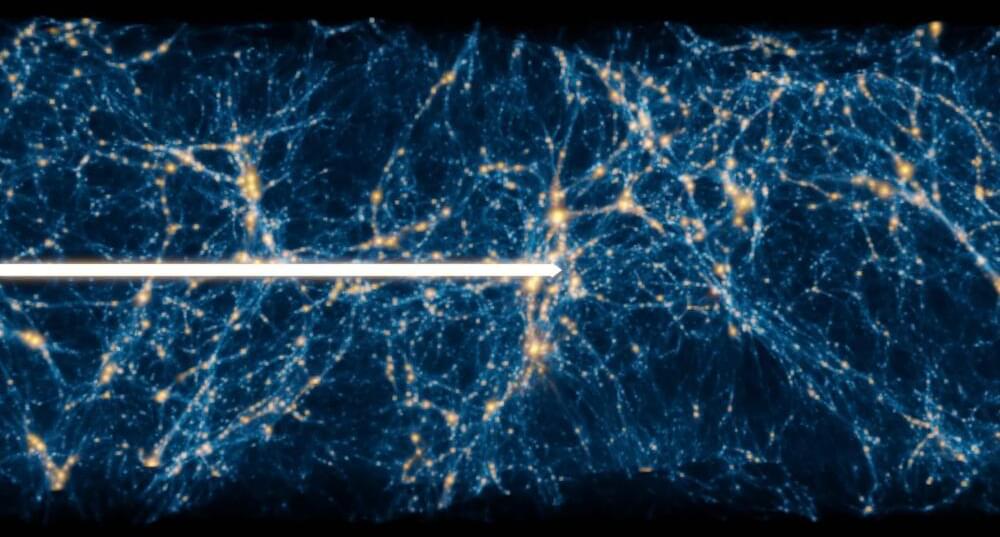

To understand how cellular changes lead to age-related disorders, Calico scientists are using advanced RNA sequencing to map molecular changes in individual cells over time in the roundworm C. elegans. Much as cartographers chart roads and landscapes, we’re charting the complexities of our biology. The resulting atlases uncover cell characteristics, functions, and interactions, providing deeper insights into how our bodies age.

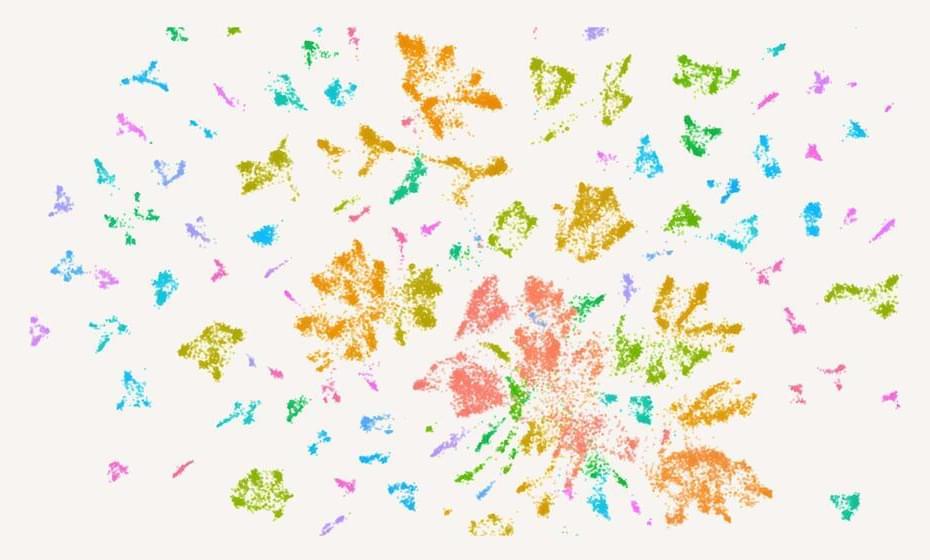

In the early 1990s, Cynthia Kenyon, Vice President of Aging Research at Calico, and her former team at UCSF discovered genes in C. elegans that control lifespan. These genes, which influence IGF-1 signaling, function similarly to extend lifespan in many other organisms, including mammals. The genetic similarities between this tiny worm and more complex animals make it a useful model for studying the aging process. In work published in Cell Reports last year, our researchers created a detailed map of gene activity in every cell of the C. elegans body throughout its development, providing a comprehensive blueprint of its cellular diversity and functions. They found that aging is an organized process, not merely random deterioration. Each cell type follows its own aging path: many activate cell-specific protective gene expression pathways, and some cell types age faster than others. Even within the same cell type, the rate of aging can vary.