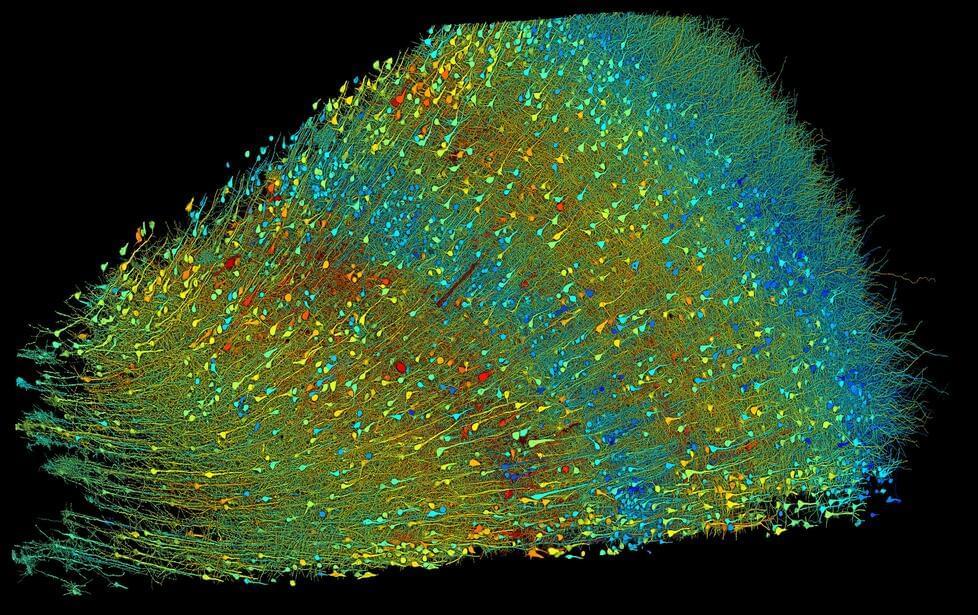

We are all very familiar with the concept of the Earth's magnetic field. It turns out that most objects in space have magnetic fields, but they are quite tricky to measure. Astronomers have developed an ingenious way to measure the magnetic field of the Milky Way using polarized light from interstellar dust grains that align themselves with the magnetic field lines. A new survey has begun this mapping process and has already covered an area of sky equivalent to 15 times that of the full Moon.

Many people will remember school experiments that used iron filings to reveal the magnetic field around a bar magnet. Capturing the magnetic field of the Milky Way is not quite so easy, though. The new method relies on the tiny dust grains that permeate the space between the stars.

The dust grains are similar in size to smoke particles, but they are not spherical. Rather like a boat swinging round in a current, the elongated grains tend to line up with the local magnetic field, spinning with their long axes perpendicular to the field lines. Because the grains are aligned, the faint glow they emit, in the same frequency range as the cosmic microwave background, is polarized, and it is this polarized glow that astronomers have been tuning in to.