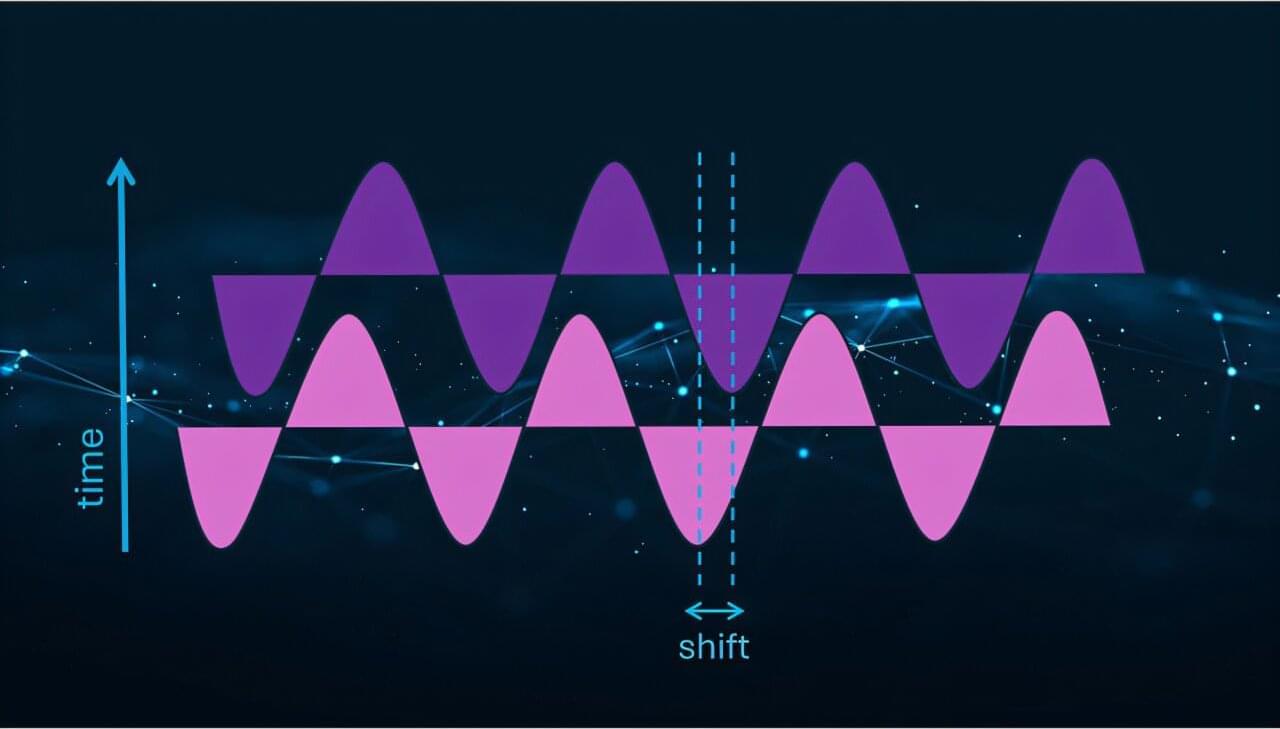

Scattering takes place across the universe at large and minuscule scales. Billiard balls clank off each other in bars, the nuclei of atoms collide to power the stars and create heavy elements, and even sound waves deviate from their original trajectory when they hit particles in the air.

Understanding such scattering can lead to discoveries about the forces that govern the universe. In a recent publication in Physical Review C, researchers from Lawrence Livermore National Laboratory (LLNL), the InQubator for Quantum Simulations and the University of Trento developed an algorithm for a quantum computer that accurately simulates scattering.

“Scattering experiments help us probe fundamental particles and their interactions,” said LLNL scientist Sofia Quaglioni. “The scattering of particles in matter [materials, atoms, molecules, nuclei] helps us understand how that matter is organized at a microscopic level.”