
“I read more than my share of textbooks,” Gates says. “But it’s a pretty limited way to learn something. Even the best text can’t figure out which concepts you understand and which ones you need more help with.”

Software can be used to create a much more dynamic learning experience, he says.

Gates gives the example of learning algebra. “Instead of just reading a chapter on solving equations, you can look at the text online, watch a super-engaging video that shows you how it’s done, and play a game that reinforces the concepts,” he writes. “Then you solve a few problems online, and the software creates new quiz questions to zero in on the ideas you’re not quite getting.”
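The adaptive loop Gates describes, where the software keeps generating new questions on whatever you are weakest at, can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not any particular product's method: the concept names, the moving-average mastery estimate, and the `Learner` and `make_question` helpers are all hypothetical.

```python
import random
from dataclasses import dataclass, field

# Hypothetical concepts for a "solving linear equations" unit (illustrative only).
CONCEPTS = ("one_step", "two_step", "variables_both_sides")


@dataclass
class Learner:
    # Estimated mastery per concept, from 0.0 (none) to 1.0 (full); everyone starts at 0.5.
    mastery: dict = field(default_factory=lambda: {c: 0.5 for c in CONCEPTS})

    def record(self, concept: str, correct: bool, rate: float = 0.3) -> None:
        # Exponential moving average: move toward 1 after a correct answer, toward 0 after a miss.
        target = 1.0 if correct else 0.0
        self.mastery[concept] += rate * (target - self.mastery[concept])

    def weakest(self) -> str:
        # The concept with the lowest estimate gets the next question.
        return min(self.mastery, key=self.mastery.get)


def make_question(concept: str, rng: random.Random) -> tuple[str, int]:
    """Generate a fresh equation whose integer solution x is chosen in advance."""
    x = rng.randint(-9, 9)
    a = rng.randint(2, 9)
    b = rng.randint(1, 9)
    if concept == "one_step":
        return f"x + {b} = {x + b}", x
    if concept == "two_step":
        return f"{a}x + {b} = {a * x + b}", x
    c = rng.randint(1, a - 1)  # c < a, so the x terms never cancel
    # a*x + b = c*x + d  requires  d = (a - c)*x + b
    return f"{a}x + {b} = {c}x + {(a - c) * x + b}", x
```

A missed two-step problem lowers that concept's estimate from 0.5 to 0.35, so `weakest()` steers the next freshly generated question toward two-step equations. Real tutoring systems typically use richer learner models (Bayesian knowledge tracing, for example); the moving average simply keeps the sketch short.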

Circa 2018

The experimental mastery of complex quantum systems is required for future technologies such as quantum computers and quantum encryption. Scientists from the University of Vienna and the Austrian Academy of Sciences have now broken new ground. Instead of two-dimensional qubits, they entangled more complex quantum systems, which increases the information capacity for the same number of particles. The methods and technologies they developed could eventually enable the teleportation of complex quantum systems. Their results, “Experimental Greenberger-Horne-Zeilinger entanglement beyond qubits,” were recently published in the journal Nature Photonics.

Similar to bits in conventional computers, qubits are the smallest unit of information in quantum systems. Big companies like Google and IBM are competing with research institutes around the world to produce an increasing number of entangled qubits and develop a functioning quantum computer. But a research group at the University of Vienna and the Austrian Academy of Sciences is pursuing a new path to increase the information capacity of complex quantum systems.

The idea behind it is simple: Instead of just increasing the number of particles involved, the complexity of each system is increased. “The special thing about our experiment is that for the first time, it entangles three photons beyond the conventional two-dimensional nature,” explains Manuel Erhard, first author of the study. For this purpose, the Viennese physicists used quantum systems with more than two possible states—in this particular case, the angular momentum of individual light particles. These individual photons now have a higher information capacity than qubits. However, the entanglement of these light particles turned out to be difficult on a conceptual level. The researchers overcame this challenge with a groundbreaking idea: a computer algorithm that autonomously searches for an experimental implementation.
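To make “beyond qubits” concrete: an n-particle Greenberger-Horne-Zeilinger (GHZ) state in d dimensions is the equal superposition of all particles sharing the same level, (|0…0⟩ + |1…1⟩ + … + |d-1…d-1⟩)/√d. The Python sketch below builds that textbook state and checks two things: n particles with d levels each span d^n basis states, or n·log2(d) bits (versus n bits for qubits), and one particle's reduced state has purity 1/d, the minimum possible, meaning it is maximally entangled with the others. Three particles in three dimensions is an illustrative assumption; the text does not give the exact state prepared in Vienna, and the function names are our own.

```python
import math


def ghz_state(n_particles: int, dim: int) -> list[complex]:
    """Amplitudes of the n-particle, d-level GHZ state in the product basis.

    |GHZ> = (|0...0> + |1...1> + ... + |d-1,...,d-1>) / sqrt(d)
    """
    psi = [0j] * dim ** n_particles
    for k in range(dim):
        # Basis index of |k k ... k>: every base-d digit equals k.
        index = sum(k * dim ** p for p in range(n_particles))
        psi[index] = 1 / math.sqrt(dim)
    return psi


def capacity_bits(n_particles: int, dim: int) -> float:
    # d**n basis states in total, i.e. n * log2(d) bits.
    return n_particles * math.log2(dim)


def one_particle_purity(psi: list[complex], n_particles: int, dim: int) -> float:
    """Tr(rho^2) for the first particle after tracing out all the others.

    1.0 means no entanglement with the rest; 1/dim is the maximally entangled minimum.
    """
    rest = dim ** (n_particles - 1)
    # rho[i][j] = sum_r psi[i, r] * conj(psi[j, r]), with the first particle as the leading digit.
    rho = [[sum(psi[i * rest + r] * psi[j * rest + r].conjugate() for r in range(rest))
            for j in range(dim)] for i in range(dim)]
    return sum(abs(rho[i][j]) ** 2 for i in range(dim) for j in range(dim))
```

For example, `capacity_bits(3, 3)` is about 4.75 bits against 3 for three qubits, and `one_particle_purity(ghz_state(3, 3), 3, 3)` comes out at 1/3 (up to rounding), compared with 1/2 for the ordinary three-qubit GHZ state.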

The transition from PCs to QCs will not merely continue the doubling of computing power, in accord with Moore’s Law. It will induce a paradigm shift, both in the power of computing (at least for certain problems) and in the conceptual frameworks we use to understand computation, intelligence, neuroscience, social interactions, and sensory perception.

Today’s PCs depend, of course, on quantum mechanics for their proper operation. But their computations do not exploit two computational resources unique to quantum theory: superposition and entanglement. To call them computational resources is already a major conceptual shift. Until recently, superposition and entanglement were regarded primarily as mathematically well-defined but psychologically incomprehensible oddities of the quantum world, fodder for interminable and apparently unfruitful philosophical debate. But they turn out to be more than idle curiosities. They are bona fide computational resources that can solve certain problems that are intractable with classical computers. The best-known example is Peter Shor’s quantum algorithm, which can, in principle, factor large integers efficiently and thereby break encryption schemes, such as RSA, that are impenetrable to classical algorithms.
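Shor’s algorithm works by reducing factoring to order finding: for a number a that shares no factor with n, find the smallest r with a^r ≡ 1 (mod n); an even r usually yields a factor of n through a greatest-common-divisor step. Only the order-finding step needs a quantum computer. The Python sketch below runs the classical reduction with a brute-force loop standing in for the quantum subroutine, so it shows the logic but, unlike Shor’s algorithm, takes exponential time on large numbers. The function names are our own.

```python
from math import gcd


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    This brute-force loop is the step Shor's algorithm replaces with a quantum
    subroutine built on the quantum Fourier transform.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(n: int, a: int):
    """Classical part of Shor's algorithm: turn the order of a mod n into a factor of n."""
    g = gcd(a, n)
    if g != 1:
        return g  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None  # odd order: retry with a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a**(r/2) = -1 (mod n) gives only trivial factors: retry
    # n divides (y - 1)(y + 1) but neither factor alone, so the gcd is a proper factor.
    return gcd(y - 1, n)
```

With n = 15 and a = 7, the order is 4, 7² mod 15 = 4, and gcd(4 - 1, 15) = 3 recovers a factor. When the function returns `None`, the algorithm simply retries with another randomly chosen a.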

The issue is the “in principle” part. Quantum theory is well established, and quantum computation, although a relatively young discipline, has an impressive array of algorithms that can in principle run circles around classical algorithms on several important problems. But what about in practice? Not yet, and not by a long shot. Formidable materials-science problems must be solved before the promise of quantum computation can be fulfilled by tangible quantum computers: instantiating quantum bits (qubits) and quantum gates, for example, and avoiding decoherence, the loss of fragile quantum states through unwanted interaction with the environment. Many experts bet these problems can’t adequately be solved. I think this bet is premature. We will have laptop QCs, and they will transform our world.

A team of researchers affiliated with several institutions in Austria and Germany has shown that introducing environmental noise to a line of ions can lead to enhanced transport of energy across them. In their paper published in Physical Review Letters, the researchers describe their experiments and why they believe their findings will be helpful to other researchers.

Prior research has shown that the motion of electrons through a conductive material can be described by the equations of quantum mechanics. In the real world, however, that motion can be hindered by noise in the environment, which suppresses energy transport. Prior research has also shown that electrons moving through a material can be described as waves; if the waves remain in step, they are said to be coherent. Such waves can be scattered by defects or disorder in an atomic lattice, and when the scattered waves interfere so that they stay confined to one region instead of spreading, flow is suppressed. This effect is known as Anderson localization. In this new effort, the researchers have shown that Anderson localization can be overcome through the use of environmental noise.

The team trapped 10 calcium ions and held them in place in a line, forming a one-dimensional crystal. Lasers were used to switch the ions between states, and laser pulses introduced energy into the ion chain, allowing the researchers to watch the energy move along the line from one end to the other. To create the disorder that produces Anderson localization, they aimed an individual laser beam at each ion; because the beams had different intensities, each ion’s energy was shifted by a different amount. With this disorder in place, the team then created noise by randomly varying the intensity of the beam on each ion, which made the ions’ frequencies wobble. It was this wobble, the team found, that allowed energy to move between the ions despite the Anderson localization.
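The mechanism described above, a chain whose sites carry fixed random energy offsets (static disorder) plus random, time-varying offsets (noise), can be explored with a standard single-excitation tight-binding model. The Python sketch below is a toy under our own assumptions, not the team’s analysis: it sets ħ = 1, uses nearest-neighbour hopping J, draws static offsets uniformly from [-W, W] to stand in for the unequal laser intensities, redraws Gaussian kicks of standard deviation σ at every time step to stand in for the frequency wobble, and integrates the Schrödinger equation with fourth-order Runge-Kutta. All names and parameter values are hypothetical.

```python
import random


def _rk4_step(psi, energies, J, dt):
    """One fourth-order Runge-Kutta step of i dpsi/dt = H psi (hbar = 1).

    H has on-site energies `energies` and hopping J between neighbouring sites.
    """
    n = len(psi)

    def rhs(v):
        # Compute -i * H v for the nearest-neighbour chain Hamiltonian.
        hv = [energies[j] * v[j] for j in range(n)]
        for j in range(n - 1):
            hv[j] += J * v[j + 1]
            hv[j + 1] += J * v[j]
        return [-1j * x for x in hv]

    k1 = rhs(psi)
    k2 = rhs([p + 0.5 * dt * k for p, k in zip(psi, k1)])
    k3 = rhs([p + 0.5 * dt * k for p, k in zip(psi, k2)])
    k4 = rhs([p + dt * k for p, k in zip(psi, k3)])
    return [p + dt / 6 * (a + 2 * b + 2 * c + d)
            for p, a, b, c, d in zip(psi, k1, k2, k3, k4)]


def evolve(disorder, noise, n_sites=10, J=1.0, t_max=20.0, dt=0.01,
           disorder_seed=0, noise_seed=1):
    """Site populations at t_max for an excitation that starts on site 0.

    disorder: half-width W of the uniform static energy offsets (fixed in time).
    noise:    standard deviation of Gaussian energy kicks redrawn every step.
    """
    offsets = random.Random(disorder_seed)
    base = [offsets.uniform(-disorder, disorder) for _ in range(n_sites)]
    kicks = random.Random(noise_seed)
    psi = [0j] * n_sites
    psi[0] = 1 + 0j
    for _ in range(round(t_max / dt)):
        energies = [e + kicks.gauss(0.0, noise) for e in base]
        psi = _rk4_step(psi, energies, J, dt)
    return [abs(a) ** 2 for a in psi]


def mean_end_population(disorder, noise, runs=20, **kwargs):
    """Population on the far end of the chain, averaged over noise realizations."""
    total = sum(evolve(disorder, noise, noise_seed=s, **kwargs)[-1] for s in range(runs))
    return total / runs
```

Comparing `mean_end_population(W, 0.0)` with `mean_end_population(W, sigma)` for strong disorder W and a range of σ is one way to look for noise-assisted transport in this toy model; the size of any enhancement depends on the chosen parameters, so no particular numbers are implied here.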

Love: The Glue That Holds the Universe Together. “Love contrasts with fear, light with dark, black implies white, self implies other, suffering implies ecstasy, death implies life. We can devise and apprehend something only in terms of what it is not. This is the cosmic binary code: Yin/Yang, True/False, Infinite/Finite, Masculine/Feminine, On/Off, Yes/No… There are really only two opposing forces at play: love as universal integrating force and fear as universal disintegrating force… Like in Conway’s Game of Life, information flows along the path of the least resistance influenced by the bigger motivator, either love factor or fear factor (or, rather, their sophisticated gradients like pleasure and pain): Go or No go. Love and its contrasting opposite fear is what makes us feel alive… Love is recognized self-similarity in the other, a fractal algorithm of the least resistance. And love, as the finest intelligence, is obviously an extreme form of collaboration… collectively ascending to higher love, ‘becoming one planet of love.’ Love is the glue that holds the Universe together…” (Excerpt from ‘The Syntellect Hypothesis: Five Paradigms of the Mind’s Evolution’ by Alex Vikoulov, available now on Amazon.)


P.S. Extra for Digitalists: “In this quantum [computational] multiverse the essence of digital IS quantum entanglement. The totality of your digital reality is what your conscious mind implicitly or explicitly chooses to experience out of the infinite: a cocktail of love response and fear response.”

Smartphones aren’t just for selfies anymore. A novel cell phone imaging algorithm can now analyze assays that are typically evaluated with spectroscopy, a powerful technique used in scientific research. Researchers analyzed more than 10,000 images and found that their method consistently outperformed existing algorithms under a wide range of field operating conditions. The technique reduces the need for bulky equipment and increases the precision of quantitative results.

Accessible, connected, and computationally powerful, smartphones have emerged as capable evaluation tools for diagnosing medical conditions in point-of-care settings. Smartphones are also a viable solution for health care in the developing world because they allow untrained users to collect data and transmit it to medical professionals.

Although smartphone cameras today support a wide range of medical applications, such as microscopy and cytometric analysis, in practice smartphone imaging tests have limitations that severely restrict their utility. Overcoming these limitations to obtain quantitative results has typically required external hardware attached to the phone, imposing a design tradeoff between accessibility and accuracy.
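The text does not describe how the new algorithm works. As a generic illustration of how a phone photo can yield a quantitative result for a colorimetric assay, the Python sketch below converts the mean intensity of one color channel into an absorbance-like value, A = -log10(I / I0) relative to a blank, in the spirit of the Beer-Lambert law, then reads a concentration off a straight-line calibration fitted to standards of known concentration. The pixel format (lists of (R, G, B) tuples), the default green channel, and the function names are all assumptions; a simple approach like this ignores lighting changes, white balance, and camera nonlinearity, which are the kinds of field conditions the researchers’ method was tested against.

```python
import math
from statistics import mean


def absorbance(sample_pixels, blank_pixels, channel=1):
    """Absorbance-like value A = -log10(I / I0) from one color channel (index 1 = green)."""
    i = mean(p[channel] for p in sample_pixels)   # mean intensity over the test region
    i0 = mean(p[channel] for p in blank_pixels)   # mean intensity over a blank reference
    return -math.log10(i / i0)


def fit_calibration(concentrations, absorbances):
    """Least-squares line A = slope * c + intercept through standards of known concentration."""
    cx, ay = mean(concentrations), mean(absorbances)
    sxx = sum((c - cx) ** 2 for c in concentrations)
    sxy = sum((c - cx) * (a - ay) for c, a in zip(concentrations, absorbances))
    slope = sxy / sxx
    return slope, ay - slope * cx


def concentration(a, slope, intercept):
    """Invert the calibration line to estimate an unknown sample's concentration."""
    return (a - intercept) / slope
```

In use, one would photograph a few standards, fit the line once, and then apply `concentration(absorbance(sample, blank), slope, intercept)` to each new test image.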

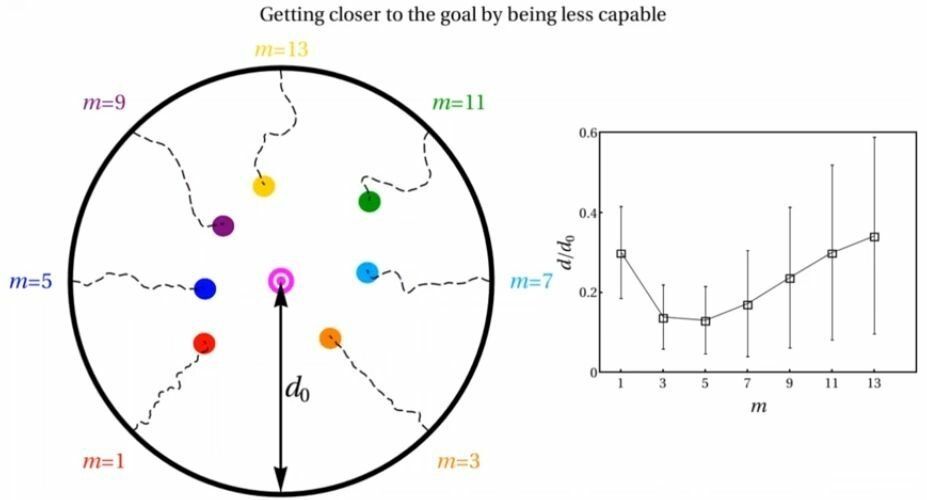

A team of researchers including Neil Johnson, a professor of physics at the George Washington University, has discovered that decentralized systems work better when the individual parts are less capable.

Dr. Johnson wanted to understand how systems with many moving parts can reach a desired target or goal without centralized control. The work tests a common theory that decentralized systems, those without a central brain, are more resilient against damage or errors.

This research has the potential to inform everything from how to structure a company effectively to how to build a better autonomous vehicle and optimize next-generation artificial intelligence algorithms, and it could even transform our understanding of evolution. The key lies in understanding how the “sweet spot” between decentralized and centralized systems varies with how clever the pieces are, Dr. Johnson said.

Forensics is on the cusp of a third revolution in its relatively young lifetime. The first revolution, under the brilliant but complicated mind of J. Edgar Hoover, brought science to the field and was largely responsible for the rise of criminal justice as we know it today. The second, half a century later, saw the introduction of computers and related technologies in mainstream forensics and created the subfield of digital forensics.

We are now hurtling headlong into the third revolution with the introduction of Artificial Intelligence (AI) – intelligence exhibited by machines that are trained to learn and solve problems. This is not just an extension of prior technologies. AI holds the potential to dramatically change the field in a variety of ways, from reducing bias in investigations to challenging what evidence is considered admissible.

AI is no longer science fiction. A 2016 survey conducted by the National Business Research Institute (NBRI) found that 38% of enterprises were already using AI technologies and that 62% would be using them by 2018. “The availability of large volumes of data, plus new algorithms and more computing power, are behind the recent success of deep learning, finally pulling AI out of its long winter,” writes Gil Press, a contributor to Forbes.com.