The equations suggest a deeper structure beneath what general relativity has allowed us to see—until now.

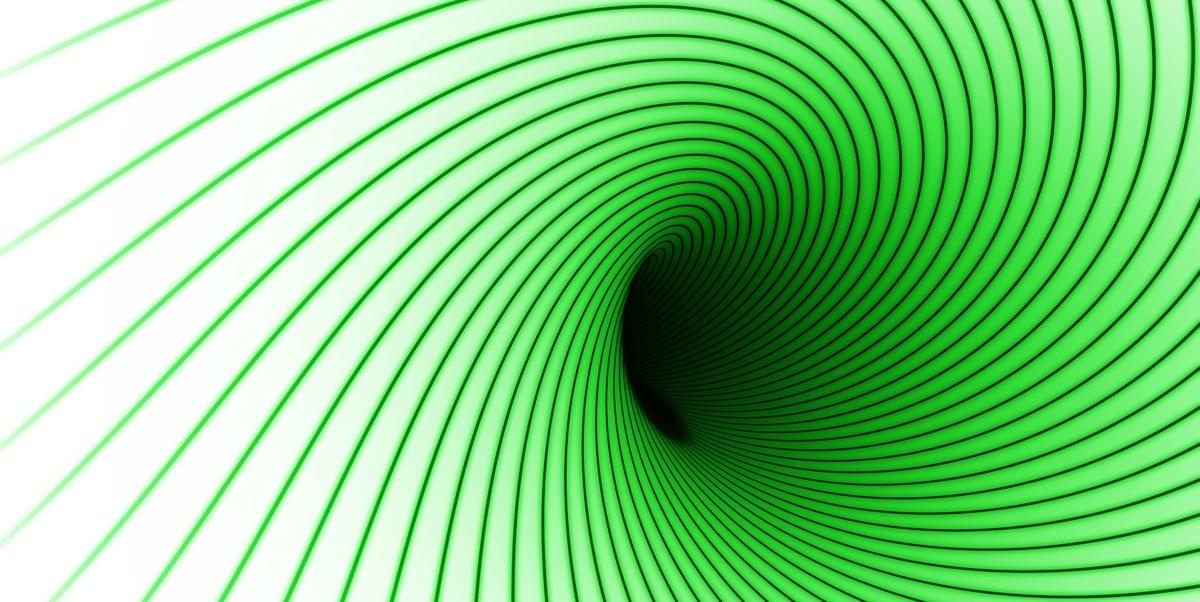

As the photons traveled along the waveguide and tunneled into the barrier, they also tunneled into the secondary waveguide, jumping back and forth between the two at a consistent rate. This steady hopping rate allowed the research team to calculate the photons' speed.

By combining this element of time with measurements of the photon’s rate of decay inside the barrier, the researchers were able to calculate dwell time, which was found to be finite.

The researchers write, “Our findings contribute to the ongoing tunneling time debate and can be viewed as a test of Bohmian trajectories in quantum mechanics. Regarding the latter, we find that the measured energy–speed relationship does not align with the particle dynamics postulated by the guiding equation in Bohmian mechanics.”

Quantum mechanics has a reputation that precedes it. Virtually everyone who has bumped up against the quantum realm, whether in a physics class, in the lab, or in popular science writing, is left thinking something like, “Now, that is really weird.” For some, this translates to weird and wonderful. For others it is more like weird and disturbing.

Chip Sebens, a professor of philosophy at Caltech who asks foundational questions about physics, is firmly in the latter camp. “Philosophers of physics generally get really frustrated when people just say, ‘OK, here’s quantum mechanics. It’s going to be weird. Don’t worry. You can make the right predictions with it. You don’t need to try to make too much sense out of it, just learn to use it.’ That kind of thing drives me up the wall,” Sebens says.

One particularly weird and disturbing area of physics for people like Sebens is quantum field theory. Quantum field theory goes beyond quantum mechanics, incorporating the special theory of relativity and allowing the number of particles to change over time (such as when an electron and positron annihilate each other and create two photons).

Physicists propose that certain aspects of quantum gravity can already be calculated without a full theory of quantum gravity. Basically, they work backwards from the fact that quantum gravity on the macro scale must conform to Einstein’s relativity theories. This approach remains effective until the calculation gets close to the small scale of a black hole singularity.

(See my comment below for a link to a Popular Mechanics article that discusses the scientific paper in an accessible manner.)

We study new black-hole solutions in quantum gravity. We use the Vilkovisky-DeWitt unique effective action to obtain quantum gravitational corrections to Einstein’s equations. In full analogy to previous work done for quadratic gravity, we find new black-hole–like solutions. We show that these new solutions exist close to the horizon and in the far-field limit.

Chinese researchers present new memristor-based technology for data processing and sorting in AI systems.

Memristors are electronic elements whose resistance changes depending on the electric charge that has flowed through them. This lets them both process and store information, in a way similar to the human brain.
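To make the idea of charge-dependent resistance concrete, here is a minimal Python sketch of an idealized memristor. It is not the device or the sorting algorithm from the research described here. The class name, the linear interpolation between `r_on` and `r_off`, and all numeric values are assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class ChargeControlledMemristor:
    """Toy model: resistance depends only on the net charge that has passed.

    All parameter values are illustrative assumptions, not measured data.
    """
    r_on: float = 100.0       # ohms, lowest resistance (assumed)
    r_off: float = 16_000.0   # ohms, highest resistance (assumed)
    q_max: float = 1e-4       # coulombs needed to switch fully (assumed)
    q: float = 0.0            # net charge that has flowed so far

    def resistance(self) -> float:
        # Interpolate linearly between r_off (no charge) and r_on (q_max).
        x = min(max(self.q / self.q_max, 0.0), 1.0)
        return self.r_off + (self.r_on - self.r_off) * x

    def apply_voltage(self, v: float, dt: float) -> float:
        """Apply voltage v (volts) for dt seconds; return the current (amps)."""
        i = v / self.resistance()
        # Accumulate charge, clamped to the physically allowed range.
        self.q = min(max(self.q + i * dt, 0.0), self.q_max)
        return i


if __name__ == "__main__":
    m = ChargeControlledMemristor()
    print("initial resistance:", m.resistance())
    for _ in range(100):
        m.apply_voltage(1.0, 1e-3)   # positive pulses lower the resistance
    print("after positive pulses:", m.resistance())
    m.apply_voltage(0.0, 1.0)        # no voltage: the state is remembered
    print("after idle period:", m.resistance())
```

The point of the sketch is the "memory" behavior: with no voltage applied, no charge flows, so the resistance stays where the earlier pulses left it. That retained state is what lets a single element both store a value and take part in computing with it.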

Chinese scientists have combined the use of memristors with an advanced data sorting algorithm to process large amounts of information more efficiently. According to them, this approach can help overcome performance limitations not only in computing, but also in artificial intelligence systems and equipment design.

D.P. Kroese, Z.I. Botev, T. Taimre, R. Vaisman. Data Science and Machine Learning: Mathematical and Statistical Methods, Chapman and Hall/CRC, Boca Raton, 2019.

The purpose of this book is to provide an accessible, yet comprehensive textbook intended for students interested in gaining a better understanding of the mathematics and statistics that underpin the rich variety of ideas and machine learning algorithms in data science.

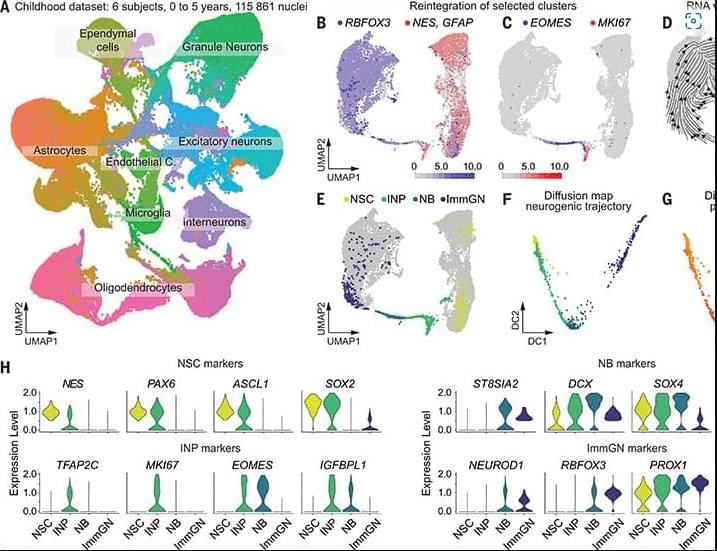

Continuous adult hippocampal neurogenesis is involved in memory formation and mood regulation but is challenging to study in humans. Difficulties finding proliferating progenitor cells called into question whether and how new neurons may be generated. We analyzed the human hippocampus from birth through adulthood by single-nucleus RNA sequencing. We identified all neural progenitor cell stages in early childhood. In adults, using antibodies against the proliferation marker Ki67 and machine learning algorithms, we found proliferating neural progenitor cells. Furthermore, transcriptomic data showed that neural progenitors were localized within the dentate gyrus. The results contribute to understanding neurogenesis in adult humans.

Understanding randomness is crucial in many fields. From computer science and engineering to cryptography and weather forecasting, studying and interpreting randomness helps us simulate real-world phenomena, design algorithms and predict outcomes in uncertain situations.

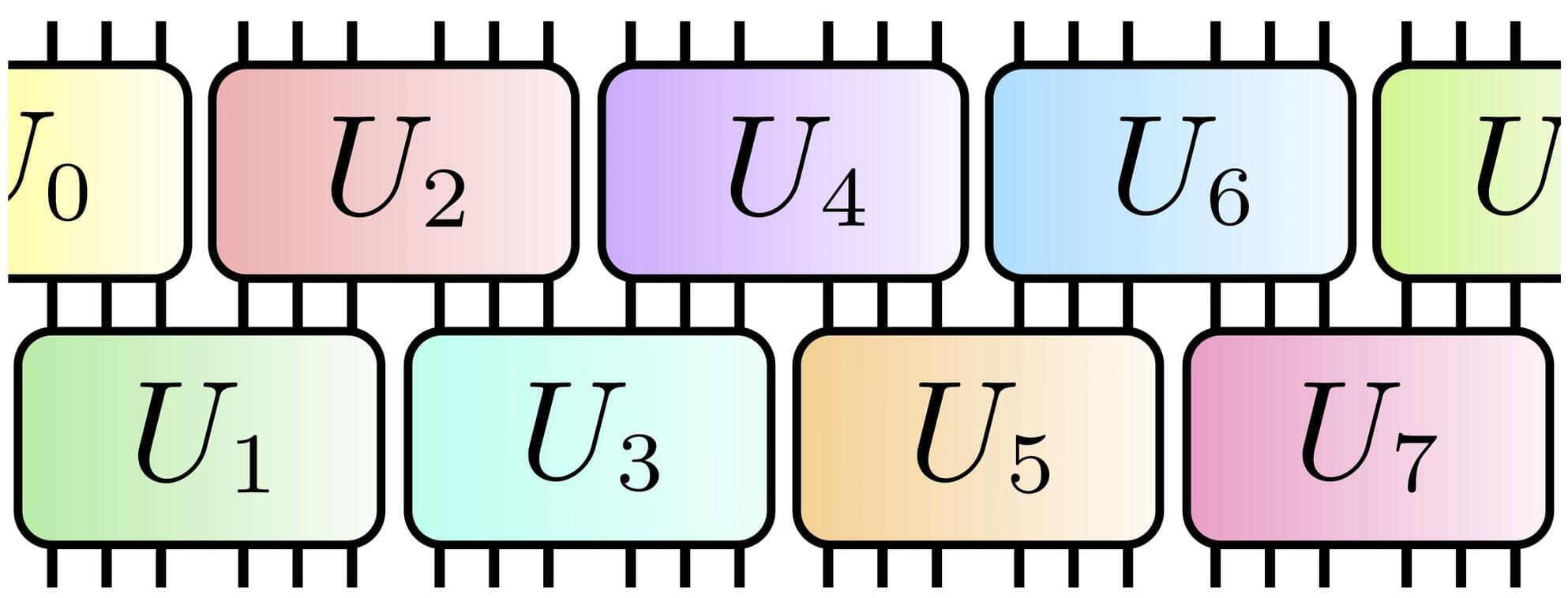

Randomness is also important in quantum computing, but generating it typically involves a large number of operations. However, Thomas Schuster and colleagues at the California Institute of Technology have demonstrated that quantum computers can produce randomness much more easily than previously thought.

And that’s good news because the research could pave the way for faster and more efficient quantum computers.