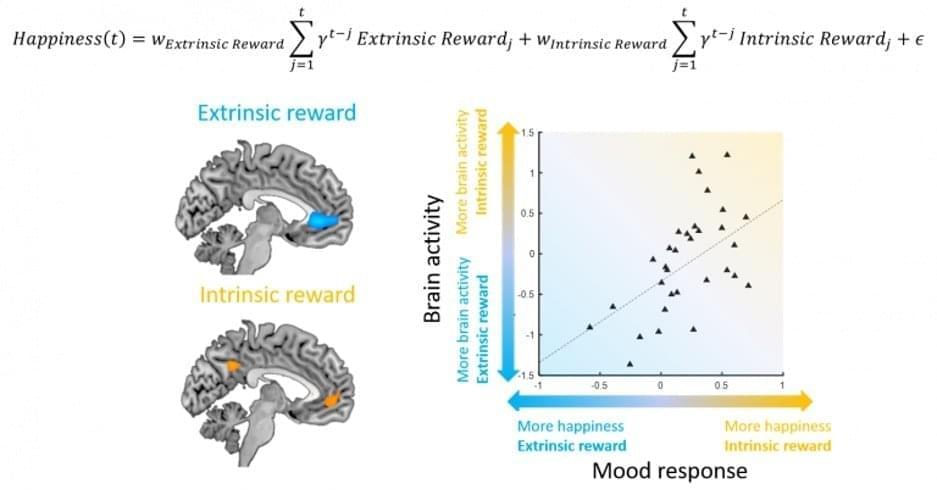

“Our mathematical equation lets us predict which individuals will have both more happiness and more brain activity for intrinsic compared to extrinsic rewards. The same approach can be used in principle to measure what people actually prefer without asking them explicitly, but simply by measuring their mood.”

Summary: A new mathematical equation predicts which individuals will experience more happiness and greater brain activity in response to intrinsic rather than extrinsic rewards. The approach could be used to infer personal preferences from mood, without asking the individual directly.

Source: UCL

A new study led by researchers at the Wellcome Centre for Human Neuroimaging shows that combining mathematical equations with continuous mood sampling may assess what people prefer better than asking them directly.

People can struggle to accurately assess how they feel about something, especially something they feel social pressure to enjoy, like waking up early for a yoga class.