A new method developed at the University of Warwick offers the first simple and predictive way to calculate how irregularly shaped nanoparticles—a dangerous class of airborne pollutant—move through the air.

Every day, we breathe in millions of microscopic particles, including soot, dust, pollen, microplastics, viruses, and synthetic nanoparticles. Some are small enough to slip deep into the lungs and even enter the bloodstream, contributing to conditions such as heart disease, stroke, and cancer.

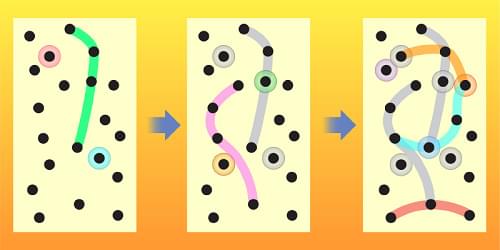

Most of these airborne particles are irregularly shaped. Yet the mathematical models used to predict how these particles behave typically assume they are perfect spheres, simply because the equations are easier to solve. This simplification makes it difficult to monitor or predict the movement of real-world, non-spherical particles, which are often the more hazardous ones.