If you’re a programmer looking to generate code for your projects at no cost while saving time and effort, explore these free AI code-generation tools.

Generative AI and other easy-to-use software tools can help employees with no coding background become adept programmers, or what the authors call citizen developers. By simply describing what they want in a prompt, citizen developers can collaborate with these tools to build entire applications—a process that until recently would have required advanced programming fluency.

Information technology has historically involved builders (IT professionals) and users (all other employees), with users being relatively powerless operators of the technology. That way of working often means IT professionals struggle to meet demand in a timely fashion, and communication problems arise among technical experts, business leaders, and application users.

Citizen development raises a critical question about the ultimate fate of IT organizations. How will they facilitate and safeguard the process without placing too many obstacles in its path? To reject its benefits is impractical, but to manage it carelessly may be worse. In this article the authors share a road map for successfully introducing citizen development to your employees.

Are democratic societies ready for a future in which AI algorithmically assigns limited supplies of respirators or hospital beds during pandemics? Or one in which AI fuels an arms race between disinformation creation and detection? Or sways court decisions with amicus briefs written to mimic the rhetorical and argumentative styles of Supreme Court justices?

Decades of research show that most democratic societies struggle to hold nuanced debates about new technologies. These discussions need to be informed not only by the best available science but also by the numerous ethical, regulatory and social considerations surrounding their use. Difficult dilemmas posed by artificial intelligence are already…

Even AI experts are uneasy about how unprepared societies are for moving forward with the technology in a responsible fashion. We study the public and political aspects of emerging science. In 2022, our research group at the University of Wisconsin-Madison interviewed almost 2,200 researchers who had published on the topic of AI. Nine in 10 (90.3%) predicted that there will be unintended consequences of AI applications, and three in four (75.9%) did not think that society is prepared for the potential effects of AI applications.

Who gets a say on AI?

Industry leaders, policymakers and academics have been slow to adjust to the rapid onset of powerful AI technologies. In 2017, researchers and scholars met in Pacific Grove for another small expert-only meeting, this time to outline principles for future AI research. Senator Chuck Schumer plans to hold the first of a series of AI Insight Forums on Sept. 13, 2023, to help Beltway policymakers think through AI risks with tech leaders like Meta’s Mark Zuckerberg and X’s Elon Musk.

A team of scientists from Ames National Laboratory has developed a new machine learning model for discovering critical-element-free permanent magnet materials. The model predicts the Curie temperature of new material combinations. It is an important first step in using artificial intelligence to predict new permanent magnet materials. This model adds to the team’s recently developed capability for discovering thermodynamically stable rare earth materials. The work is published in Chemistry of Materials.

High performance magnets are essential for technologies such as wind energy, data storage, electric vehicles, and magnetic refrigeration. These magnets contain critical materials such as cobalt and rare earth elements like neodymium and dysprosium. These materials are in high demand but have limited availability. This situation is motivating researchers to find ways to design new magnetic materials with reduced critical materials.

Machine learning (ML) is a form of artificial intelligence. It is driven by computer algorithms that use data and trial and error to continually improve their predictions. The team used experimental data on Curie temperatures and theoretical modeling to train the ML algorithm. Curie temperature is the maximum temperature at which a material maintains its magnetism.
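As a rough illustration of what "improving predictions by trial and error" means, here is a minimal sketch of gradient descent fitting a straight line. It is not the Ames team's model: the single input feature, the target values, and the function names `fit_line` and `mean_squared_error` are all assumptions made up for this example.

```python
# Illustrative sketch only. The numbers are synthetic and are NOT
# Curie temperatures or any data from the Ames study.

def mean_squared_error(xs, ys, w, b):
    """Average squared gap between predictions w*x + b and targets."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ w*x + b by repeatedly nudging w and b to reduce the error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Errors of the current guess on every training example.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradient of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Step against the gradient: the "trial and error" update.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical input feature and made-up targets that follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

error_before = mean_squared_error(xs, ys, 0.0, 0.0)
w, b = fit_line(xs, ys)
error_after = mean_squared_error(xs, ys, w, b)
print(f"w={w:.3f}, b={b:.3f}, error {error_before:.2f} -> {error_after:.6f}")
```

A real materials model would use many features per compound and a more flexible learner, but the loop is the same idea: predict, measure the error, adjust, repeat.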

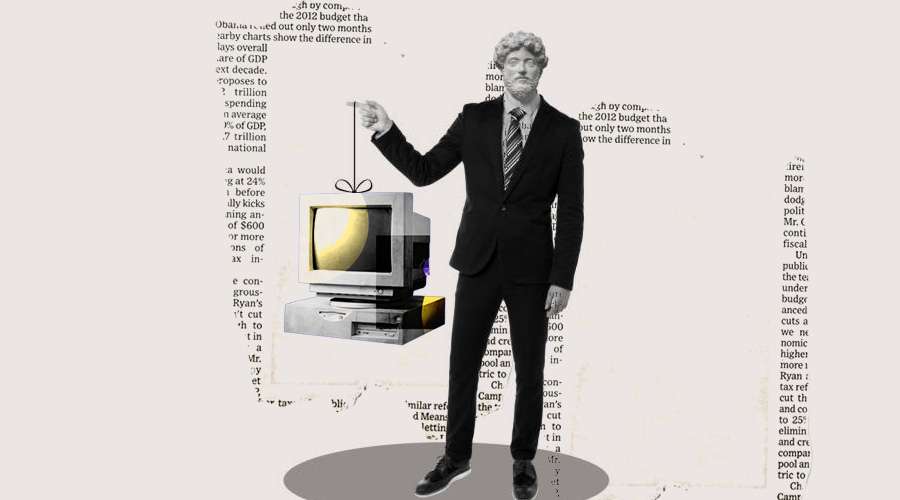

Using a standardized assessment, researchers in the UK compared the performance of a commercially available artificial intelligence (AI) algorithm with that of human readers of screening mammograms. Their findings were published in Radiology.

Mammographic screening does not detect every breast cancer. False-positive interpretations can result in women without cancer undergoing unnecessary imaging and biopsy. To improve the sensitivity and specificity of screening mammography, one solution is to have two readers interpret every mammogram.

According to the researchers, double reading increases cancer detection rates by 6 to 15% and keeps recall rates low. However, this strategy is labor-intensive and difficult to achieve during reader shortages.
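To make the terms sensitivity, specificity, and recall rate concrete, the sketch below computes them from the four outcome counts of a screening round. The counts are hypothetical and chosen only for illustration; they are not results from the Radiology study, and the function names are assumptions of this example.

```python
# Illustrative sketch only. All counts below are hypothetical and are
# NOT taken from the study described in the text.

def sensitivity(true_pos, false_neg):
    """Share of actual cancers that the reader flagged."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg, false_pos):
    """Share of cancer-free exams that the reader correctly cleared."""
    return true_neg / (true_neg + false_pos)


def recall_rate(true_pos, false_pos, total_exams):
    """Share of all screened women called back for further assessment."""
    return (true_pos + false_pos) / total_exams


# Hypothetical round: 1,000 mammograms, 10 of them with cancer.
tp, fn = 8, 2      # cancers flagged / cancers missed
fp, tn = 50, 940   # healthy women recalled / healthy women cleared
total = tp + fn + fp + tn

print(f"sensitivity: {sensitivity(tp, fn):.3f}")
print(f"specificity: {specificity(tn, fp):.3f}")
print(f"recall rate: {recall_rate(tp, fp, total):.3f}")
```

In this framing, a missed cancer lowers sensitivity, while a false-positive interpretation lowers specificity and raises the recall rate.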

Please see my new FORBES article:

Thanks, and please follow me on LinkedIn for more tech and cybersecurity insights.

More remarkably, the advent of artificial intelligence (AI) and machine learning-based computers in the next century may alter how we relate to ourselves.

The digital ecosystem’s networked computer components, which are made possible by machine learning and artificial intelligence, will have a significant impact on practically every sector of the economy. These integrated AI and computing capabilities could pave the way for new frontiers in fields as diverse as genetic engineering, augmented reality, robotics, renewable energy, big data, and more.

Three important verticals in this digital transformation are already being impacted by AI: 1) Healthcare, 2) Cybersecurity, and 3) Communications.

Artificial intelligence (AI) has been helping humans in IT security operations since the 2010s, analyzing massive amounts of data quickly to detect the signals of malicious behavior. With enterprise cloud environments producing terabytes of data to be analyzed, threat detection at the cloud scale depends on AI. But can that AI be trusted? Or will hidden bias lead to missed threats and data breaches?

Bias can create risks in AI systems used for cloud security. There are steps humans can take to mitigate this hidden threat, but first, it’s helpful to understand what types of bias exist and where they come from.

Today marks nine months since ChatGPT was released, and six weeks since we announced our AI Start seed fund. Based on our conversations with scores of inception and early-stage AI founders, and hundreds of leading CXOs (chief experience officers), I can attest that we are definitely in exuberant times.

In the span of less than a year, AI investments have become de rigueur in any portfolio, new private company unicorns are being created every week, and the idea that AI will drive a stock market rebound is taking root. People outside of tech are becoming familiar with new vocabulary.

Large language models. ChatGPT. Deep-learning algorithms. Neural networks. Reasoning engines. Inference. Prompt engineering. CoPilots. Leading strategists and thinkers are sharing their views on how AI will transform business, how it will unlock potential, and how it will contribute to human flourishing.

But most deep learning models are loosely based on the brain’s inner workings. AI agents are increasingly endowed with human-like decision-making algorithms. The idea that machine intelligence could become sentient one day no longer seems like science fiction.

How could we tell if machine brains one day gained sentience? The answer may be based on our own brains.

A preprint paper authored by 19 neuroscientists, philosophers, and computer scientists, including Dr. Robert Long from the Center for AI Safety and Dr. Yoshua Bengio from the University of Montreal, argues that the neurobiology of consciousness may be our best bet. Rather than simply studying an AI agent’s behavior or responses—for example, during a chat—matching its responses to theories of human consciousness could provide a more objective ruler.