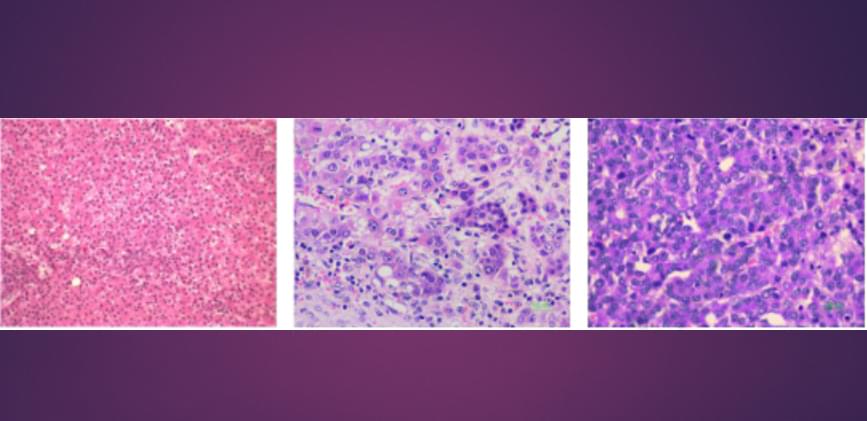

The findings support a diagnostic algorithm to identify the cancer subtype and guide specialized treatment.

The new book *Minding the Brain* from Discovery Institute Press is an anthology of essays by 25 renowned philosophers, scientists, and mathematicians who seek to address that question. Materialism shouldn’t be the only option for how we think about ourselves or the universe at large. Contributor Angus Menuge, a philosopher from Concordia University Wisconsin, writes:

Neuroscience in particular has implicitly dualist commitments, because the correlation of brain states with mental states would be a waste of time if we did not have independent evidence that these mental states existed. It would make no sense, for example, to investigate the neural correlates of pain if we did not have independent evidence of the existence of pain from the subjective experience of what it is like to be in pain. This evidence, though, is not scientific evidence: it depends on introspection (the self becomes aware of its own thoughts and experiences), which again assumes the existence of mental subjects. Further, Richard Swinburne has argued that scientific attempts to show that mental states are epiphenomenal are self-refuting, since they require that mental states reliably cause our reports of being in those states. The idea, therefore, that science has somehow shown the irrelevance of the mind to explaining behavior is seriously confused.

The AI optimists can’t get away from the problem of consciousness. Nor can they ignore the unique capacity of human beings to reflect back on themselves and ask questions that are peripheral to their survival needs. Functions like that can’t be defined algorithmically or by a materialistic conception of the human person. To counter the idea that computers can be conscious, we must cultivate an understanding of what it means to be human. Then maybe all the technology humans create will find a more modest, realistic place in our lives.

At HLTH, Mayo Clinic Platform President John Halamka gave a window into how his health system is mitigating generative AI risks. Some of the measures Mayo is taking include running analyses on how well algorithms perform across various subgroups and training models only on internal de-identified data.
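Halamka did not say how Mayo runs its subgroup analyses. The sketch below shows one common form of the general idea and assumes a classifier scored by simple accuracy. It groups predictions by a subgroup label, reports accuracy for each group, and reports the largest gap between groups. The function names, subgroup labels, and toy records are hypothetical illustrations. They are not Mayo Clinic's tooling or data.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Return {subgroup: accuracy} for (subgroup, y_true, y_pred) triples.

    Hypothetical helper; accuracy is an assumed metric chosen for simplicity.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        if y_true == y_pred:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_gap(scores):
    """Largest accuracy difference between any two subgroups."""
    return max(scores.values()) - min(scores.values())


if __name__ == "__main__":
    # Invented toy data: (subgroup label, true label, model prediction).
    records = [
        ("age<65", 1, 1), ("age<65", 0, 0), ("age<65", 1, 1), ("age<65", 0, 1),
        ("age>=65", 1, 0), ("age>=65", 0, 0), ("age>=65", 1, 1), ("age>=65", 1, 0),
    ]
    scores = accuracy_by_subgroup(records)
    print(scores, max_gap(scores))
```

A large gap flags subgroups where a model may underperform. A fuller audit would also compare metrics such as sensitivity and specificity, not accuracy alone.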

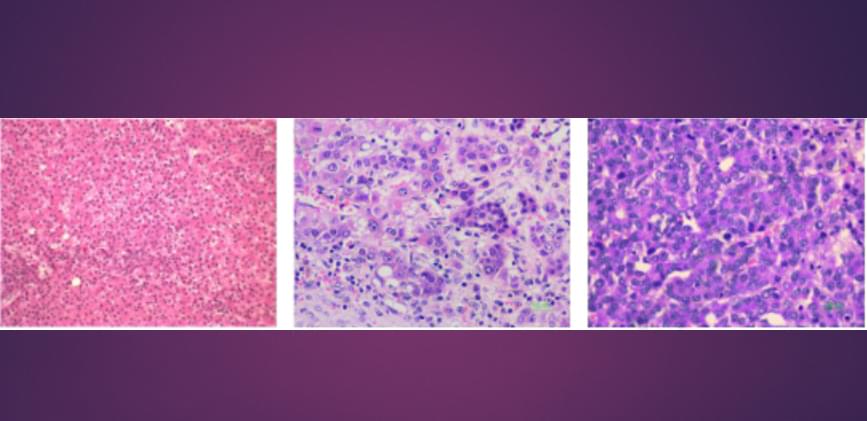

Computer vision algorithms have become increasingly advanced over the past decades, enabling the development of sophisticated technologies to monitor specific environments, detect objects of interest in video footage and uncover suspicious activities in CCTV recordings. Some of these algorithms are specifically designed to detect and isolate moving objects or people of interest in a video, a task known as moving target segmentation.

While some conventional algorithms for moving target segmentation have achieved promising results, most of them perform poorly in real time (i.e., when analyzing videos that are being captured live rather than pre-recorded). Some research teams have therefore been trying to tackle this problem with alternative types of algorithms, such as quantum algorithms.

Researchers at Nanjing University of Information Science and Technology and Southeast University in China recently developed a new quantum algorithm for the segmentation of moving targets in grayscale videos. This algorithm, published in Advanced Quantum Technologies, was found to outperform classical approaches in tasks that involve analyzing video footage in real time.
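The article does not describe how the quantum algorithm works internally. For contrast, here is a sketch of one of the simplest classical approaches to moving target segmentation in grayscale video, called frame differencing. Pixels whose intensity changes by more than a threshold between consecutive frames are marked as moving. This is a swapped-in classical baseline, not the Nanjing/Southeast University method, and the threshold of 25 is an arbitrary assumption.

```python
import numpy as np


def segment_moving_pixels(prev_frame, curr_frame, threshold=25):
    """Classical frame differencing (not the quantum algorithm from the paper).

    Returns a boolean mask marking pixels whose grayscale intensity changed
    by more than `threshold` between two consecutive frames.
    The default threshold of 25 is an arbitrary, assumed value.
    """
    # Cast to a signed type so subtracting uint8 frames cannot wrap around.
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold


if __name__ == "__main__":
    # Hypothetical 5x5 frames: a bright 2x2 object shifts one pixel to the right.
    prev = np.zeros((5, 5), dtype=np.uint8)
    curr = np.zeros((5, 5), dtype=np.uint8)
    prev[1:3, 1:3] = 200
    curr[1:3, 2:4] = 200
    mask = segment_moving_pixels(prev, curr)
    print(mask.astype(int))
```

Only the pixels the object left and the pixels it entered are flagged. The overlapping column stays unchanged. The method is cheap per frame but sensitive to sensor noise and lighting changes.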

It is based on reinforcement learning (RL) algorithms to allow for quick robot movement.

Robotic dogs face a major hurdle in navigating crowded spaces autonomously. Robot navigation in crowds has applications in various fields, including shopping-mall service robots, transportation, and healthcare.

Developing new methods that let robots move through crowded spaces and around obstacles safely is therefore crucial for rapid and efficient navigation.
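The article does not say which reinforcement learning algorithm the robot uses. The sketch below is a toy illustration of the general RL idea that rests on several assumptions. It applies tabular Q-learning to a small grid in which `#` cells stand in for obstacles such as people in a crowd. Through trial and error, the agent learns which moves reach the goal quickly without collisions. The grid, reward values, and hyperparameters are all hypothetical. A real legged robot would likely need continuous states and much larger learned models.

```python
import random

# Hypothetical 4x4 map: "S" start, "G" goal, "#" obstacle (e.g. a pedestrian).
GRID = [
    "S..#",
    ".#..",
    "...#",
    "#..G",
]
START, GOAL = (0, 0), (3, 3)
ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action):
    """Move one cell; hitting a wall or obstacle keeps the agent in place with a penalty."""
    r, c = state
    dr, dc = ACTIONS[action]
    nr, nc = r + dr, c + dc
    if not (0 <= nr < len(GRID) and 0 <= nc < len(GRID[0])) or GRID[nr][nc] == "#":
        return state, -5.0, False  # assumed collision penalty
    if (nr, nc) == GOAL:
        return (nr, nc), 10.0, True  # assumed goal reward
    return (nr, nc), -1.0, False  # assumed per-step cost, rewards short paths


def best_action(values):
    """Index of the highest-valued action (ties go to the lowest index)."""
    return max(range(len(values)), key=lambda i: values[i])


def train(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration (hyperparameters are assumptions)."""
    rng = random.Random(seed)
    q = {}
    for _ in range(episodes):
        state = START
        for _ in range(100):  # cap episode length
            values = q.setdefault(state, [0.0] * len(ACTIONS))
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))  # explore
            else:
                action = best_action(values)  # exploit
            nxt, reward, done = step(state, action)
            future = 0.0 if done else gamma * max(q.setdefault(nxt, [0.0] * len(ACTIONS)))
            values[action] += alpha * (reward + future - values[action])
            state = nxt
            if done:
                break
    return q


def greedy_path(q, max_steps=20):
    """Follow the learned policy from START without exploration."""
    state, path = START, [START]
    for _ in range(max_steps):
        state, _, done = step(state, best_action(q.get(state, [0.0] * len(ACTIONS))))
        path.append(state)
        if done:
            break
    return path


if __name__ == "__main__":
    print(greedy_path(train()))
```

The negative per-step reward pushes the agent toward short routes, and the larger collision penalty discourages bumping into obstacles.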



“The CIA and other US intelligence agencies will soon have an AI chatbot similar to ChatGPT. The program, revealed on Tuesday by Bloomberg, will train on publicly available data and provide sources alongside its answers so agents can confirm their validity. The aim is for US spies to more easily sift through ever-growing troves of information, although the exact nature of what constitutes ‘public data’ could spark some thorny privacy issues.

“‘We’ve gone from newspapers and radio, to newspapers and television, to newspapers and cable television, to basic internet, to big data, and it just keeps going,’ Randy Nixon, the CIA’s director of Open Source Enterprise, said in an interview with Bloomberg. ‘We have to find the needles in the needle field.’ Nixon’s division plans to distribute the AI tool to US intelligence agencies ‘soon.’”

The CIA confirmed that it’s developing an AI chatbot for all 18 US intelligence agencies to quickly parse troves of ‘publicly available’ data.
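Bloomberg's report does not explain how the tool attaches sources to its answers. The hypothetical sketch below shows the general idea. It retrieves passages relevant to a question and returns each one together with its source identifier, so an analyst can check the original. Simple keyword overlap stands in for the far more capable retrieval a production system would use. The function names and documents are invented for illustration.

```python
import string


def tokenize(text):
    """Lowercase words with surrounding punctuation removed."""
    words = (w.strip(string.punctuation).lower() for w in text.split())
    return {w for w in words if w}


def retrieve_with_sources(query, documents, k=2):
    """Return up to k (source_id, passage) pairs ranked by word overlap with the query.

    Hypothetical helper: keyword overlap is a stand-in for real retrieval.
    """
    query_words = tokenize(query)
    scored = []
    for source_id, passage in documents.items():
        overlap = len(query_words & tokenize(passage))
        if overlap:
            scored.append((overlap, source_id, passage))
    # Highest overlap first; ties broken by source id so results are deterministic.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [(source_id, passage) for _, source_id, passage in scored[:k]]


if __name__ == "__main__":
    docs = {
        "doc-a": "Port traffic rose sharply in March.",
        "doc-b": "Weather in March was mild.",
        "doc-c": "Shipping traffic at the port declined in April.",
    }
    for source_id, passage in retrieve_with_sources("port traffic in March", docs):
        print(f"[{source_id}] {passage}")
```

Keeping the source identifier attached to every retrieved passage is what would let a reader trace an answer back to its origin.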

The researchers tested their algorithm on a replica of a US Army combat ground vehicle and found it was 99% effective in preventing a malicious attack.

Australian researchers have developed an artificial intelligence algorithm to detect and stop a cyberattack on a military robot in seconds.

The research was conducted by Professor Anthony Finn from the University of South Australia (UniSA) and Dr Fendy Santoso from Charles Sturt University in collaboration with the US Army Futures Command. They simulated a man-in-the-middle (MitM) attack on a GVT-BOT ground vehicle and trained its operating system to respond to it, according to the press release.

According to Professor Finn, an autonomous systems researcher at UniSA, the robot operating system (ROS) is prone to cyberattacks because it is highly networked. He explained that Industry 4.0, characterized by advances in robotics, automation, and the Internet of Things, requires robots to work together, with sensors, actuators, and controllers communicating and sharing information via cloud services. This, he added, makes such robots very vulnerable to cyberattacks. He also noted that computing power is increasing exponentially every few years, enabling researchers to develop and implement sophisticated AI algorithms that protect systems from digital threats.

Australian researchers have designed an algorithm that can intercept a man-in-the-middle (MitM) cyberattack on an unmanned military robot and shut it down in seconds.

In an experiment using deep learning neural networks, which are modeled on the behavior of the human brain, artificial intelligence experts from Charles Sturt University and the University of South Australia (UniSA) trained the robot’s operating system to learn the signature of a MitM eavesdropping cyberattack, in which attackers interrupt an existing conversation or data transfer.

The algorithm, tested in real time on a replica of a United States Army combat ground vehicle, was 99% successful in preventing a malicious attack, with a false-positive rate below 2%.
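The two reported figures correspond to standard detection metrics. This assumes that "99% successful" refers to the share of attacks detected. The detection rate is TP / (TP + FN), the fraction of actual attacks that are flagged. The false-positive rate is FP / (FP + TN), the fraction of benign traffic wrongly flagged. The press release does not give the underlying counts. The numbers in the usage example below are therefore hypothetical and chosen only to show how figures like 99% and under 2% arise.

```python
def detection_metrics(tp, fn, fp, tn):
    """Return (detection_rate, false_positive_rate) from confusion-matrix counts.

    tp: attacks correctly flagged       fn: attacks missed
    fp: benign traffic wrongly flagged  tn: benign traffic correctly passed
    """
    detection_rate = tp / (tp + fn)
    false_positive_rate = fp / (fp + tn)
    return detection_rate, false_positive_rate


if __name__ == "__main__":
    # Hypothetical counts for illustration only; not the study's data.
    dr, fpr = detection_metrics(tp=99, fn=1, fp=15, tn=985)
    print(f"detection rate {dr:.1%}, false-positive rate {fpr:.1%}")
```

Reporting both numbers matters. A detector that flags everything would catch 100% of attacks, but it would also have a 100% false-positive rate.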

Summary: To uncover how traumatic memories form, researchers used optical and machine-learning methods to decode the neuronal networks the brain engages when a trauma memory is created.

The team identified a neural population encoding fear memory, revealing the synchronous activation and crucial role of the dorsal part of the medial prefrontal cortex (dmPFC) in associative fear memory retrieval in mice.

New analytical approaches, including the ‘elastic net’ machine-learning algorithm, pinpointed specific neurons and their functional connectivity within the spatial and functional fear-memory neural network.
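The summary does not give the model's exact formulation. For reference, the standard elastic net objective for a linear model, in scikit-learn's parameterization, combines a least-squares fit with an L1 penalty and an L2 penalty on the coefficients $\beta$:

$$
\hat{\beta} = \arg\min_{\beta} \; \frac{1}{2n}\lVert y - X\beta \rVert_2^2 \;+\; \alpha\rho\,\lVert \beta \rVert_1 \;+\; \frac{\alpha(1-\rho)}{2}\,\lVert \beta \rVert_2^2
$$

In this equation:

- $n$ is the number of samples.
- $\alpha \ge 0$ sets the overall penalty strength.
- $\rho \in [0, 1]$ (scikit-learn's `l1_ratio`) mixes the two penalties.

The L1 term drives many coefficients to exactly zero, which is what lets the method single out a small subset of neurons. The L2 term tends to keep groups of correlated predictors together. The summary does not state whether the study used this regression form or a classification variant.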