I will try to live as long as possible.

Dr. Ezekiel Emanuel plans to reject life-extending medical care once he reaches 75. His reasoning is quite similar to the reason the Kaelons commit ritual suicide in Star Trek: The Next Generation. Does this make sense?

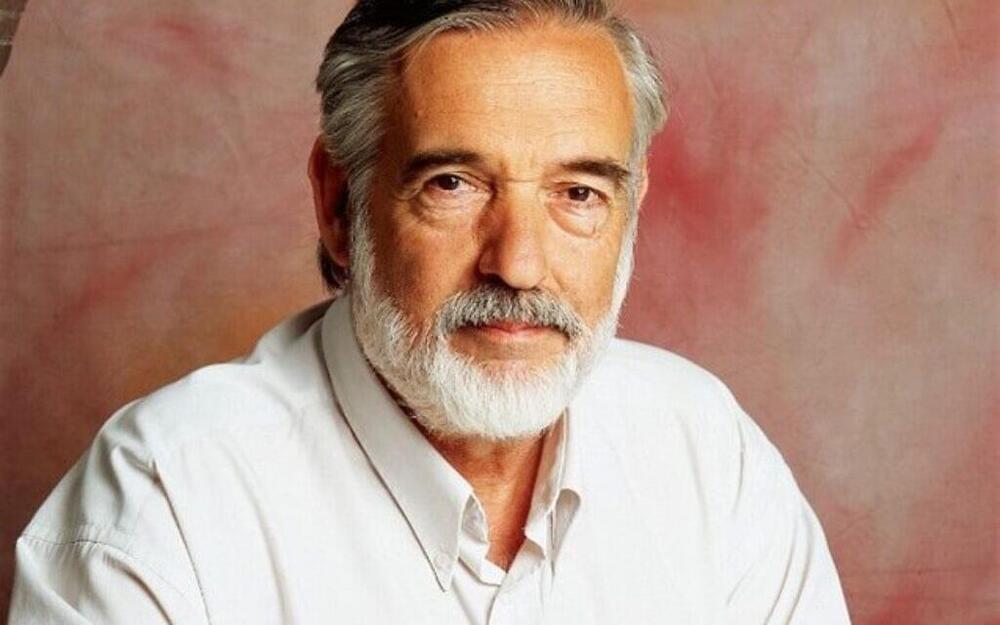

In this thought-provoking episode of Lifespan News, host Ryan O’Shea delves into the controversial topic of choosing when to die and the ethics of medical interventions that prolong life. Through the lens of a Star Trek: The Next Generation episode, and drawing parallels with Dr. Ezekiel Emanuel’s article in The Atlantic, “Why I Hope to Die at 75,” Ryan confronts the moral and societal implications of setting an arbitrary age at which to stop seeking medical treatment. As rejuvenation biotechnologies advance, is it still reasonable to hold such views? As we push the boundaries of science and healthcare, when should we draw the line? Join Ryan as he navigates these complex questions, and share your thoughts in the comments below. Don’t forget to subscribe for more!

Video Clips:

Leading US doctor says he won’t get treatment if he gets cancer after 75, CNN — https://www.youtube.com/watch?v=TgrO4rrrFgQ

How Long Do You Want to Live?, The Atlantic — https://youtu.be/fQBzY-aorFQ