The pandemic also helped by normalizing remote work.

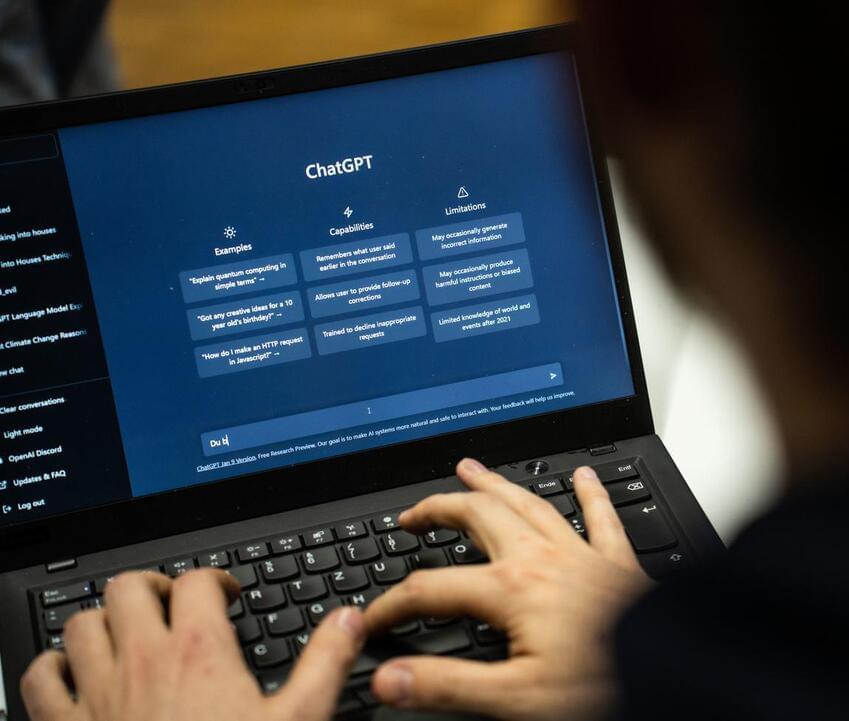

A new report by Vice.

“That’s the only reason I got my job this year,” one worker, referred to only as Ben, said of OpenAI’s tool.

Fulltimetraveller/iStock.

Artificial-intelligence tools can enable remote workers not just to hold more than one job, but to do those jobs with time to spare. Vice spoke with various workers, all of whom remained anonymous, who are holding down two to four full-time jobs with help from these tools, and they all agreed that it is an ideal way to increase one’s income.