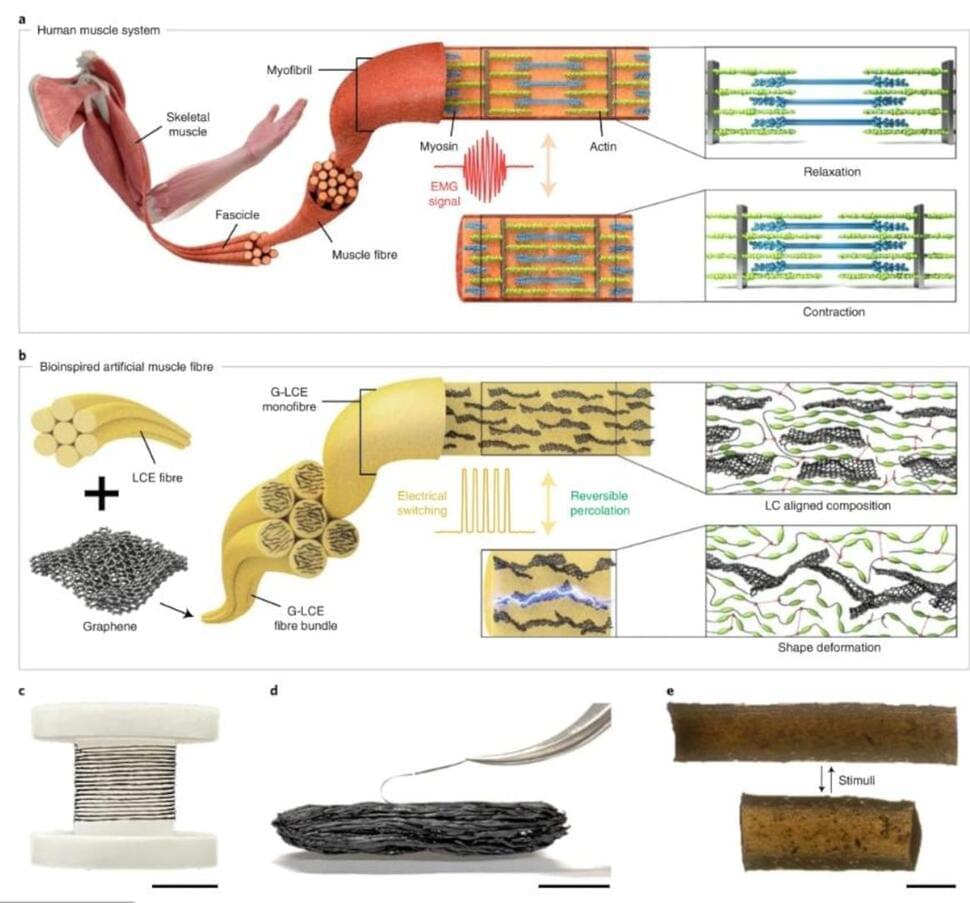

We’ve all seen cyborgs in Hollywood blockbusters. But it turns out these fictional beings aren’t so far-fetched. In fact, this program features a true-to-life cyborg who, at four months of age, was the youngest American to be outfitted with a myoelectric hand. And at one groundbreaking engineering facility, engineers are developing biotechnologies that can enhance high-tech devices like this even further by giving mechanical prosthetics something incredible: the physical sensation of touch!

Sign up to the Java Films Clubs for exclusive deals and discounts for amazing documentaries — find out first about FREE FULL docs: http://eepurl.com/hhNC69

Head to https://www.watchjavafilms.tv/ to check out our catalogue of documentaries available on Demand.

You can also find our docs on:

Amazon Prime Video: https://www.amazon.com/v/javafilms?tag=lifeboatfound-20

Vimeo On Demand: https://vimeo.com/javafilms/vod_pages

Like us on Facebook: https://www.facebook.com/JavaFilms/