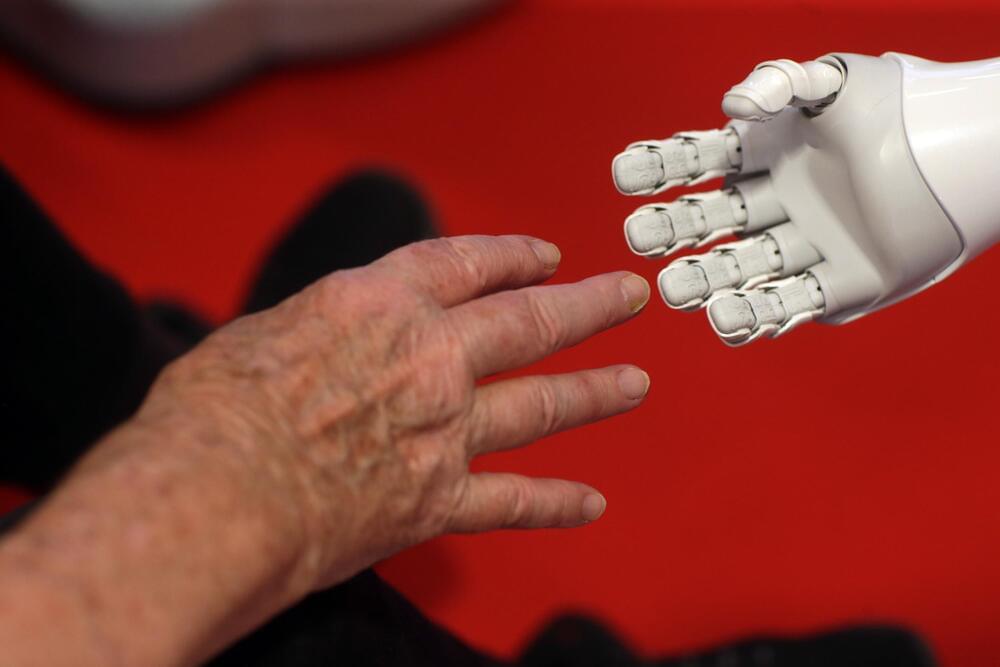

A Nature Communications paper reports an artificial sensory system that can recognize fine textures, such as twill, corduroy and wool, with a resolution similar to that of a human finger. The authors suggest that the findings may help improve the fine tactile sensing of robots and limb prostheses, and could eventually be applied to virtual reality.

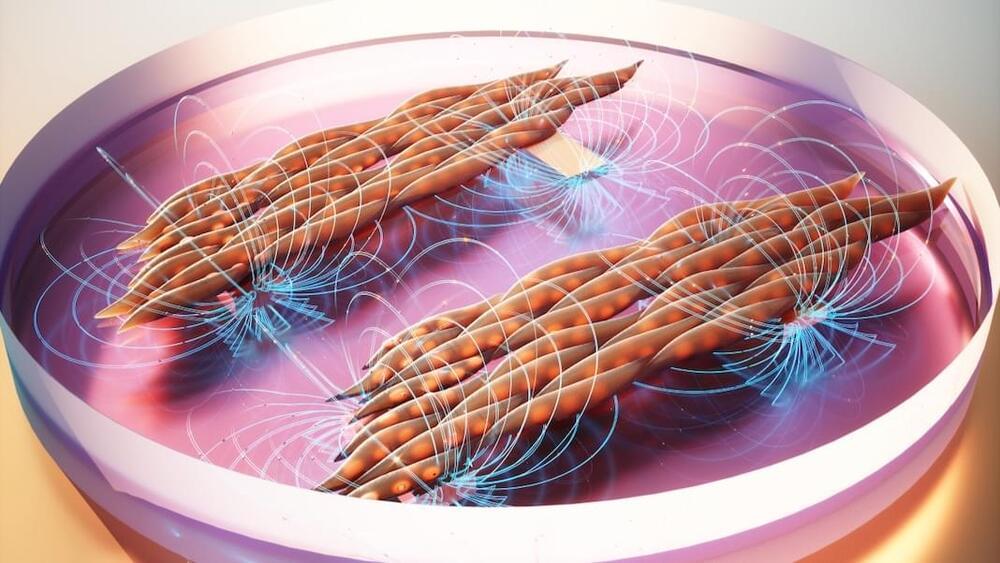

Humans can identify an object by gently sliding a finger across its surface, which captures both static pressure and high-frequency vibrations. Previous artificial tactile sensors designed to detect physical stimuli such as pressure have had limited ability to identify real-world objects by touch, or have relied on multiple sensors. Building a real-time artificial sensory system with high spatiotemporal resolution and sensitivity has remained challenging.

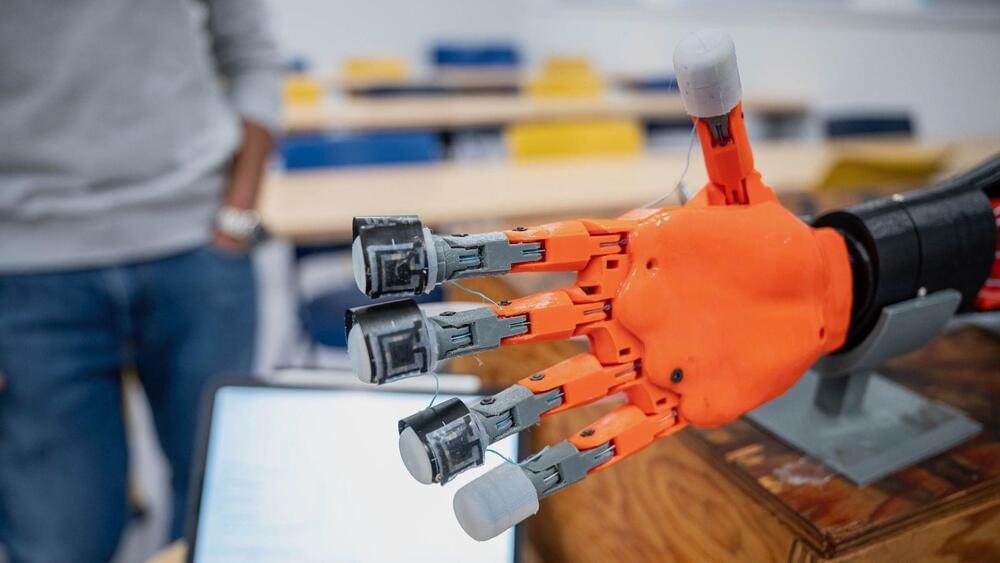

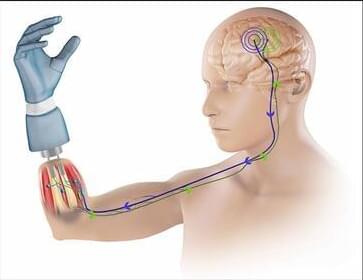

Chuan Fei Guo and colleagues present a flexible slip sensor that mimics the features of a human fingerprint, enabling the system to recognize small features of surface textures when the sensor touches or slides across a surface. The authors integrated the sensor onto a prosthetic hand and added machine learning to the system.