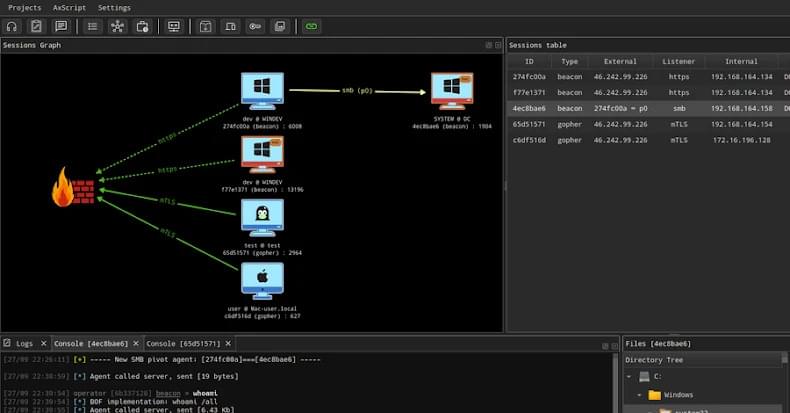

The activity targeted diplomatic organizations in Hungary, Belgium, Italy, and the Netherlands, as well as government agencies in Serbia, Arctic Wolf said in a technical report published Thursday.

“The attack chain begins with spear-phishing emails containing an embedded URL that is the first of several stages that lead to the delivery of malicious LNK files themed around European Commission meetings, NATO-related workshops, and multilateral diplomatic coordination events,” the cybersecurity company said.

The LNK files are designed to exploit ZDI-CAN-25373 to trigger a multi-stage attack chain that culminates in the deployment of the PlugX malware via DLL side-loading. PlugX is a remote access trojan that’s also referred to as Destroy RAT, Kaba, Korplug, SOGU, and TIGERPLUG.