New research shows that cells understand and execute directions correctly, and scientists may be able to take advantage of that.

By hijacking the DNA of a human cell, they showed it’s possible to program it like a simple computer.

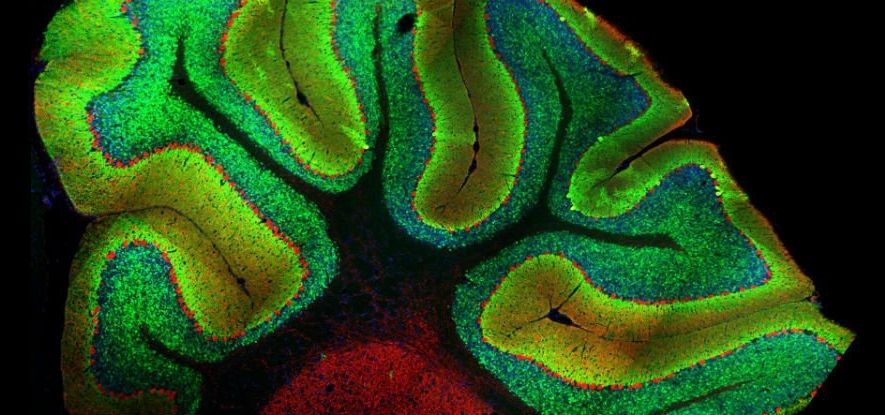

One of the best-known regions of the brain, the cerebellum accounts for just 10 percent of the organ’s total volume, but contains more than 50 percent of its neurons.

Despite all that processing power, it’s been assumed that the cerebellum functions largely outside the realm of conscious awareness, instead coordinating physical activities like standing and breathing. But now neuroscientists have discovered that it plays an important role in the reward response — one of the main drives that motivate and shape human behaviour.

Not only does this open up new research possibilities for the little region that has for centuries been primarily linked to motor skills and sensory input, but it suggests that the neurons that make up much of the cerebellum, called granule cells, are functioning in ways we never anticipated.

A webpage today is often the sum of many different components. A user’s home page on a social-networking site, for instance, might display the latest posts from the user’s friends; the associated images, links, and comments; notifications of pending messages and comments on the user’s own posts; a list of events; a list of topics currently driving online discussions; a list of games, some of which are flagged to indicate that it’s the user’s turn; and of course the all-important ads, which the site depends on for revenue.

With increasing frequency, each of those components is handled by a different program running on a different server in the website’s data center. That reduces processing time, but it exacerbates another problem: the equitable allocation of network bandwidth among programs.

Many websites aggregate all of a page’s components before shipping them to the user. So if just one program has been allocated too little bandwidth on the data center network, the rest of the page—and the user—could be stuck waiting for its component.
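To make the bottleneck concrete, here is a minimal sketch of the waiting problem described above. It is a toy model, not how any particular data center works: the component names, sizes, and bandwidth figures are invented for illustration, and `transfer_time` and `page_ready_time` are hypothetical helpers.

```python
# Toy model (all numbers are assumptions): each page component is produced by
# a separate program and must cross the data center network before the page
# can be aggregated and shipped to the user.

def transfer_time(size_mb, bandwidth_mbps):
    """Seconds needed to move size_mb megabytes at bandwidth_mbps megabits/s."""
    return size_mb * 8 / bandwidth_mbps

def page_ready_time(components):
    """An aggregated page is ready only when its slowest component arrives."""
    return max(transfer_time(size, bw) for size, bw in components.values())

# name -> (size in MB, allocated bandwidth in Mbit/s); illustrative values only
components = {
    "posts": (2.0, 800),
    "notifications": (0.1, 800),
    "ads": (1.0, 10),  # this program was allocated too little bandwidth
}

# The component that holds up the whole page.
slowest = max(components, key=lambda name: transfer_time(*components[name]))
total = page_ready_time(components)
print(f"page ready after {total:.3f} s, held up by '{slowest}'")
```

In this sketch the two well-provisioned components finish in a few hundredths of a second, yet the page as a whole waits on the single under-allocated one.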

Mushroom buildings, Jurassic Park and terraforming.

Did you ever hear about synthetic biology? No? Imagine that we could alter and produce DNA from scratch just like an engineer. Doesn’t it sound like one of the greatest interdisciplinary achievements in recent history?

Think about it: a biotechnologist does more or less the work of a programmer, but instead of using a computer language, they arrange the molecules embedded in every living cell. The outcome, if ever mastered, could reshape the world around us dramatically.

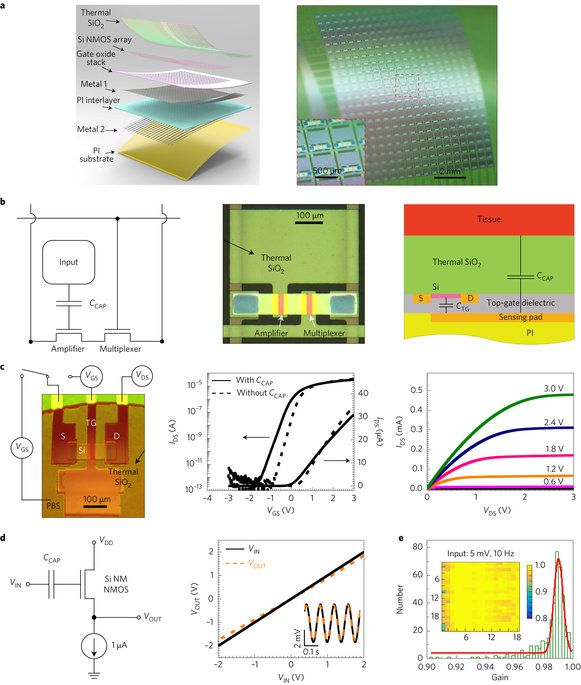

Advanced capabilities in electrical recording are essential for the treatment of heart-rhythm diseases. The most advanced technologies use flexible integrated electronics; however, the penetration of biological fluids into the underlying electronics and any ensuing electrochemical reactions pose significant safety risks. Here, we show that an ultrathin, leakage-free, biocompatible dielectric layer can completely seal an underlying array of flexible electronics while allowing for electrophysiological measurements through capacitive coupling between tissue and the electronics, without the need for direct metal contact. The resulting current-leakage levels and operational lifetimes are, respectively, four orders of magnitude smaller and between two and three orders of magnitude longer than those of other flexible-electronics technologies. Systematic electrophysiological studies with normal, paced and arrhythmic conditions in Langendorff hearts highlight the capabilities of the capacitive-coupling approach. These advances provide realistic pathways towards the broad applicability of biocompatible, flexible electronic implants.

Elon Musk wants to connect your brain to a computer.

This is big: Is the Singularity a step closer?

Tesla Inc founder and Chief Executive Elon Musk has launched a company called Neuralink Corp through which computers could merge with human brains, the Wall Street Journal reported, citing people familiar with the matter.

Neuralink is pursuing what Musk calls the “neural lace” technology, implanting tiny brain electrodes that may one day upload and download thoughts, the Journal reported. (on.wsj.com/2naUATf)

Musk has not made an official announcement, but Neuralink was registered in California as a “medical research” company last July, and he plans on funding the company mostly by himself, a person briefed on the plans told the Journal.

To put it mildly, sequencing and building a genome from scratch isn’t cheap. It’s sometimes affordable for human genomes, but it’s often prohibitively expensive (hundreds of thousands of dollars) whenever you’re charting new territory — say, a specific person or an unfamiliar species. A chromosome can have hundreds of millions of genetic base pairs, after all. Scientists may have a way to make it affordable across the board, however. They’ve developed a new method, 3D genome assembly, that can sequence and build genomes from the ground up for less than $10,000.

Where earlier approaches saw researchers using computers to stick small pieces of genetic code together, the new technique takes advantage of folding maps (which show how a 6.5-foot-long genome can cram into a cell’s nucleus) to quickly build out a sequence. As you only need short reads of DNA to make this happen, the cost is much lower. You also don’t need to know much about your sample organism going in.

As an example of what’s possible, the team completely assembled the three chromosomes for the Aedes aegypti mosquito for the first time. More complex organisms would require more work, of course, but the dramatically lower cost makes that more practical than ever. Provided the approach finds widespread use, it could be incredibly valuable for both biology and medicine.

How small is the world’s smallest hard drive? Smaller than you’d believe.