Category: computing – Page 894

Elephants Never Forget!

It’s a common saying that elephants never forget, but these magnificent animals are more than giant walking hard drives. The more we learn about elephants, the more it appears that their impressive memory is only one aspect of an incredible intelligence that makes them some of the most social, creative, and benevolent creatures on Earth. Alex Gendler takes us into the incredible, unforgettable mind of an elephant.

A Solution of the P versus NP Problem

Abstract: Berg and Ulfberg and Amano and Maruoka have used CNF-DNF-approximators to prove exponential lower bounds for the monotone network complexity of the clique function and of Andreev’s function. We show that these approximators can be used to prove the same lower bound for their non-monotone network complexity. This implies that P is not equal to NP.

Futurist Gray Scott: We Can’t Ignore Our Psychological Future

Why are we often so wrong about how the future and future technology will reshape society and our personal lives? In this new video from the Galactic Public Archives, Futurist Gray Scott tells us why he thinks it is important to look at all aspects of the future.

Follow us on social media:

Twitter / Facebook / Instagram

Follow Gray Scott:

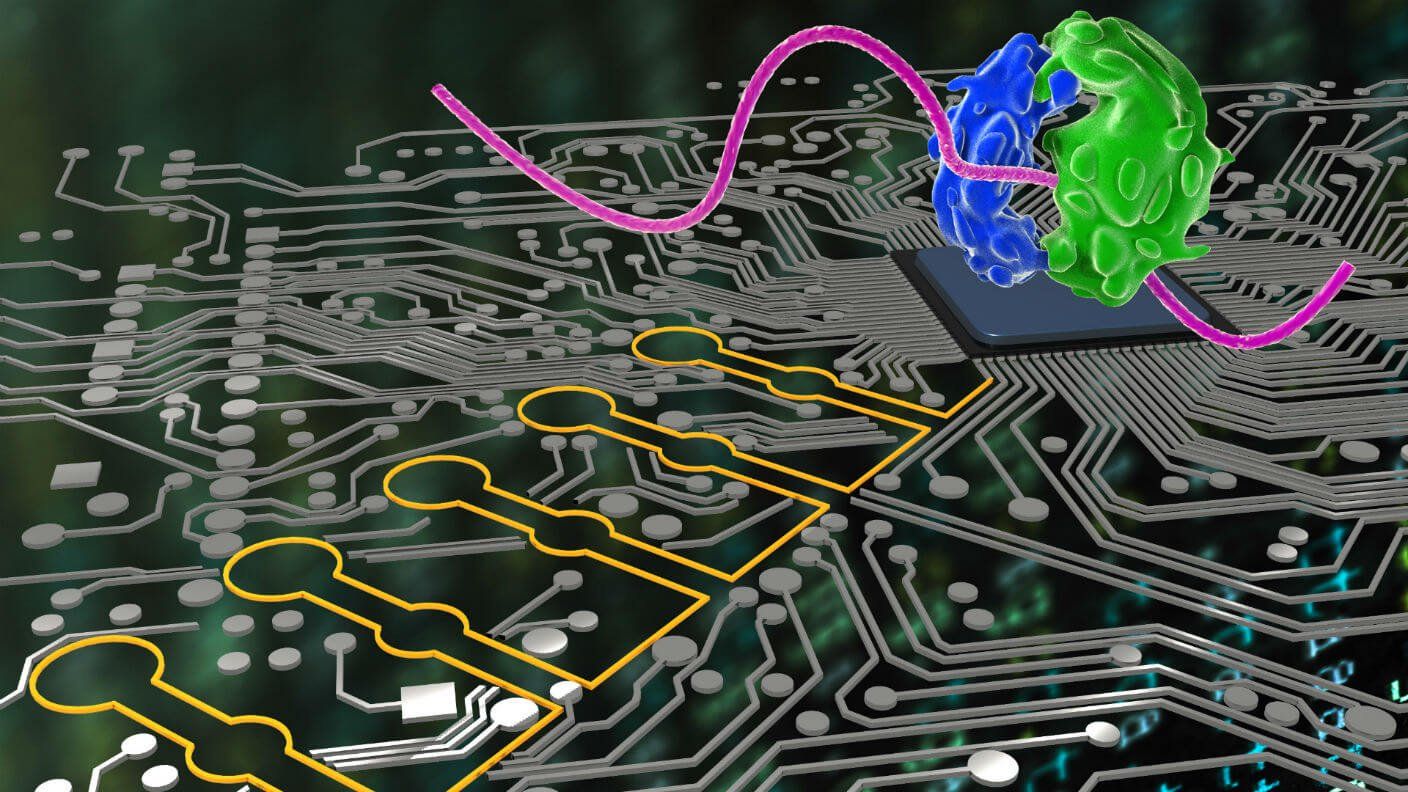

The First Ever Blueprint for a Large Scale Quantum Computer has been Unveiled

An international team, led by a scientist from the University of Sussex, have today unveiled the first practical blueprint for how to build a large-scale quantum computer, a machine that would be the most powerful computer on Earth.

This huge leap forward towards creating a universal quantum computer is published today (1 February 2017) in the influential journal Science Advances. It has long been known that such a computer would revolutionise industry, science and commerce on a scale similar to that of the invention of ordinary computers. But this new work features the actual industrial blueprint to construct such a large-scale machine, more powerful in solving certain problems than any computer ever constructed before.

Once built, the computer’s capabilities mean it would have the potential to answer many questions in science; create new, lifesaving medicines; solve the most mind-boggling scientific problems; unravel the yet unknown mysteries of the furthest reaches of deepest space; and solve some problems that an ordinary computer would take billions of years to compute.

Here Are Some New Ideas for Fighting Botnets

It’s a tricky problem, so solutions have to be carefully thought out.

Federal agencies face a thorny path as they try to step up the government’s fight against armies of infected computers and connected devices known as botnets, responses to a government information request reveal.

Industry, academic and think tank commenters all agreed more should be done to combat the zombie computer armies that digital ne’er-do-wells frequently hire to force adversaries offline.