New computer program uses brain activity to draw images of airplanes, leopards, and stained-glass windows.

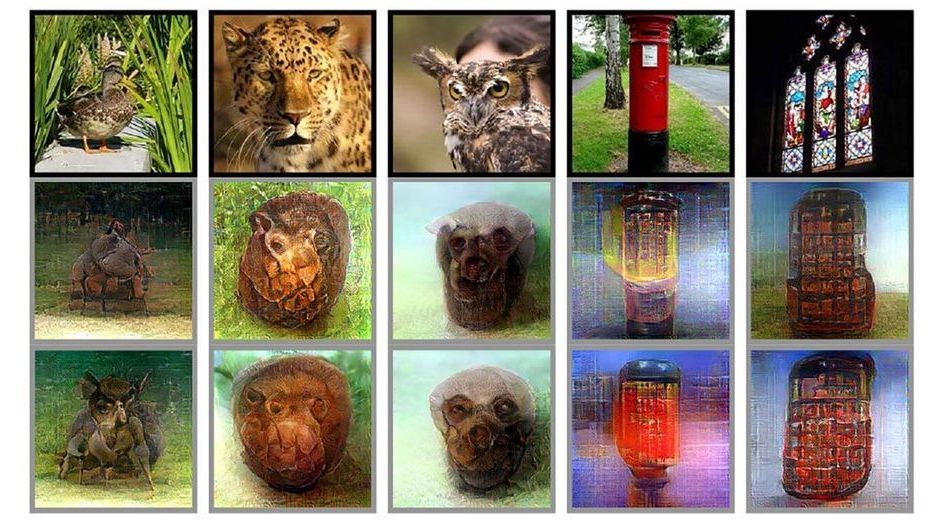

A new method allows the quantum state of atomic “qubits”—the basic unit of information in quantum computers—to be measured with twenty times less error than was previously possible, without losing any atoms. Accurately measuring qubit states, which are analogous to the one or zero states of bits in traditional computing, is a vital step in the development of quantum computers. A paper describing the method by researchers at Penn State appears March 25, 2019 in the journal Nature Physics.

“We are working to develop a quantum computer that uses a three-dimensional array of laser-cooled and trapped cesium atoms as qubits,” said David Weiss, professor of physics at Penn State and the leader of the research team. “Because of how quantum mechanics works, the atomic qubits can exist in a ‘superposition’ of two states, which means they can be, in a sense, in both states simultaneously. To read out the result of a quantum computation, it is necessary to perform a measurement on each atom. Each measurement finds each atom in only one of its two possible states. The relative probability of the two results depends on the superposition state before the measurement.”

To measure qubit states, the team first uses lasers to cool and trap about 160 atoms in a three-dimensional lattice with X, Y, and Z axes. Initially, the lasers trap all of the atoms identically, regardless of their quantum state. The researchers then rotate the polarization of one of the laser beams that creates the X lattice, which spatially shifts atoms in one qubit state to the left and atoms in the other qubit state to the right. If an atom starts in a superposition of the two qubit states, it ends up in a superposition of having moved to the left and having moved to the right. They then switch to an X lattice with a smaller lattice spacing, which tightly traps the atoms in their new superposition of shifted positions. When light is then scattered from each atom to observe where it is, each atom is either found shifted left or shifted right, with a probability that depends on its initial state.
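The readout statistics described above can be illustrated with a short simulation. This is a minimal sketch, not the Penn State team's method: it assumes an idealized, error-free measurement, and the function name `measure_qubits` and the amplitudes `alpha` and `beta` are hypothetical names chosen for illustration. Each simulated atom is found shifted left with probability |alpha|^2 and shifted right with probability |beta|^2.

```python
import math
import random


def measure_qubits(alpha, beta, n_atoms, seed=0):
    """Simulate reading out n_atoms qubits prepared in alpha|0> + beta|1>.

    Hypothetical helper: each atom is found shifted "left" (state 0) with
    probability |alpha|^2, otherwise "right" (state 1). Real measurements
    also have errors and atom loss, which this sketch ignores.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm, 1.0, rel_tol=1e-9):
        raise ValueError("amplitudes must be normalized")
    p_left = abs(alpha) ** 2
    rng = random.Random(seed)  # seeded so repeated runs give the same sample
    return ["left" if rng.random() < p_left else "right" for _ in range(n_atoms)]


# An equal superposition measured on about 160 atoms, as in the article.
outcomes = measure_qubits(math.sqrt(0.5), math.sqrt(0.5), 160)
print(outcomes.count("left"), outcomes.count("right"))
```

With an equal superposition the two counts should come out roughly balanced, while an atom prepared entirely in one state is always found on the same side.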

Physicists at EPFL propose a new “quantum simulator”: a laser-based device that can be used to study a wide range of quantum systems. Studying it, the researchers have found that photons can behave like magnetic dipoles at temperatures close to absolute zero, following the laws of quantum mechanics. The simple simulator can be used to better understand the properties of complex materials under such extreme conditions.

When subject to the laws of quantum mechanics, systems made of many interacting particles can display behavior so complex that its quantitative description defies the capabilities of the most powerful computers in the world. In 1981, the visionary physicist Richard Feynman argued that we can simulate such complex behavior using an artificial apparatus governed by the very same quantum laws – what has come to be known as a “quantum simulator.”

One example of a complex quantum system is a magnet held at very low temperatures. Close to absolute zero (−273.15 degrees Celsius), magnetic materials may undergo what is known as a “quantum phase transition.” Like a conventional phase transition (e.g. ice melting into water, or water evaporating into steam), the system still switches between two states, except that close to the transition point the system manifests quantum entanglement – the most profound feature predicted by quantum mechanics. Studying this phenomenon in real materials is an astoundingly challenging task for experimental physicists.

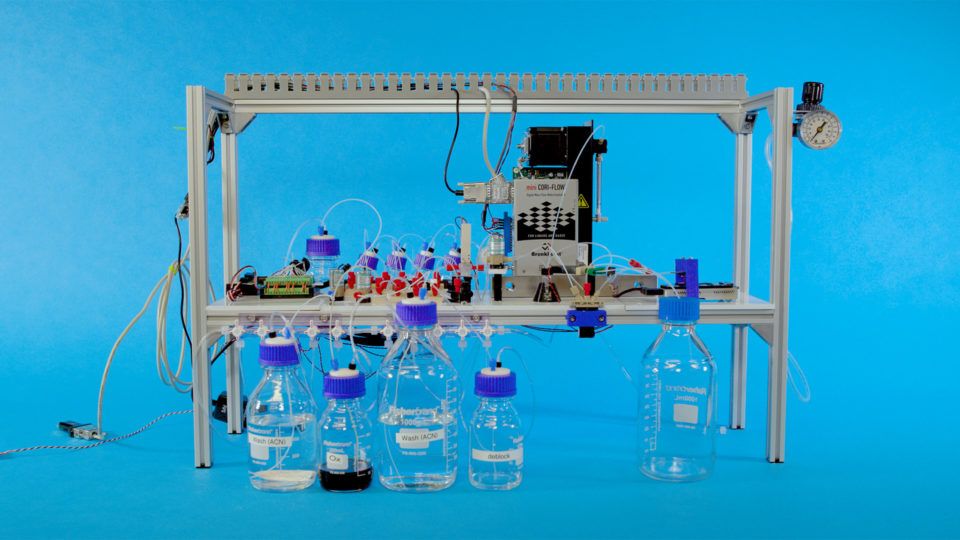

Microsoft has helped build the first device that automatically encodes digital information into DNA and back to bits again.

DNA storage: Microsoft has been working toward a photocopier-size device that would replace data centers by storing files, movies, and documents in DNA strands, which can pack in information at mind-boggling density.

According to Microsoft, all the information stored in a warehouse-size data center would fit into a set of Yahtzee dice, were it written in DNA.
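To make the idea of turning bits into DNA and back concrete, here is a minimal sketch of a round trip. It assumes the simplest possible mapping, two bits per nucleotide (00 to A, 01 to C, 10 to G, 11 to T); this mapping and the names `encode` and `decode` are illustrative assumptions, not Microsoft's scheme. Practical systems add error correction and avoid sequences that are hard to synthesize or read.

```python
# Assumed mapping for illustration only: two bits per DNA base.
BASE_FOR_BITS = {"00": "A", "01": "C", "10": "G", "11": "T"}
BITS_FOR_BASE = {base: bits for bits, base in BASE_FOR_BITS.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a string of bases, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BASE_FOR_BITS[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Turn a string of bases back into the original bytes."""
    bits = "".join(BITS_FOR_BASE[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"hello")
print(strand)          # 20 bases for 5 bytes
print(decode(strand))  # round-trips to the original bytes
```

At two bits per base, every byte becomes four nucleotides, which hints at why DNA can hold information so densely.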

A good intro to quantum computers, at 5 levels of explanation, from kid-level to expert.

WIRED has challenged IBM’s Dr. Talia Gershon (Senior Manager, Quantum Research) to explain quantum computing to 5 different people: a child, a teen, a college student, a grad student, and a professional.

Quantum computing expert explains one concept in 5 levels of difficulty | WIRED.

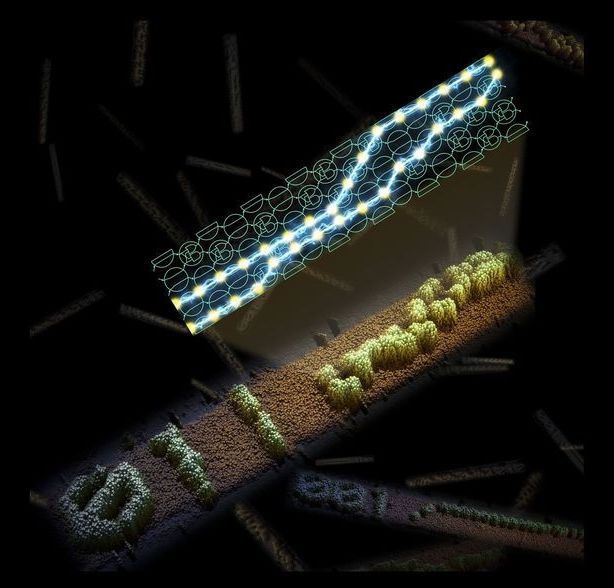

Computer scientists at the University of California, Davis, and the California Institute of Technology have created DNA molecules that can self-assemble into patterns essentially by running their own program. The work is published March 21 in the journal Nature.

“The ultimate goal is to use computation to grow structures and enable more sophisticated molecular engineering,” said David Doty, assistant professor of computer science at UC Davis and co-first author on the paper.

The system is analogous to a computer, but instead of using transistors and diodes, it uses molecules to represent a six-bit binary number (for example, 011001). The team developed a variety of algorithms that can be computed by the molecules.
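As a rough software analogy for computing on a six-bit input, the sketch below reads the article's example value 011001 and makes a single left-to-right pass over its bits. The parity function is an illustrative choice only; the excerpt does not say which algorithms the team implemented, and the names `parse_six_bits` and `parity` are hypothetical.

```python
def parse_six_bits(text):
    """Convert a string such as "011001" into a list of six 0/1 integers."""
    if len(text) != 6 or set(text) - {"0", "1"}:
        raise ValueError("expected exactly six binary digits")
    return [int(ch) for ch in text]


def parity(bits):
    """Return 1 if the number of 1 bits is odd, else 0 (illustrative only)."""
    result = 0
    for bit in bits:
        result ^= bit  # XOR flips the running result on every 1 bit
    return result


bits = parse_six_bits("011001")
value = int("011001", 2)
print(value, parity(bits))  # prints "25 1"
```

The input 011001 is 25 in decimal and contains three 1 bits, so its parity is odd.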

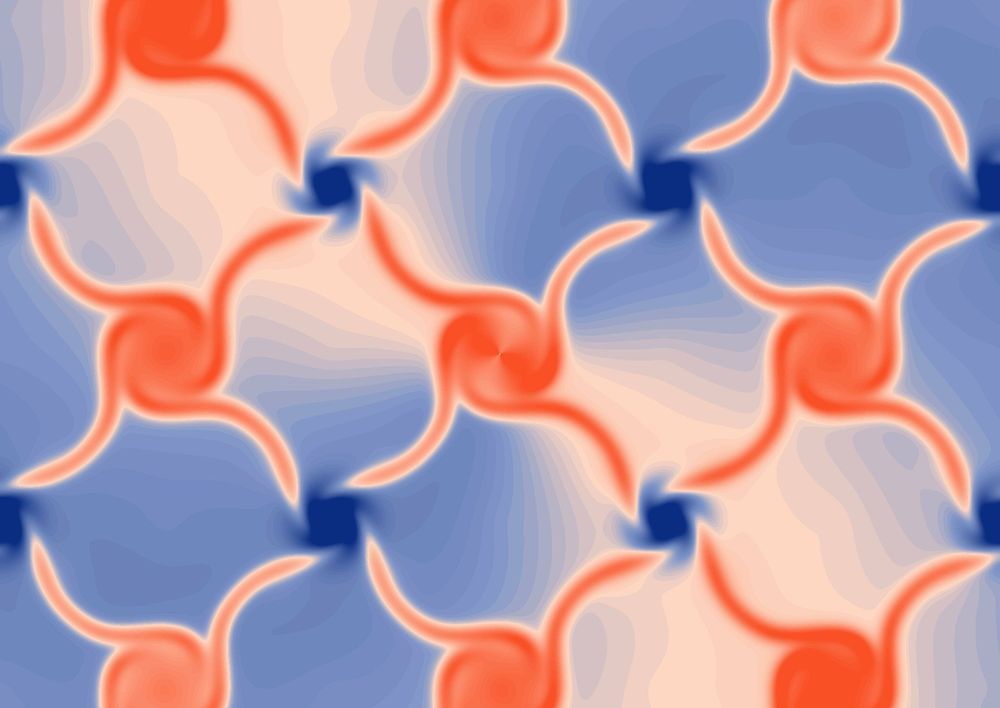

The realization of so-called topological materials—which exhibit exotic, defect-resistant properties and are expected to have applications in electronics, optics, quantum computing, and other fields—has opened up a new realm in materials discovery.

Several of the hotly studied topological materials to date are known as topological insulators. Their surfaces are expected to conduct electricity with very little resistance, somewhat akin to superconductors but without the need for incredibly chilly temperatures, while their interiors—the so-called “bulk” of the material—do not conduct current.

Now, a team of researchers working at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) has discovered the strongest topological conductor yet, in the form of thin crystal samples that have a spiral-staircase structure. The team’s study of the crystals, dubbed topological chiral crystals, is reported in the March 20 edition of the journal Nature.

Carbon monoxide detectors in our homes warn of a dangerous buildup of that colorless, odorless gas we normally associate with death. Astronomers, too, have generally assumed that a build-up of carbon monoxide in a planet’s atmosphere would be a sure sign of lifelessness. Now, a UC Riverside-led research team is arguing the opposite: celestial carbon monoxide detectors may actually alert us to a distant world teeming with simple life forms.

“With the launch of the James Webb Space Telescope two years from now, astronomers will be able to analyze the atmospheres of some rocky exoplanets,” said Edward Schwieterman, the study’s lead author and a NASA Postdoctoral Program fellow in UCR’s Department of Earth Sciences. “It would be a shame to overlook an inhabited world because we did not consider all the possibilities.”

In a study published today in The Astrophysical Journal, Schwieterman’s team used computer models of chemistry in the biosphere and atmosphere to identify two intriguing scenarios in which carbon monoxide readily accumulates in the atmospheres of living planets.