The traveling salesman problem is considered a prime example of a combinatorial optimization problem. Now a Berlin team led by theoretical physicist Prof. Dr. Jens Eisert of Freie Universität Berlin and Helmholtz-Zentrum Berlin (HZB) has shown that a certain class of such problems can in fact be solved better and much faster with quantum computers than with conventional methods.
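To illustrate why the traveling salesman problem counts as a hard combinatorial optimization problem, here is a minimal classical brute-force sketch. It is not the quantum approach studied by the Berlin team, and the function names (`tour_length`, `brute_force_tsp`) are hypothetical. With city 0 fixed as the start, it checks all (n − 1)! orderings of the remaining cities, which is why exhaustive search quickly becomes infeasible: 20 cities already give 19! ≈ 1.2 × 10^17 tours.

```python
import math
from itertools import permutations

def tour_length(tour, dist):
    """Total length of a closed tour that returns to its starting city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))

def brute_force_tsp(dist):
    """Exhaustively search all tours; feasible only for a handful of cities."""
    n = len(dist)
    best_tour, best_len = None, math.inf
    # Fix city 0 as the start so rotations of the same tour are not re-checked.
    for rest in permutations(range(1, n)):
        tour = (0,) + rest
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

# Hypothetical example: four cities at the corners of a unit square.
points = [(0, 0), (1, 0), (1, 1), (0, 1)]
dist = [[math.dist(p, q) for q in points] for p in points]
tour, length = brute_force_tsp(dist)
print(tour, length)  # (0, 1, 2, 3) 4.0
```

The optimal tour simply walks around the square's perimeter; the point of the sketch is the factorial number of candidates, not the answer itself.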

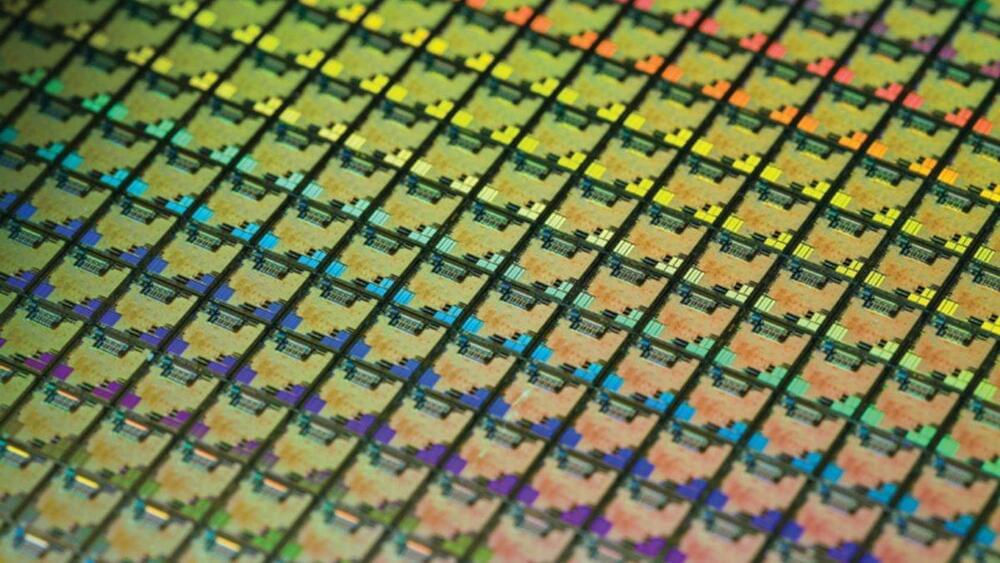

Quantum computers use so-called qubits, which, unlike the bits in conventional logic circuits, are not restricted to the values zero or one but can exist in a superposition of both states. Qubits can be realized with ultracold atoms, ions, or superconducting circuits, and building a quantum computer with many qubits is still technically very challenging. However, mathematical methods can already be used to explore what fault-tolerant quantum computers could achieve in the future.
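A single qubit can be described by two complex amplitudes, α for |0⟩ and β for |1⟩, with |α|² + |β|² = 1. A measurement still returns either 0 or 1, with probabilities |α|² and |β|². The following is a minimal classical sketch of that bookkeeping for one qubit only; the helper names (`make_qubit`, `probabilities`, `measure`) are hypothetical and not taken from any quantum computing library.

```python
import math
import random

def make_qubit(alpha, beta):
    """Return the normalized state alpha|0> + beta|1> as a pair of amplitudes."""
    norm = math.sqrt(abs(alpha) ** 2 + abs(beta) ** 2)
    if norm == 0:
        raise ValueError("state vector must be non-zero")
    return alpha / norm, beta / norm

def probabilities(state):
    """Measurement probabilities (P(0), P(1)) given by the squared amplitudes."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2

def measure(state, rng=random):
    """Simulate one measurement: the outcome is always 0 or 1, never in between."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1

# Equal superposition of |0> and |1>: each outcome occurs about half the time.
plus = make_qubit(1, 1)
rng = random.Random(42)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(plus, rng)] += 1
print(probabilities(plus), counts)
```

The superposition lives in the amplitudes, while every individual measurement yields a definite 0 or 1. Simulating many qubits this way requires tracking 2^n amplitudes, which is one reason classical simulation of large quantum computers becomes impractical.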

“There are a lot of myths about it, and sometimes a certain amount of hot air and hype. But we have approached the issue rigorously, using mathematical methods, and delivered solid results on the subject. Above all, we have clarified in what sense there can be any advantages at all,” says Prof. Dr. Jens Eisert, who heads a joint research group at Freie Universität Berlin and Helmholtz-Zentrum Berlin.
