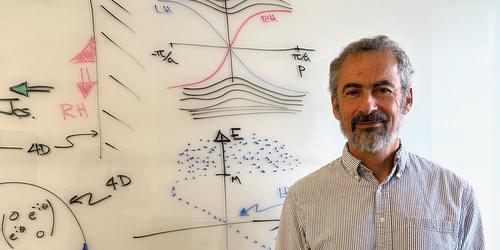

David Kaplan has developed a lattice model for particles that are left- or right-handed, offering a firmer foundation for the theory of weak interactions.

David Kaplan is on a quest to straighten out chirality, or “handedness,” in particle physics. A theorist at the University of Washington, Seattle, Kaplan has been wrestling with chirality conundrums for over 30 years. The main problem he has been working on is how to place chiral particles, such as left-handed electrons or right-handed antineutrinos, on a discrete space-time, or “lattice.” That may sound like a minor concern, but without a solution to this problem the weak interaction—and by extension the standard model of particle physics—can’t be simulated on a computer beyond low-energy approximations. Attempts to develop a lattice theory for chiral particles have run into model-dooming inconsistencies. There’s even a well-known theorem that says the whole endeavor should be impossible.

Kaplan is unfazed. He has been a pioneer in formulating chirality's place in particle physics. One of his main contributions has been to show that some of chirality's problems can be solved in extra dimensions. Kaplan has now taken this extra-dimension strategy further, showing that reducing the boundaries, or edges, around the extra dimensions can help keep left- and right-handed particle states from mixing [1, 2]. With further work, he believes this breakthrough could finally make the lattice "safe" for chiral particles. Physics Magazine spoke to Kaplan about the issues surrounding chirality in particle physics.
