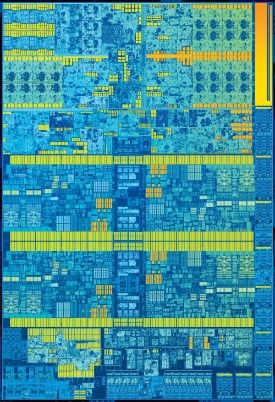

So, you think you’ve seen it all? You haven’t seen anything yet. By the year 2030, advances in human intelligence may surpass anything we’ve seen before. In fact, some predictions offer glimpses of something truly amazing: the development of a human hybrid, a mind that thinks with the help of artificial intelligence.

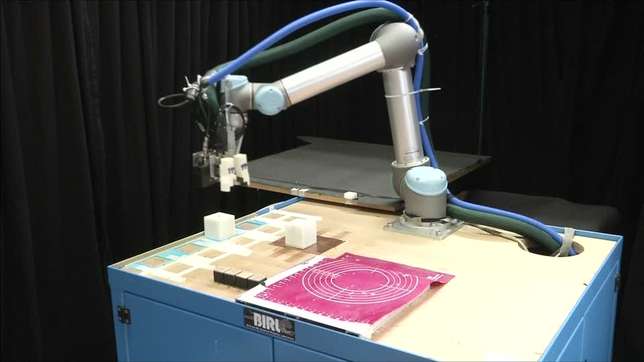

Ray Kurzweil, director of engineering at Google, spoke openly about this idea at the Exponential Finance Conference in New York. He predicts that humans will have hybrid brains able to connect to the cloud, just as computers do. This cloud will hold thousands of computers that will augment human intelligence, and the larger the cloud, the more sophisticated the thinking. The link between brain and cloud will be made by nanobots, tiny robots built from DNA strands. Sounds like a sci-fi movie, doesn’t it?

Kurzweil says: