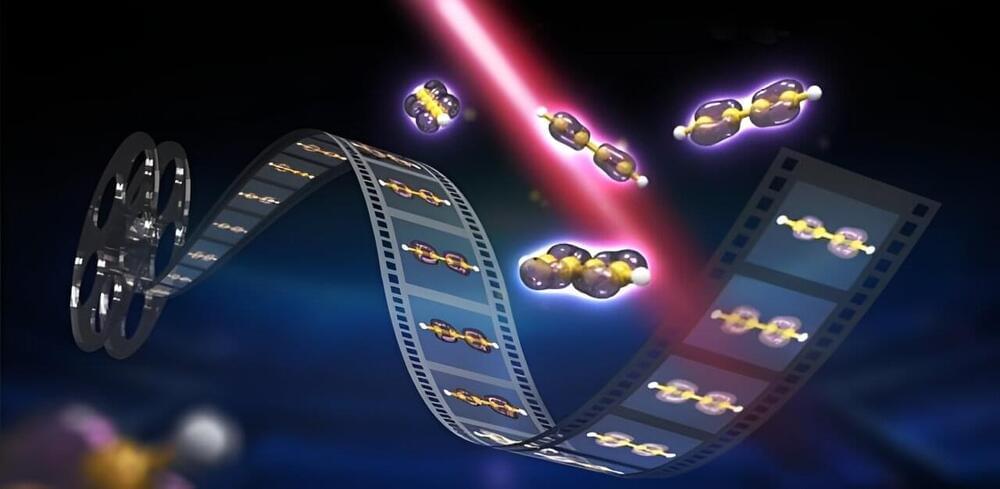

If modern artificial intelligence has a founding document, a sacred text, it is Google’s 2017 research paper “Attention Is All You Need.” This paper introduced a new deep learning architecture known as the transformer, which has gone on to revolutionize the field of AI over the past half-decade.

The generative AI mania currently taking the world by storm can be traced directly to the invention of the transformer. Virtually every major AI model and product in the headlines today (ChatGPT, GPT-4, Midjourney, Stable Diffusion, GitHub Copilot, and so on) is built using transformers, in whole or in part.

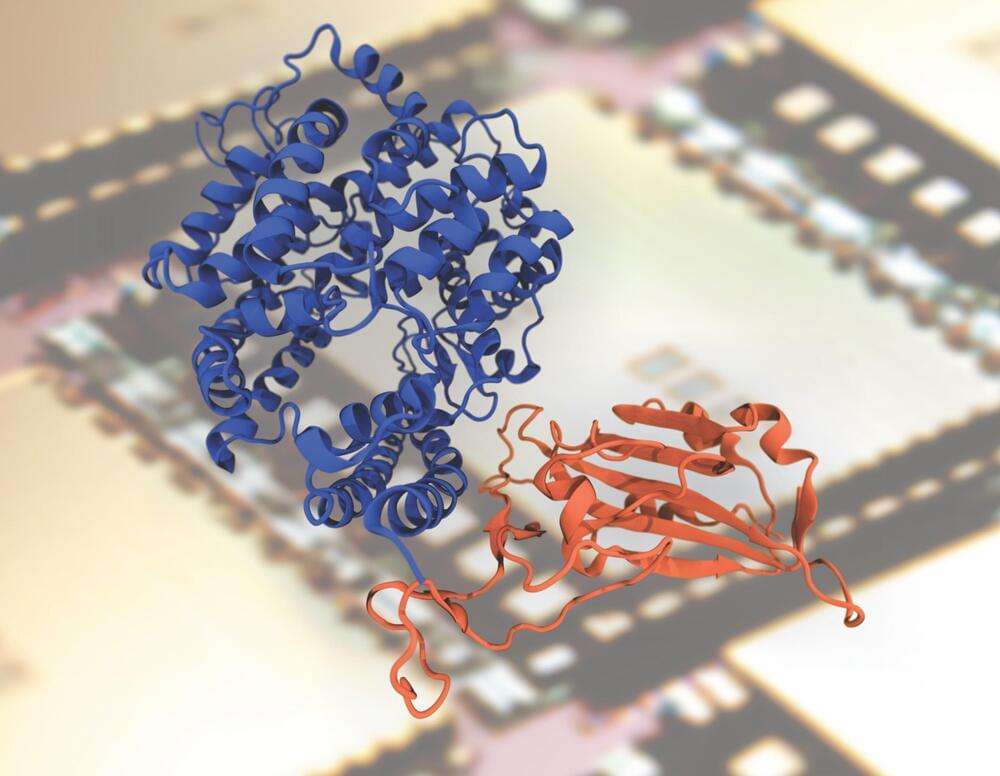

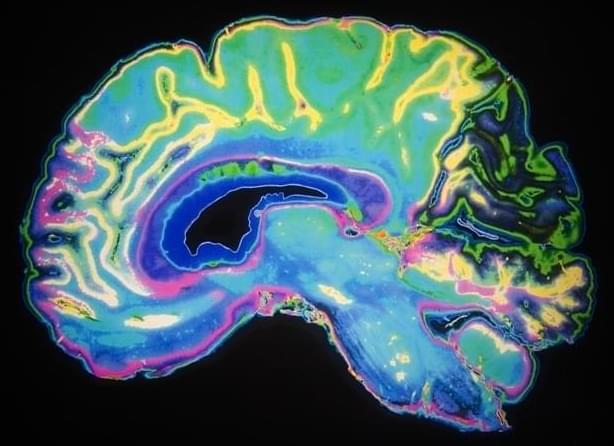

Transformers are remarkably general-purpose: although they were initially developed specifically for language translation, they are now advancing the state of the art in domains ranging from computer vision to robotics to computational biology.

In short, transformers represent the undisputed gold standard for AI technology today.

But no technology remains dominant forever.