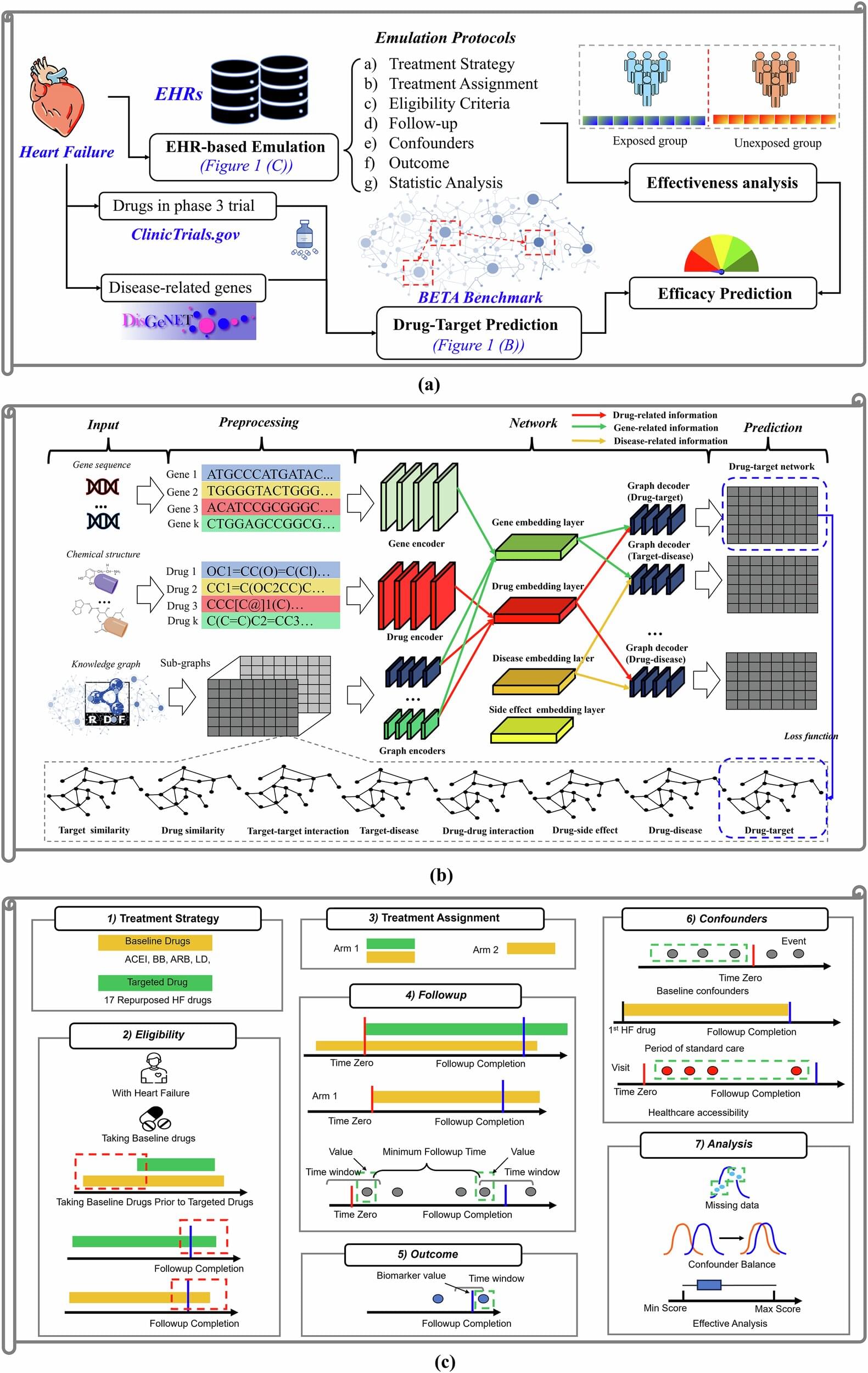

Mayo Clinic researchers have developed a new way to predict whether existing drugs could be repurposed to treat heart failure, one of the world’s most pressing health challenges. By combining advanced computer modeling with real-world patient data, the team has created “virtual clinical trials” that may facilitate the discovery of effective therapies while reducing the time, cost, and risk of failed studies.

“We’ve shown that with our framework, we can predict the clinical effect of a drug without a randomized controlled trial. We can say with high confidence if a drug is likely to succeed or not,” says Nansu Zong, Ph.D., a biomedical informatician at Mayo Clinic and lead author of the study, which was published in npj Digital Medicine.