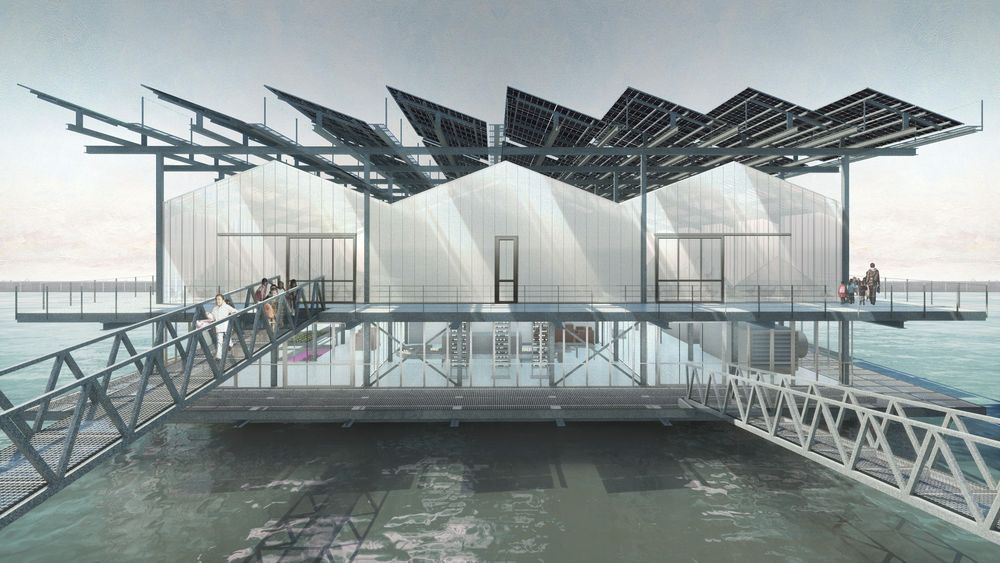

We’ve reported on several eye-catching floating architecture projects lately, including a “parkipelago” and a greenery-covered office. This solar-powered urban poultry farm is the most off-the-wall idea of the bunch, though. Assuming it goes ahead as planned, it will host roughly 7,000 egg-laying hens, as well as an area for growing food, in a harbor in Rotterdam, the Netherlands.

The building is officially named Floating Farm Poultry and was designed by Goldsmith for a company named Floating Farm Holding BV.

It will be based on a floating platform with three pontoons and will measure 3,500 sq m (roughly 37,670 sq ft), spread over three floors. The lower level will be used for farming vegetables, herbs and cresses under LED lighting, while the floor above will serve as a factory floor for processing and packaging. The uppermost level is conceived as the poultry area and will house the egg-laying hens.