The study of visual illusions has proven to be a very useful approach in vision science. In this work we start by showing that, while convolutional neural networks (CNNs) trained for low-level visual tasks in natural images may be deceived by brightness and color illusions, some network illusions can be inconsistent with the perception of humans. Next, we analyze where these similarities and differences may come from. On one hand, the proposed linear eigenanalysis explains the overall similarities: in simple CNNs trained for tasks like denoising or deblurring, the linear version of the network has center-surround receptive fields, and global transfer functions are very similar to the human achromatic and chromatic contrast sensitivity functions in human-like opponent color spaces. These similarities are consistent with the long-standing hypothesis that considers low-level visual illusions as a by-product of the optimization to natural environments. Specifically, here human-like features emerge from error minimization. On the other hand, the observed differences must be due to the behavior of the human visual system not explained by the linear approximation. However, our study also shows that more ‘flexible’ network architectures, with more layers and a higher degree of nonlinearity, may actually have a worse capability of reproducing visual illusions. This implies, in line with other works in the vision science literature, a word of caution on using CNNs to study human vision: on top of the intrinsic limitations of the L + NL formulation of artificial networks to model vision, the nonlinear behavior of flexible architectures may easily be markedly different from that of the visual system.
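As a rough illustration of why a center-surround receptive field gives a contrast-sensitivity-like transfer function, the sketch below builds a 1-D difference-of-Gaussians kernel and measures its gain across spatial frequencies. This is a textbook stand-in, not the networks or the eigenanalysis from the study; the function names and all parameter values (`sigma_center`, `sigma_surround`, `surround_weight`) are assumptions chosen only for illustration.

```python
import cmath
import math


def gaussian(x, sigma):
    """Unit-area Gaussian evaluated at position x."""
    return math.exp(-x * x / (2 * sigma * sigma)) / (sigma * math.sqrt(2 * math.pi))


def dog_kernel(half_width, sigma_center, sigma_surround, surround_weight):
    """Center-surround kernel: narrow excitatory center minus broad inhibitory surround."""
    return [
        gaussian(x, sigma_center) - surround_weight * gaussian(x, sigma_surround)
        for x in range(-half_width, half_width + 1)
    ]


def transfer_gain(kernel, n_freqs):
    """Magnitude of the kernel's frequency response from 0 to 0.5 cycles/sample."""
    gains = []
    for k in range(n_freqs):
        freq = 0.5 * k / (n_freqs - 1)
        response = sum(
            w * cmath.exp(-2j * math.pi * freq * i) for i, w in enumerate(kernel)
        )
        gains.append(abs(response))
    return gains


# Illustrative (assumed) parameters, in units of samples.
kernel = dog_kernel(half_width=20, sigma_center=1.5, sigma_surround=4.0, surround_weight=0.8)
gains = transfer_gain(kernel, n_freqs=33)
peak_index = gains.index(max(gains))
print("gain at zero frequency:", round(gains[0], 3))
print("peak gain:", round(gains[peak_index], 3), "at frequency index", peak_index)
```

With these values the gain is low at zero frequency, peaks at an intermediate frequency, and falls off again at high frequencies. That band-pass shape is qualitatively what the achromatic contrast sensitivity function looks like.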

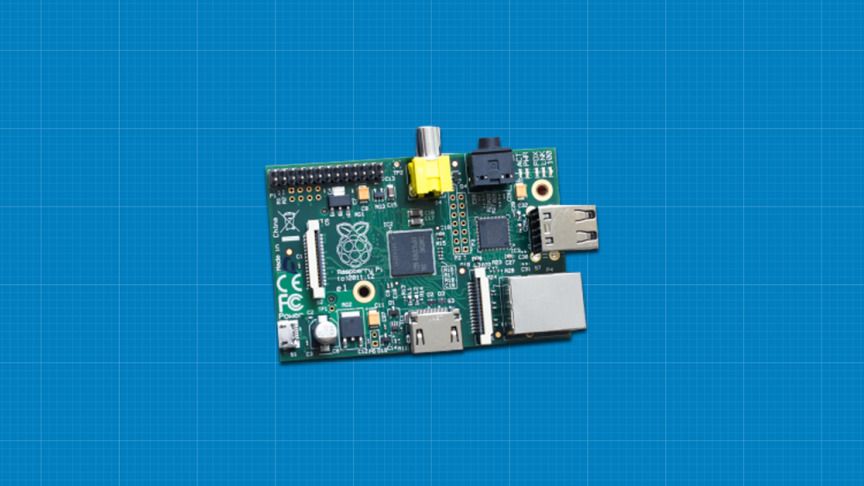

Raspberry Pi and ROS Robotics are versatile, exciting tools that allow you to build many wondrous projects. However, they are not always the easiest systems to manage and use… until now.

The Ultimate Raspberry Pi & ROS Robotics Developer Super Bundle will turn you into a Raspberry Pi and ROS Robotics expert in no time. With over 39 hours of training and over 15 courses, the bundle leaves no stone unturned.

There is almost nothing you won’t be able to do with your new-found bundle on Raspberry Pi and ROS Robotics.

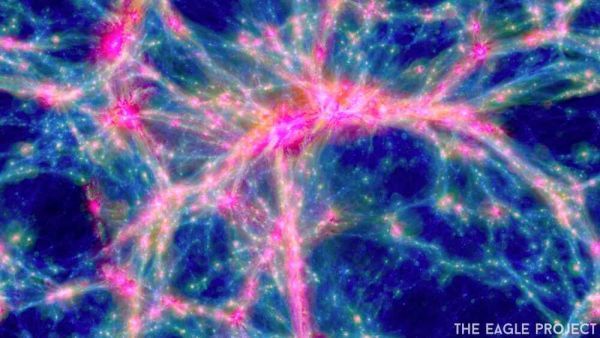

A hypothetical particle known as the ultralight boson could be responsible for our universe’s dark matter.

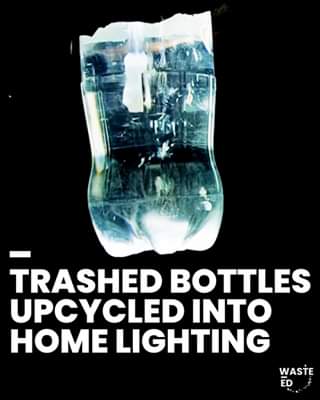

Empty Coke bottles are being turned into solar-powered light sources! They’re lighting the way for communities without access to electricity and upcycling plast…

Northwestern researchers have developed a first-of-its-kind soft, aquatic robot that is powered by light and rotating magnetic fields. These life-like robotic materials could someday be used as “smart” microscopic systems for production of fuels and drugs, environmental cleanup or transformative medical procedures.

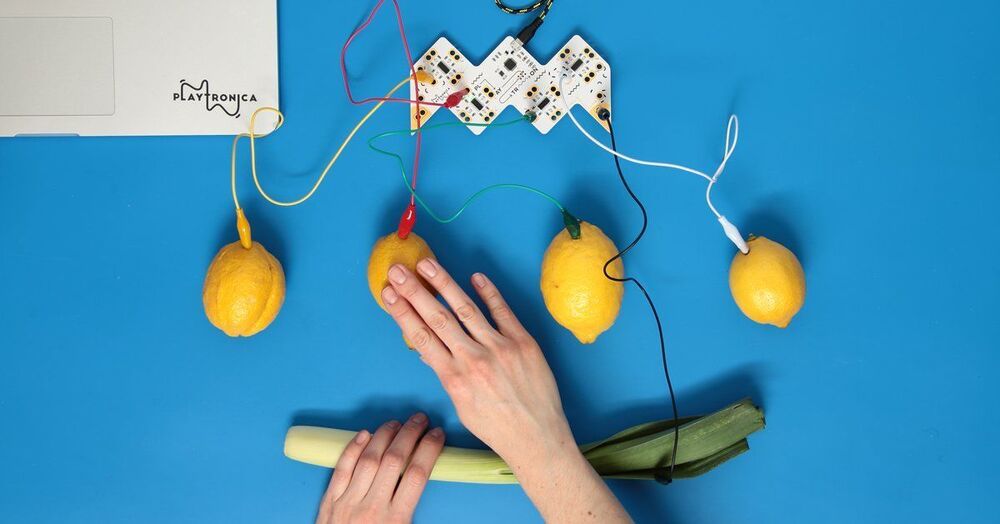

Creative technology studio Playtronica has found a way of making music with pretty much anything, including vegetables. Their electronic devices transform touch into MIDI notes, making anything into a MIDI controller, including one that turns the human body into a keyboard. It works by effectively creating a circuit between the device and the human body or the fruit. The device is then connected to a computer, so when you touch the instrument the circuit is closed and a specified sound is played. The tools are designed to work with organic materials and mostly anything that has water inside.
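The touch-to-sound step can be sketched as a small state comparison: when a pad's circuit goes from open to closed, emit a MIDI note-on message, and when it opens again, emit a note-off. This is a minimal sketch, not Playtronica's firmware or software; the function `midi_events`, the pad names and the note assignments are all hypothetical. Only the message layout (status byte `0x90` or `0x80` combined with the channel, then note number, then velocity) follows the standard MIDI format.

```python
NOTE_ON = 0x90   # MIDI status byte for note-on (low nibble is the channel)
NOTE_OFF = 0x80  # MIDI status byte for note-off


def midi_events(previous, current, pad_notes, channel=0, velocity=100):
    """Compare two circuit snapshots and return raw MIDI messages.

    previous/current map a pad name to True when its circuit is closed
    (i.e. the object is being touched). pad_notes maps a pad name to a
    MIDI note number. All names here are hypothetical.
    """
    events = []
    for pad, note in pad_notes.items():
        was_closed = previous.get(pad, False)
        is_closed = current.get(pad, False)
        if is_closed and not was_closed:
            # Circuit just closed: start the note.
            events.append(bytes([NOTE_ON | channel, note, velocity]))
        elif was_closed and not is_closed:
            # Circuit just opened: stop the note.
            events.append(bytes([NOTE_OFF | channel, note, 0]))
    return events


# Hypothetical mapping: a carrot plays middle C (60), an apple plays E (64).
pad_notes = {"carrot": 60, "apple": 64}
touch = midi_events({}, {"carrot": True}, pad_notes)
release = midi_events({"carrot": True}, {"carrot": False}, pad_notes)
print(touch, release)
```

A real device would send these bytes to a synthesizer or computer over a MIDI connection; the sketch stops at building the messages.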

Every day, we produce large quantities of urine, at no cost. So instead of flushing it down the toilet, what if it was transformed into something useful? The Down to Earth team takes a closer look.

Urine is made up of 95 percent water as well as nutrients such as nitrogen, phosphorus and potassium, all of which help plants grow. These three are known as the “Big 3” primary nutrients and are used to produce synthetic fertilisers, a process that is both expensive and polluting.

So young and already so evolved: Thanks to observations obtained at the Large Binocular Telescope, an international team of researchers coordinated by Paolo Saracco of the Istituto Nazionale di Astrofisica (INAF, Italy) was able to reconstruct the wild evolutionary history of an extremely massive galaxy that existed 12 billion years ago, when the universe was only 1.8 billion years old, about 13% of its present age. This galaxy, dubbed C1-23152, formed in only 500 million years, an incredibly short time to give rise to a mass of about 200 billion suns. To do so, it produced as many as 450 stars per year, more than one per day, a star formation rate almost 300 times higher than the current rate in the Milky Way. The information obtained from this study will be fundamental for galaxy formation models, which currently find such objects difficult to account for.
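The figures quoted for C1-23152 can be cross-checked with a few lines of arithmetic. The sketch below uses only the numbers given in the text, plus two labeled assumptions: the present age of the universe is taken as the sum of the two quoted times, and each "star" is treated as one solar mass so that the rates can be compared.

```python
# Figures quoted in the text.
lookback_gyr = 12.0          # galaxy observed as it was 12 billion years ago
age_then_gyr = 1.8           # age of the universe at that time
stellar_mass_suns = 200e9    # about 200 billion solar masses
formation_time_yr = 500e6    # formed in about 500 million years
peak_rate_per_yr = 450       # "as many as 450 stars per year"
milky_way_ratio = 300        # "almost 300 times" the Milky Way's current rate

# Assumption: present age is the sum of the two quoted times.
age_now_gyr = lookback_gyr + age_then_gyr
age_fraction = age_then_gyr / age_now_gyr

# Assumption: one star is roughly one solar mass.
mean_rate_per_yr = stellar_mass_suns / formation_time_yr
stars_per_day = peak_rate_per_yr / 365.25
implied_milky_way_rate = peak_rate_per_yr / milky_way_ratio

print(f"implied present age: {age_now_gyr:.1f} billion years")
print(f"age fraction at observation: {age_fraction:.1%}")
print(f"mean formation rate: {mean_rate_per_yr:.0f} solar masses per year")
print(f"stars per day at the peak rate: {stars_per_day:.2f}")
print(f"implied Milky Way rate: {implied_milky_way_rate:.1f} per year")
```

Under these assumptions the average rate needed to build the galaxy is 400 solar masses per year, consistent with the quoted peak of 450. The age fraction comes out close to 13%, and the implied Milky Way rate is about 1.5 per year.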

The most massive galaxies in the universe reach masses several hundred billion times that of the sun, and although they are numerically just one-third of all galaxies, they contain more than 70% of the stars in the universe. For this reason, the speed at which these galaxies formed and the dynamics involved are among the most debated questions of modern astrophysics. The current model of galaxy formation—the so-called hierarchical model—predicts that smaller galaxies formed earlier, while more massive systems formed later, through subsequent mergers of the pre-existing smaller galaxies.

On the other hand, some of the properties of the most massive galaxies observed in the local universe, such as the age of their stellar populations, suggest instead that they formed at early epochs. Unfortunately, the variety of evolutionary phenomena that galaxies can undergo during their lives does not allow astronomers to define the way in which they formed, leaving large margins of uncertainty. However, an answer to these questions can come from the study of the properties of massive galaxies in the early universe, as close as possible to the time when they formed most of their mass.

Voicebots, humanoids and other tools capture memories for future generations.

What happens after we die—digitally, that is? In this documentary, WSJ’s Joanna Stern explores how technology can tell our stories for generations to come.

Old photos, letters and tapes. Tech has long allowed us to preserve memories of people long after they have died. But with new tools there are now interactive solutions, including memorialized online accounts, voice bots and even humanoid robots. WSJ’s Joanna Stern journeys across the world to test some of those for a young woman who is living on borrowed time. Photo illustration: Adele Morgan/The Wall Street Journal.
