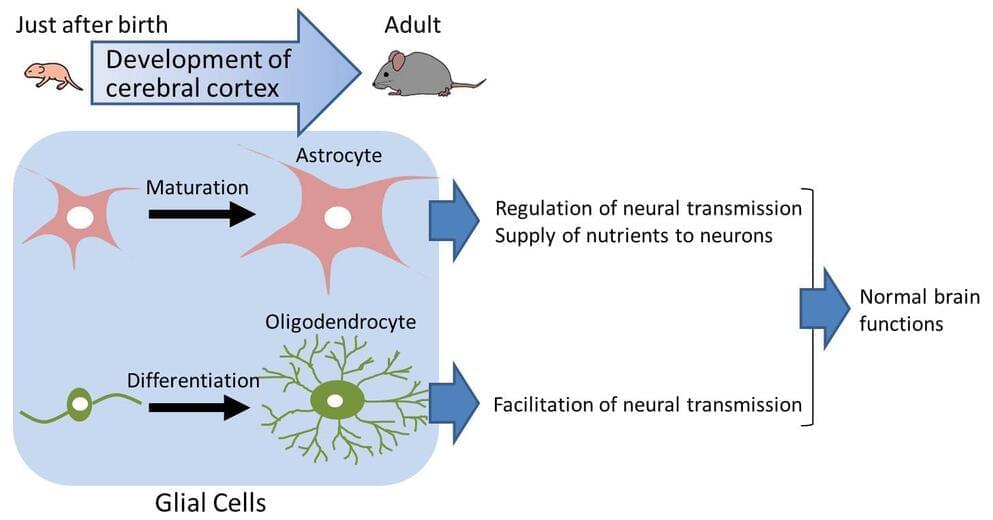

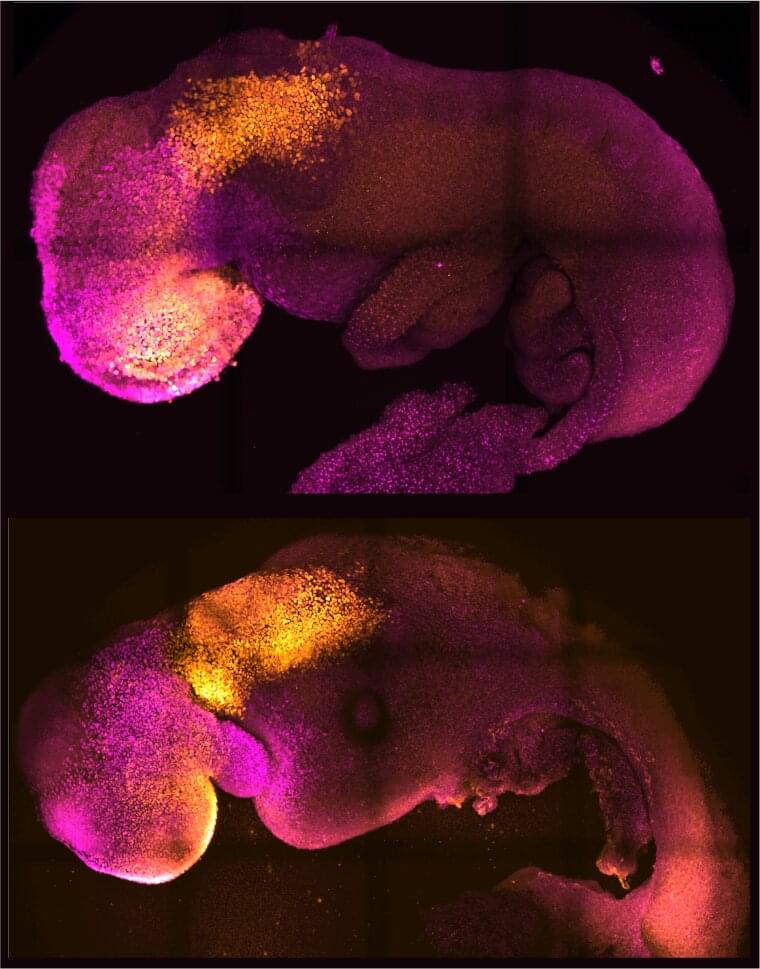

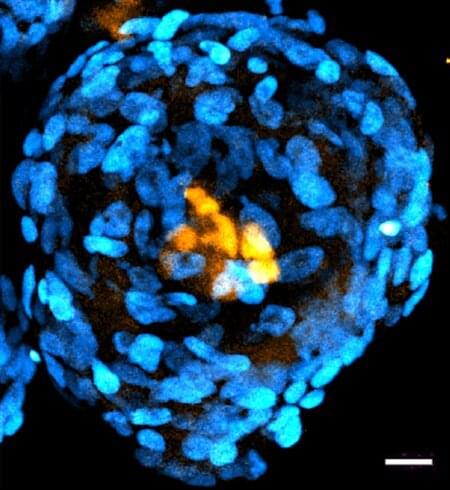

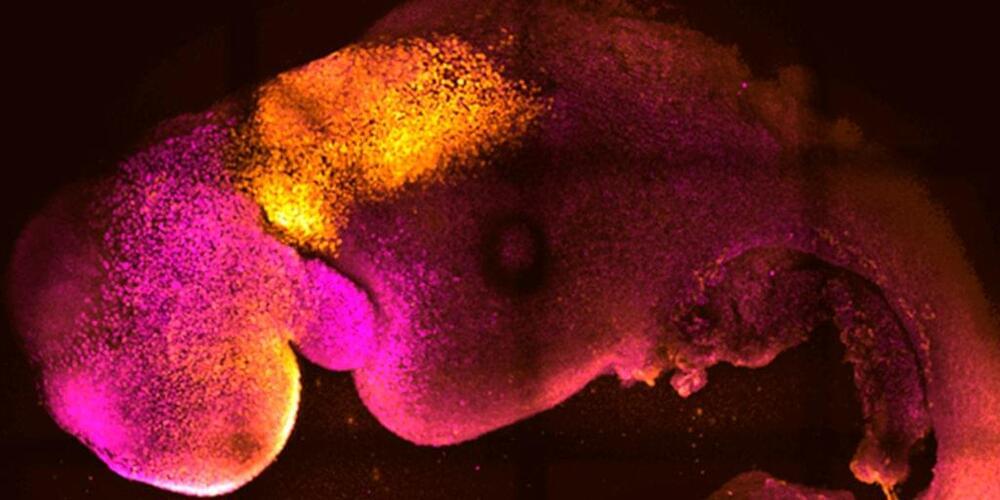

The brain consists of neurons and glial cells. Abnormal development of glial cells causes various diseases and leads to aberrant development of the cerebral cortex. Knocking out the CD38 gene was shown to cause aberrant development of glial cells, especially astrocytes and oligodendrocytes. The CD38 gene is known to be involved in cerebral cortex development. The present study suggests that glial cells are important for cerebral cortex development.

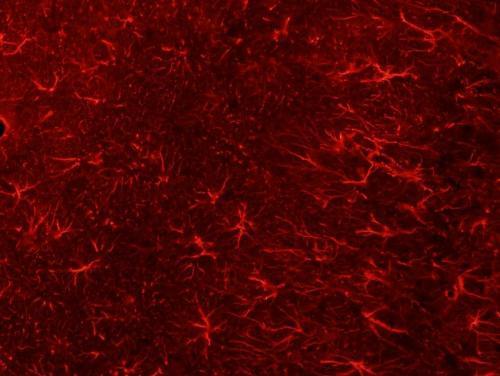

For the brain to develop properly, both neurons and glial cells must develop normally, not only during the fetal stage but also after birth. In the postnatal brain, neurons extend long protrusions (axons and dendrites) to form complex networks for exchanging information. Glial cells, on the other hand, are thought to support the formation of these neuronal networks, regulate the transmission of information, and help neurons survive. Glial cells are known to make up more than 50 percent of all cells in the brain, outnumbering neurons about three to one. The human brain is also known to contain far more glial cells than the brains of rodents or other primates. This suggests that glial cells are particularly important for the higher functions of the brain.

In the past, research on developmental disorders of the brain focused on neurons. Recently, however, attention has shifted to abnormalities of glial cells. Many questions remain unanswered about how glial cells develop in the postnatal brain and how glial cell abnormalities relate to developmental disorders of the brain.