Although lipomatosis is very common and increases with age, it has previously received little discussion or research attention. Scientists at Uppsala University have just published a study that offers significant insights into why human lymph node function declines with age and how this affects immune system performance.

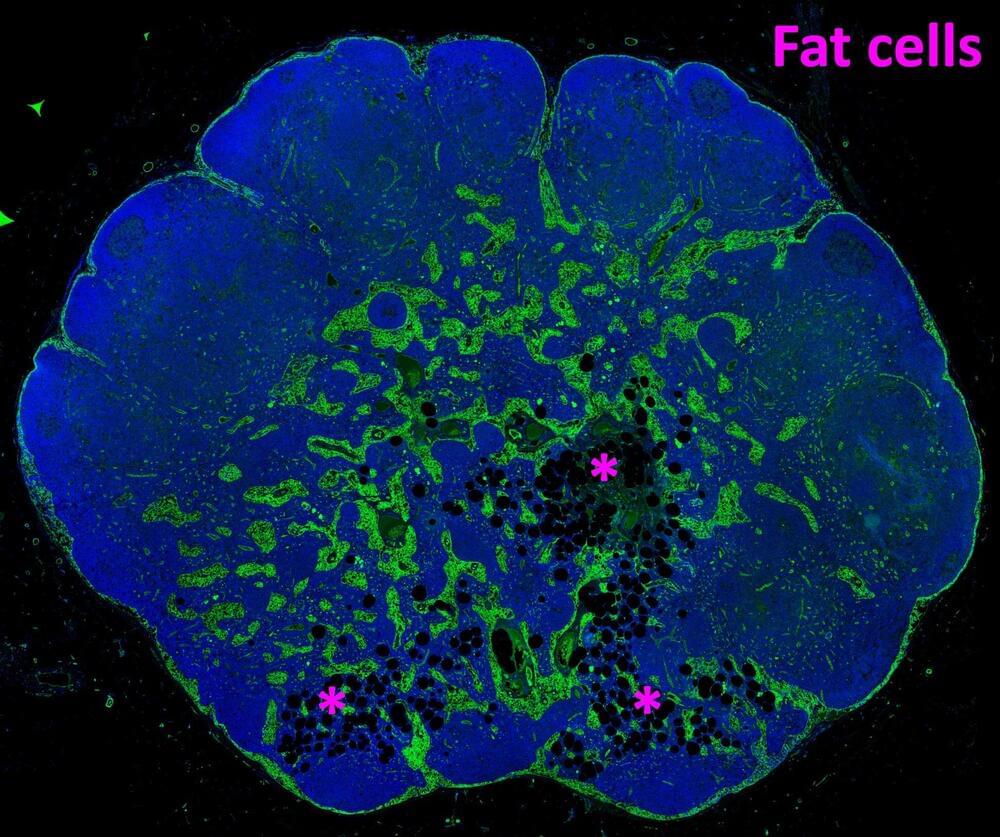

The scientists carefully examined more than 200 lymph nodes and showed that lipomatosis starts in the medulla, the center of the lymph node. They also provided evidence linking lipomatosis to the conversion of the lymph node's supporting cells (fibroblasts) into adipocytes (fat cells), and demonstrated that fibroblast subtypes in the medulla are more likely to develop into adipocytes.

The study is a first step toward understanding why lipomatosis occurs.