For decades the field of cryobiology was largely ignored and underfunded. In recent years, however, several advances have been made that will enable the creation of massively transformative industries. One of these advances is in rapid reheating, pioneered by the Dayong Gao group. Here is the story.

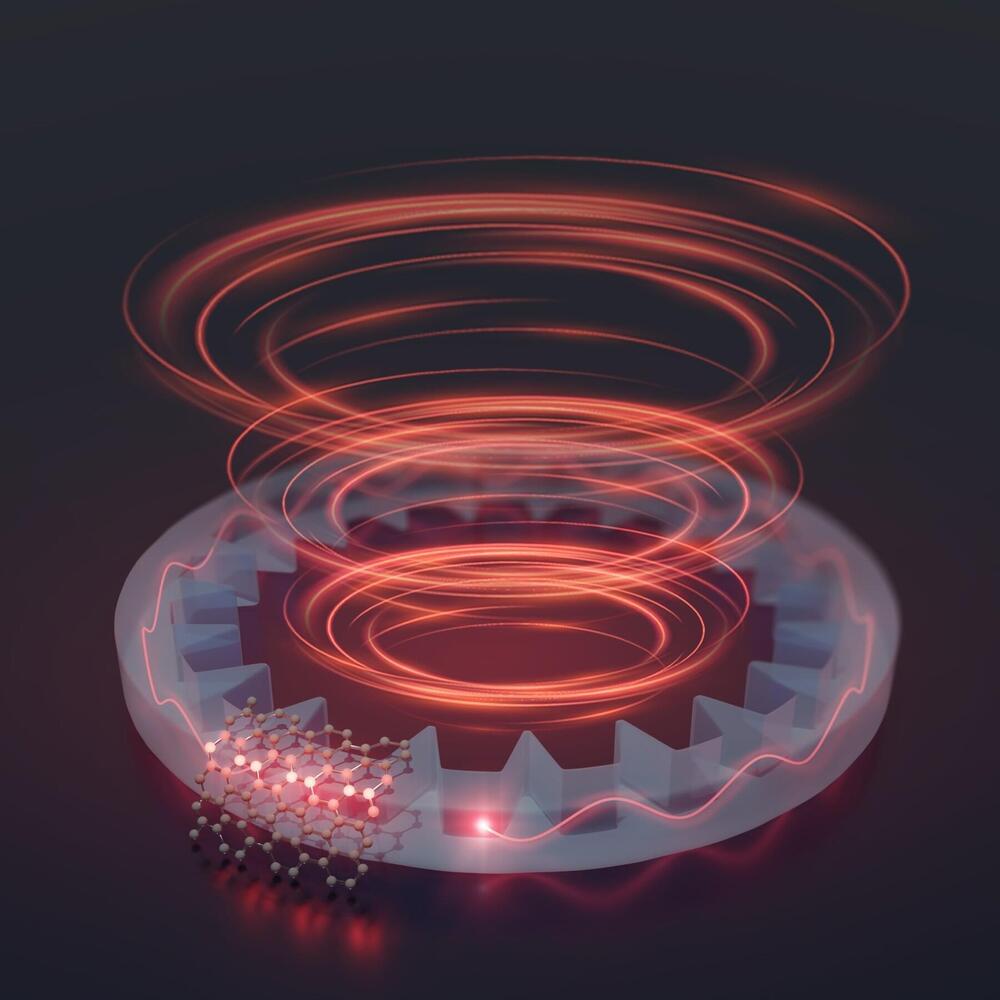

Quantum computers and communication devices work by encoding information into individual or entangled photons, enabling data to be transmitted with quantum security and manipulated exponentially faster than is possible with conventional electronics. Now, quantum researchers at Stevens Institute of Technology have demonstrated a method for encoding vastly more information into a single photon, opening the door to even faster and more powerful quantum communication tools.

Typically, quantum communication systems “write” information onto a photon’s spin angular momentum. In this case, photons carry out either a right or left circular rotation, or form a quantum superposition of the two known as a two-dimensional qubit.

It’s also possible to encode information onto a photon’s orbital angular momentum: the corkscrew path that light follows as it twists and torques forward, with each photon circling around the center of the beam. When the spin and orbital angular momentum interlock, they form a high-dimensional qudit, enabling any of a theoretically infinite range of values to be encoded into and propagated by a single photon.

Posthuman University

Posted in finance, transhumanism

I am registering Posthuman as a religion in the UK, and MVT (although evidence based) as a “religious belief”, which seems consistent with .gov requirements, since the Posthuman Movement started in 1988 in the UK. Why shouldn’t rational folk enjoy the same financial benefits awarded to promoters of Supernaturalism and religious daftness? Perhaps Transhumans should also consider this, or do so jointly with https://Posthuman.org? Are any US citizens interested in this approach? https://www.gov.uk/…/charitable…/charitable-purposes…

Posthuman Psychology, MVT research, World Philosophy, Software development.

The eccentric billionaire has said he’ll ask for an exemption to US sanctions on Iran to provide Starlink internet access to the country.

Summary: The pioneering “soleus pushup” effectively elevates muscle metabolism for hours, even when sitting.

Source: University of Houston.

From the same mind whose research propelled the notion that “sitting too much is not the same as exercising too little,” comes a groundbreaking discovery set to turn a sedentary lifestyle on its ear: The soleus muscle in the calf, though only 1% of your body weight, can do big things to improve the metabolic health in the rest of your body if activated correctly.

Researchers said the reason for this is “remarkable advances” in medical research and in cancer prevention, detection, diagnosis and treatment. Between August 1, 2021 and July 31, 2022, for example, the Food and Drug Administration approved eight new anticancer therapeutics, expanded the use of 10 previously approved therapeutics to new cancer types, and approved two new diagnostic imaging agents.

“We have now a revolution in immune therapies. And when you put that together with the combination of targeted therapies, chemo and radiation therapy, we now have patients that would have died within two years of a diagnosis living 15, 20, 25, 30 years, essentially cured of their malignancies,” AACR President Lisa Coussens said.

However, this progress is not equal, and many populations “continue to shoulder a disproportionate burden of cancer,” the report says.

Engineered living materials promise to aid efforts in human health, energy and environmental remediation. Now they can be built big and customized with less effort.

Bioscientists at Rice University have introduced centimeter-scale, slime-like colonies of engineered bacteria that self-assemble from the bottom up. They can be programmed to soak up contaminants from the environment or to catalyze biological reactions, among many possible applications.

The creation of autonomous engineered living materials, or ELMs, has been a goal of bioscientist Caroline Ajo-Franklin since long before she joined Rice in 2019.

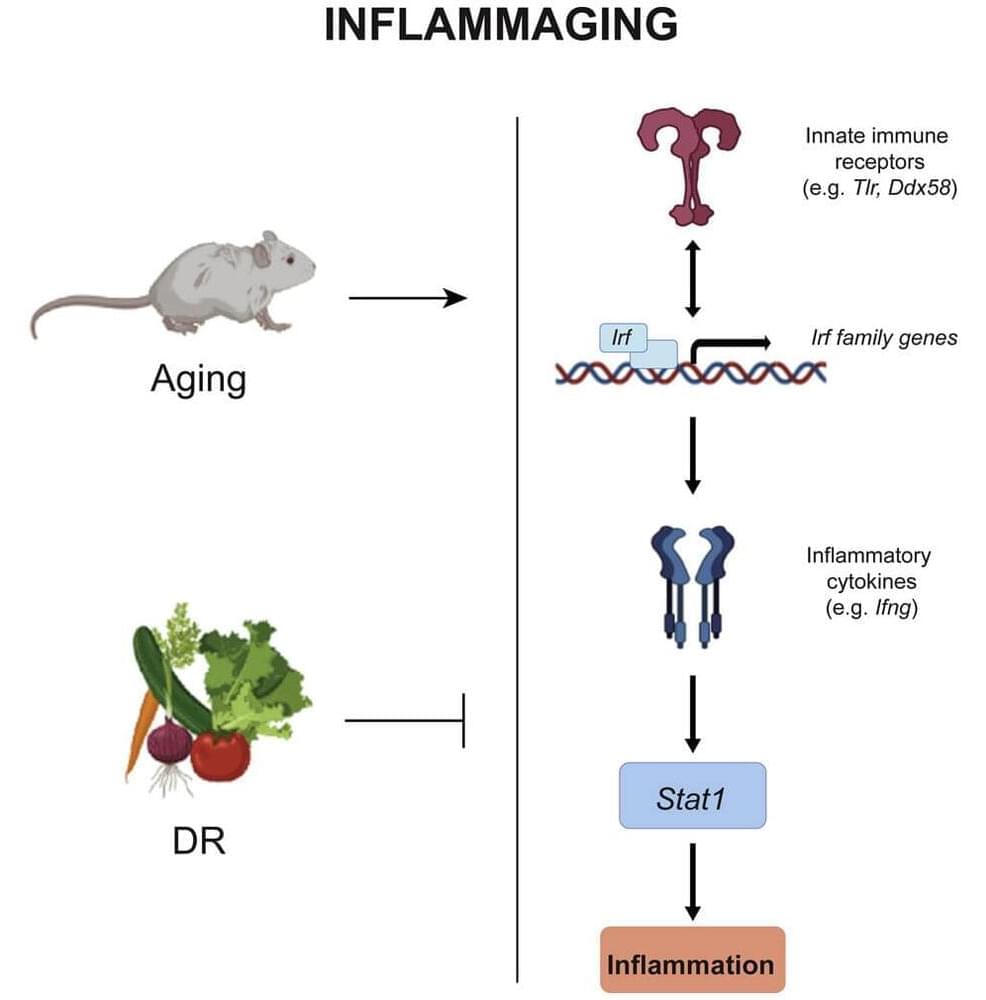

Mild, persistent inflammation in tissue is considered one of the biological hallmarks of the aging process in humans—and at the same time is a risk factor for diseases such as Alzheimer’s or cancer. Prof. Francesco Neri and Dr. Mahdi Rasa of the Leibniz Institute on Aging—Fritz Lipmann Institute (FLI) in Jena have succeeded for the first time in describing at the molecular level the regulatory network that drives the general, multiple-organ inflammatory response. Moreover, they were able to show that dietary restriction can influence this regulatory circuit, thereby inhibiting inflammation.

Inflammation is an immune response of the body that is, in itself, useful: our immune system uses it to fight pathogens or to remove damaged cells from tissue. Once the immune cells have done their work, the inflammation subsides: the infection is over, the wound is healed. Unlike such acute inflammations, age-related chronic inflammation is not local. The innate immune system ramps up its activity overall, resulting in chronic low-grade inflammation. This aging-related inflammation is also known as inflammaging.

Time. We can’t get enough of it. We are desperate to make it flow faster or slower, and yet we are reminded again and again to…

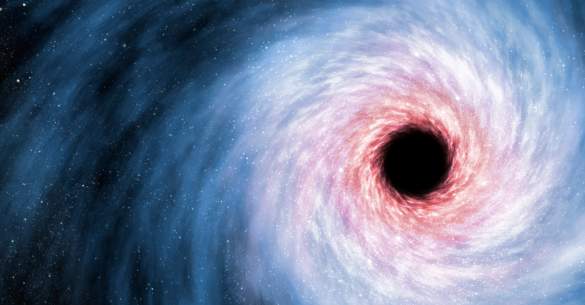

Astronomers have recently found the nearest known black hole to our solar system. According to scientists, the black hole is 1,570 light-years away and about ten times as massive as our sun.

The research on the black hole, known as Gaia BH1, was led by Harvard Society Fellow astrophysicist Kareem El-Badry, with the Harvard-Smithsonian Center for Astrophysics (CfA) and the Max Planck Institute for Astronomy (MPIA).

In addition, El-Badry worked with researchers from CfA, MPIA, Caltech, UC Berkeley, the Flatiron Institute’s Center for Computational Astrophysics (CCA), the Weizmann Institute of Science, the Observatoire de Paris, MIT’s Kavli Institute for Astrophysics and Space Research, and other universities.