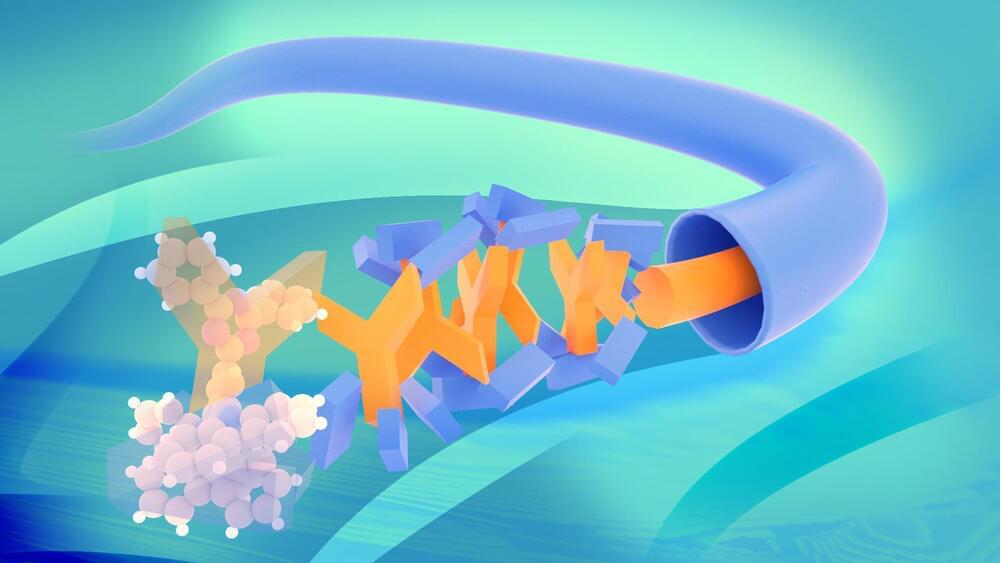

Organic light-emitting diodes (OLEDs) are now widely used. For use in displays, blue OLEDs are needed alongside red and green ones to complete the three primary colors. In blue OLEDs in particular, impurities cause strong electrical losses, which can be partly circumvented only by using highly complex and expensive device layouts. A team from the Max Planck Institute for Polymer Research has now developed a new material concept that could enable efficient blue OLEDs with a greatly simplified structure.

From televisions to smartphones: organic light-emitting diodes (OLEDs) are now finding their way into many devices that we use every day. To display an image, they are needed in the three primary colors red, green and blue. Light-emitting diodes for blue light are still especially difficult to manufacture because, physically speaking, blue light has a high energy, which makes developing suitable materials difficult.

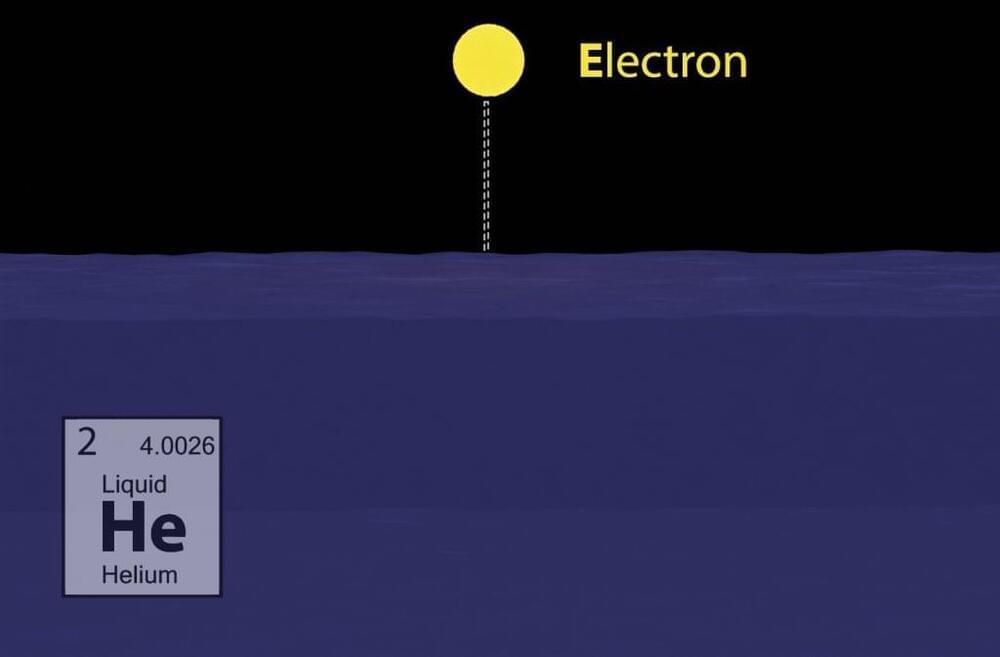

In particular, minute quantities of impurities that cannot be removed from the material play a decisive role in its performance. These impurities (oxygen molecules, for example) act as obstacles that hinder electrons from moving through the diode and taking part in the light-generation process. When an electron is captured by such an obstacle, its energy is converted not into light but into heat. This problem, known as "charge trapping", occurs primarily in blue OLEDs and significantly reduces their efficiency.