
A Paris-based startup has created a genetically engineered houseplant designed to clean the air in your home. The plant builds on the natural purifying properties that houseplants already offer. So, while it adds some color to whatever room you put it in, the company claims it also cleans the air 30 times more effectively than the best conventional air-purifying houseplants.

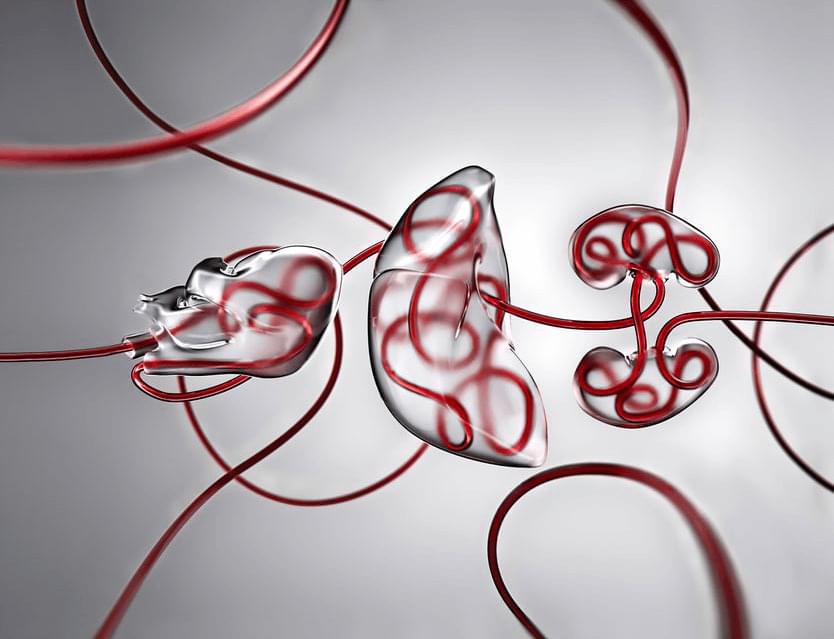

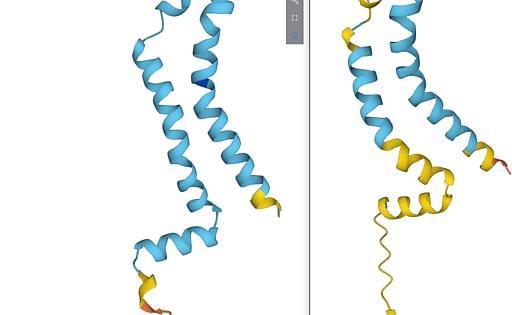

The company, called Neoplants, modified both a pothos plant and its root microbiome to significantly boost the plant’s natural air-cleaning properties. Called Neo P1, the genetically engineered houseplant recently hit the market, and you can purchase it right now.

Plants can offer quite a bit to your home. According to researchers, they can boost your mood and help reduce anxiety, and they can also clean the air thanks to their natural air-purifying properties. With this genetically engineered houseplant, though, you’re getting more than that basic level of purifying. In fact, Neoplants says that the Neo P1 is 30 times more effective than the top air-purifying plants identified in NASA research.