There are many reasons why cancer is so difficult to cure, chief among them the sheer complexity of the disease.

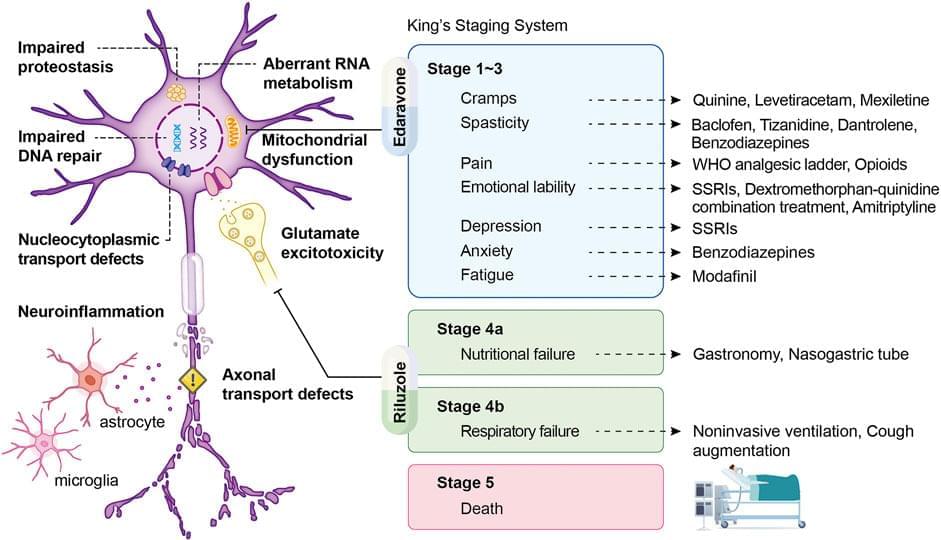

Amyotrophic lateral sclerosis (ALS) is a fatal neurodegenerative disease characterized by progressive degeneration of upper and lower motor neurons (MNs), and its pathogenesis remains unclear. The worldwide prevalence of ALS is approximately 4.42 per 100,000 people, and death typically occurs within 3–5 years after diagnosis. No effective therapeutic modality for ALS is currently available. In recent years, cellular therapy has shown considerable therapeutic potential because it exerts immunomodulatory effects and protects the MN circuit. However, the safety and efficacy of cellular therapy in ALS are still under debate. In this review, we summarize the current progress in cellular therapy for ALS and discuss the underlying mechanisms, current clinical trials, and the pros and cons of cellular therapy using different types of cells. In addition, we highlight clinical studies of mesenchymal stem cells (MSCs) in ALS. The summarized findings of this review can facilitate the future clinical application of precision medicine using cellular therapy in ALS.

ALS is believed to result from a combination of genetic and environmental factors (Masrori and Van Damme, 2020). ALS exists in two forms: familial ALS (fALS) and sporadic ALS (sALS). fALS exhibits a Mendelian pattern of inheritance and accounts for 5–10% of all cases; the remaining 90–95% of cases, which have no apparent genetic link, are classified as sALS (Kiernan et al., 2011). At the genetic level, more than 20 ALS-associated genes have been identified, of which chromosome 9 open reading frame 72 (C9ORF72), fused in sarcoma (FUS), TAR DNA binding protein (TARDBP), and superoxide dismutase 1 (SOD1) are the most common causative genes (Riancho et al., 2019). Beyond genetic factors, the diverse pathological mechanisms underlying ALS-associated neurodegeneration have been discussed (van Es et al., 2017). The clinical presentation of ALS is heterogeneous; the main symptoms include limb weakness, muscle atrophy, and fasciculations, reflecting the involvement of both upper and lower MNs.

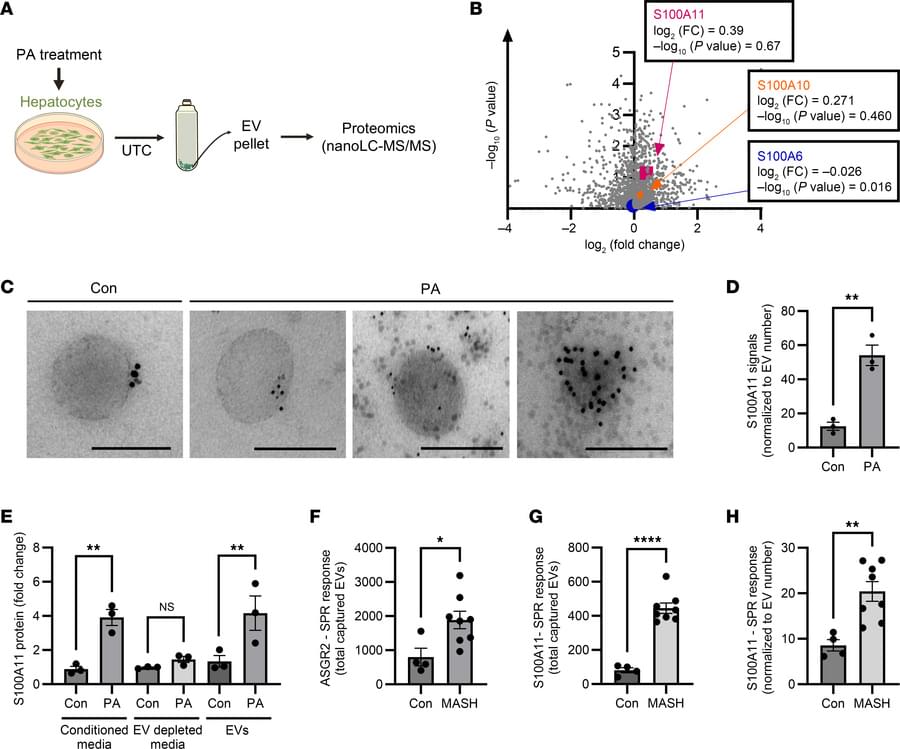

Harmeet Malhi and colleagues discovered that endoplasmic reticulum (ER) stress-mediated upregulation of S100A11 drives the progression of fatty liver disease, revealing a potential target for future treatments:

The figure shows a reduction in the high-fat, high-fructose, high-cholesterol (FFC), lipotoxicity-influenced enhancer (FFC-LIE) mouse groups compared with FFC-scramble controls.

Address correspondence to: Harmeet Malhi, Division of Gastroenterology and Hepatology, Mayo Clinic, 200 First Street SW, Rochester, Minnesota 55905, USA. Phone: 507.284.0686.

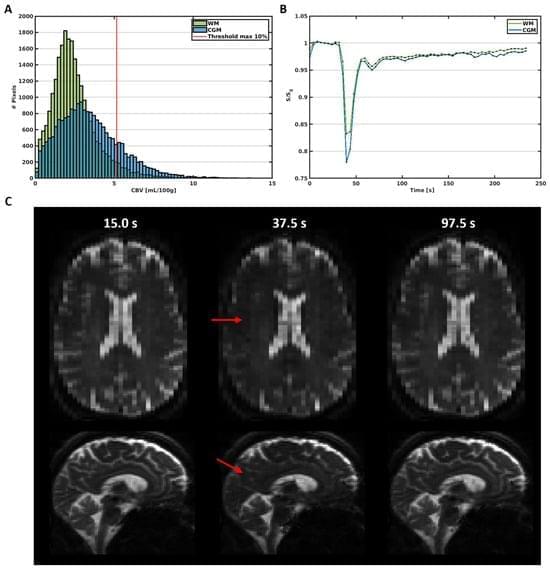

Perfusion measures of the total vasculature are commonly derived from gradient-echo (GE) dynamic susceptibility contrast (DSC) MR images acquired during the early passes of a contrast agent. Alternatively, spin-echo (SE) DSC can be used to achieve sensitivity specific to the capillary signal. Because ultra-high-field MRI improves the contrast-to-noise ratio, it makes this technique more appealing for studying cerebral microvascular physiology. This study therefore assessed the applicability of SE-DSC MRI at 7 T. Forty-one elderly adults underwent 7 T MRI using a multi-slice SE-EPI DSC sequence. Cerebral blood volume (CBV) and cerebral blood flow (CBF) were determined in the cortical grey matter (CGM) and white matter (WM) and compared with values from the literature, and their relation to age and sex was investigated.
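To make the two perfusion quantities more concrete, here is a minimal sketch of how CBV and CBF are conventionally estimated from a DSC time course: the signal is converted to a relaxation-rate change, relative CBV is the ratio of the tissue and arterial curve areas, and relative CBF is obtained by deconvolving the tissue curve with an arterial input function (AIF) using truncated singular value decomposition (SVD). This is a generic textbook-style sketch, not the study's pipeline. The function names, the 20% default truncation threshold, the noise-free synthetic curves, and the omission of scaling constants (hematocrit, tissue density) are all assumptions. For SE-DSC, the log-signal change reflects ΔR2 rather than the ΔR2* measured with GE-DSC.

```python
import numpy as np


def delta_r2(signal, s0, te):
    """Convert a DSC signal time course to a relaxation-rate change (1/s).

    Assumes the mono-exponential relation S(t) = S0 * exp(-TE * dR2(t)),
    where S0 is the pre-contrast baseline and TE the echo time in seconds.
    """
    signal = np.asarray(signal, dtype=float)
    return -np.log(signal / s0) / te


def curve_area(curve, dt):
    """Trapezoidal area under a uniformly sampled curve."""
    curve = np.asarray(curve, dtype=float)
    return dt * (curve.sum() - 0.5 * (curve[0] + curve[-1]))


def relative_cbv(tissue, aif, dt):
    """Relative CBV: ratio of tissue to arterial curve areas.

    Proportionality constants (hematocrit, tissue density) are omitted.
    """
    return curve_area(tissue, dt) / curve_area(aif, dt)


def relative_cbf(tissue, aif, dt, threshold=0.2):
    """Relative CBF by truncated-SVD deconvolution.

    Models tissue(t_i) = dt * sum_{j<=i} aif(t_i - t_j) * k(t_j), where
    k(t) = CBF * R(t) is the flow-scaled residue function. Because R(0) = 1,
    CBF is estimated as max k(t).
    """
    aif = np.asarray(aif, dtype=float)
    tissue = np.asarray(tissue, dtype=float)
    n = aif.size
    # Lower-triangular convolution matrix: a[i, j] = dt * aif[i - j] for j <= i
    a = np.zeros((n, n))
    for i in range(n):
        a[i, : i + 1] = dt * aif[i::-1]
    u, s, vt = np.linalg.svd(a)
    # Drop singular values below threshold * s_max to limit noise amplification
    s_inv = np.zeros_like(s)
    keep = s > threshold * s.max()
    s_inv[keep] = 1.0 / s[keep]
    k = vt.T @ (s_inv * (u.T @ tissue))
    return float(k.max())


# Usage with synthetic, noise-free curves (all values hypothetical):
dt = 0.1                                  # sampling interval, s
t = np.arange(0.0, 30.0, dt)
aif = np.exp(-t / 2.0)                    # toy arterial Delta R2 curve
residue = np.exp(-t / 4.0)                # toy residue function, MTT = 4 s
tissue = 0.05 * dt * np.convolve(aif, residue)[: t.size]  # true CBF = 0.05
print(relative_cbv(tissue, aif, dt))      # approximately CBF * MTT = 0.2
print(relative_cbf(tissue, aif, dt, threshold=0.01))
```

The truncation threshold trades bias for noise robustness: with noisy data, keeping very small singular values amplifies noise in the deconvolved residue function, while truncating too aggressively tends to flatten it.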

The MIT researchers have spent the past few years developing the Synthetic Survival Control causal inference framework, which enables them to answer complex "when-if" questions in settings where answering them with the available data is statistically challenging. Their approach estimates when a target event will happen if a certain intervention is applied.

In this paper, the researchers investigated an aggressive cancer called nodal mature T-cell lymphoma, and whether a certain prognostic marker led to worse outcomes. The marker, TTR12, signifies that a patient relapsed within 12 months of initial therapy.

They applied their framework to estimate when a patient with TTR12 would die, and how that patient's survival trajectory would differ without this prognostic marker.
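For orientation, the sketch below computes the kind of survival trajectory these "when" questions concern, using the classical Kaplan-Meier estimator. It is not the Synthetic Survival Control framework: comparing Kaplan-Meier curves for patients with and without TTR12 is a purely descriptive, non-causal baseline that does not adjust for confounding, which is the gap a causal framework is meant to close. The function names and the toy cohorts are hypothetical.

```python
import numpy as np


def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate.

    times  : follow-up time for each patient (e.g., months)
    events : 1 if death was observed at that time, 0 if censored
    Returns the distinct event times and S(t) just after each of them.
    """
    times = np.asarray(times, dtype=float)
    events = np.asarray(events, dtype=int)
    event_times = np.unique(times[events == 1])
    survival = np.empty(event_times.size)
    s = 1.0
    for idx, t in enumerate(event_times):
        at_risk = np.sum(times >= t)                   # still under observation
        deaths = np.sum((times == t) & (events == 1))  # deaths at exactly t
        s *= 1.0 - deaths / at_risk
        survival[idx] = s
    return event_times, survival


def median_survival(event_times, survival):
    """First event time at which S(t) drops to 0.5 or below (inf if never)."""
    below = np.nonzero(survival <= 0.5)[0]
    return float(event_times[below[0]]) if below.size else float("inf")


# Hypothetical toy cohorts: (months of follow-up, death indicator)
ttr12_times, ttr12_events = [3, 5, 6, 8, 10, 14], [1, 1, 0, 1, 1, 0]
other_times, other_events = [6, 12, 18, 24, 30, 36], [1, 0, 1, 0, 1, 0]
for label, tt, ev in [("TTR12", ttr12_times, ttr12_events),
                      ("no TTR12", other_times, other_events)]:
    et, s = kaplan_meier(tt, ev)
    print(label, median_survival(et, s))
```

Censored patients (event indicator 0) still count in the at-risk set up to their last follow-up, which is what distinguishes this estimator from simply counting deaths.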

Recent technological and scientific advances have opened new possibilities for neuroscience research, which is in turn leading to interesting new discoveries. Over the past few years, many groups of neuroscientists worldwide have been trying to map the structure of the brain and its underlying regions with increasing precision, while also probing their involvement in specific mental functions.

Because mapping the human brain in detail is often challenging and resource-intensive, many studies focus on other mammals, particularly mice and other rodents. Most mouse brain atlases delineated to date map the density of neurons or other brain cells (i.e., how many cells are packed into specific parts of the brain). Far fewer studies have tried to map the shapes of neurons in the mouse brain and the interactions between them.

Researchers at Fudan University and Southeast University recently set out to map dendrites (i.e., the branch-like extensions through which neurons receive signals from other cells) in the mouse brain. Their paper, published in Nature Neuroscience, unveils groups of structures in the mouse brain, known as microenvironments, that influence how neurons function and connect to other neurons.

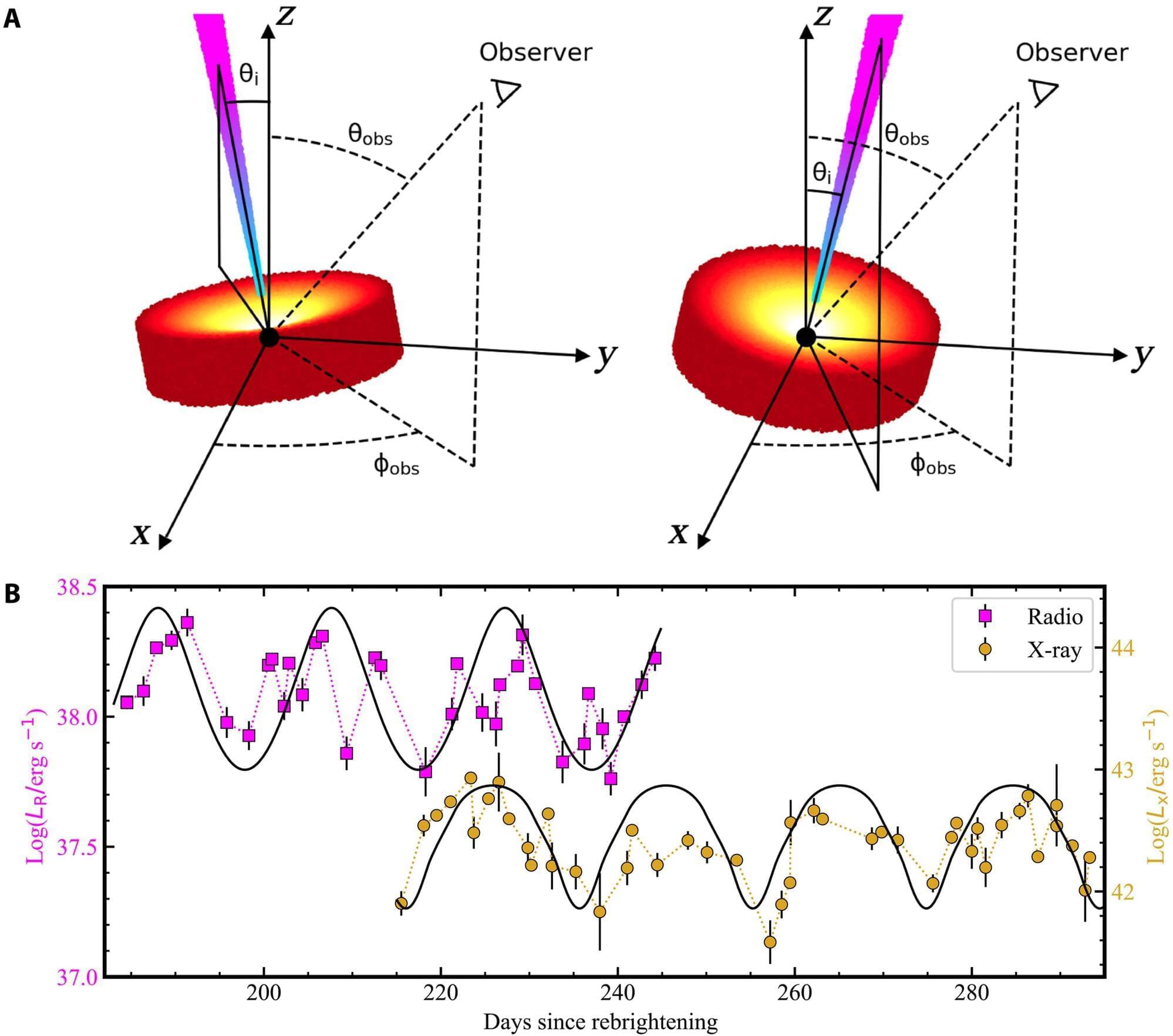

The cosmos has served up a gift for a group of scientists who have been searching for one of the most elusive phenomena in the night sky. Their study, presented in Science Advances, reports on the very first observations of a swirling vortex in spacetime caused by a rapidly rotating black hole.

The process, known as Lense-Thirring precession or frame-dragging, describes how a rotating black hole twists the spacetime around it, dragging nearby objects such as stars along and causing their orbits to wobble.
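In the weak-field limit, the orbit-averaged Lense-Thirring precession rate of a circular orbit of radius $r$ around a body with angular momentum $J$ is $\Omega_{\mathrm{LT}} = 2GJ/(c^2 r^3)$; for a black hole of mass $M$ and dimensionless spin $a_*$, $J = a_* G M^2 / c$. The sketch below evaluates this textbook approximation to show how steeply the effect falls off with distance (as $r^{-3}$). It is an order-of-magnitude illustration only: the weak-field formula becomes inaccurate close to the horizon, and the example mass, spin, and radii are hypothetical, not values from the study.

```python
import math

# Physical constants (SI units, rounded)
G = 6.674e-11     # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8       # speed of light, m/s
M_SUN = 1.989e30  # solar mass, kg


def lense_thirring_rate(mass_kg: float, spin: float, radius_m: float) -> float:
    """Weak-field, orbit-averaged Lense-Thirring precession rate (rad/s).

    Omega_LT = 2 G J / (c^2 r^3), with J = spin * G M^2 / c for a black hole
    of dimensionless spin 0 <= spin <= 1 and a circular orbit of radius r.
    """
    if not 0.0 <= spin <= 1.0:
        raise ValueError("spin must lie in [0, 1]")
    angular_momentum = spin * G * mass_kg**2 / C
    return 2.0 * G * angular_momentum / (C**2 * radius_m**3)


def precession_period_days(mass_kg: float, spin: float, radius_m: float) -> float:
    """Time for one full precession cycle, 2*pi / Omega_LT, in days."""
    return 2.0 * math.pi / lense_thirring_rate(mass_kg, spin, radius_m) / 86400.0


# Usage with hypothetical values: a 10^6 solar-mass black hole with spin 0.9,
# evaluated at several multiples of the gravitational radius r_g = GM/c^2.
mass = 1e6 * M_SUN
r_g = G * mass / C**2
for x in (50, 100, 200):
    print(x, precession_period_days(mass, 0.9, x * r_g))
```

Doubling the orbital radius lengthens the precession period eightfold, which is why frame-dragging signatures are sought in material orbiting close to the black hole.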

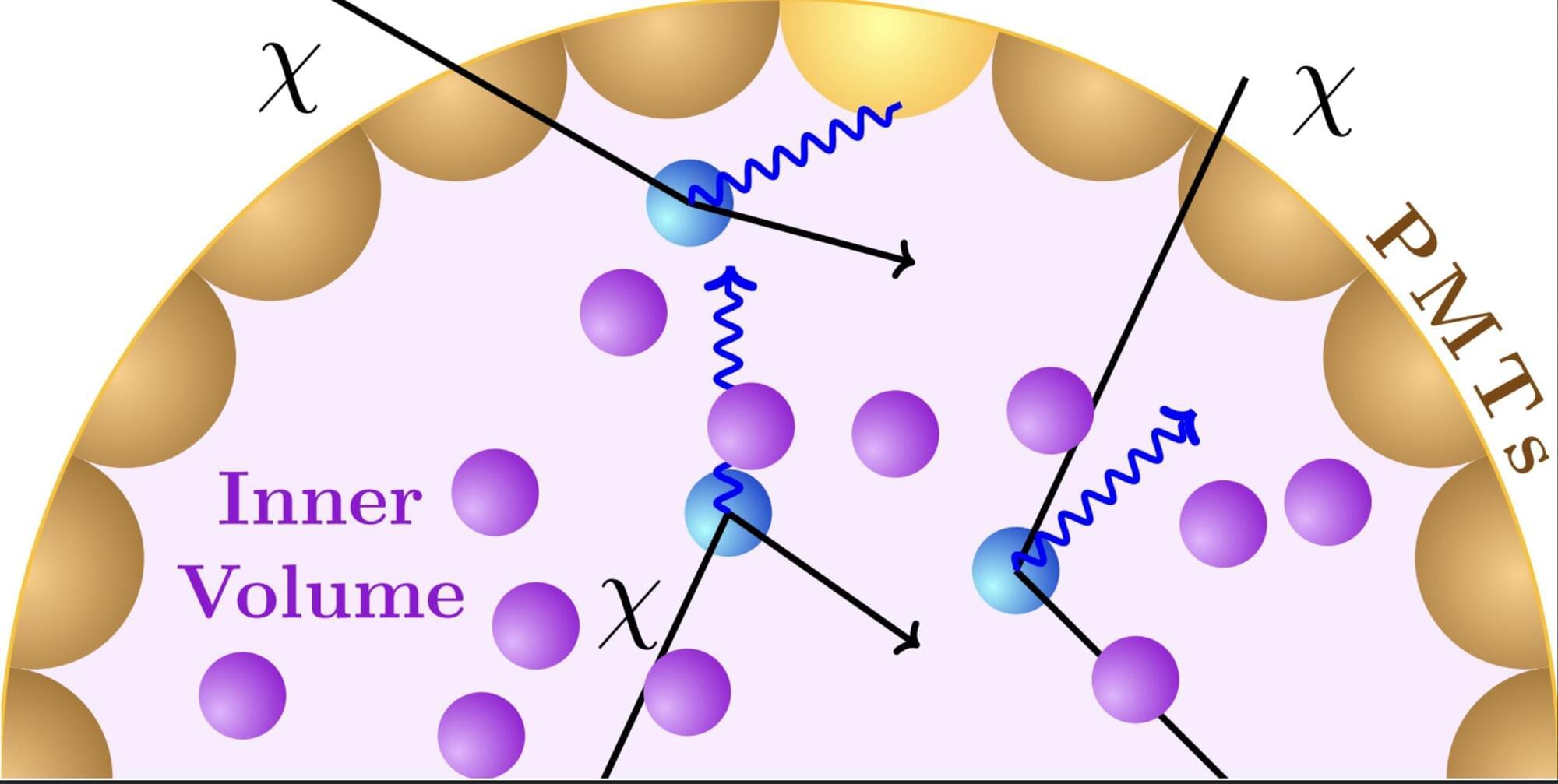

Dark matter is an elusive type of matter that does not emit, reflect or absorb light, yet is estimated to account for most of the universe’s mass. Over the past decades, many physicists worldwide have been trying to detect this type of matter or signals associated with its presence, employing various approaches and technologies.

Because dark matter has never been directly detected, its composition and properties remain mostly unknown. Initially, dark matter searches focused on detecting relatively heavy particles. More recently, however, physicists have also started looking for lighter particles with masses below one giga-electron-volt (GeV), which would be comparable to or lighter than a proton (about 0.94 GeV).

Researchers at SLAC National Accelerator Laboratory and The Ohio State University recently showed that signatures of these sub-GeV dark matter particles could also be picked up by neutrino observatories, large underground detectors originally designed to study neutrinos (i.e., very light particles that interact only weakly with ordinary matter).