A battery built for satellites brings grid-scale storage down to Earth.

In what may be one of Earth’s craziest forms of mimicry, researchers have discovered a new species of rove beetle that grows a termite puppet on its back to fool real termites into feeding it. The replica is so precise that it even mirrors the termites’ distinct body segments and has three pairs of pseudoappendages that resemble antennae and legs.

Rove beetles (family Staphylinidae) are already infamous in the animal kingdom as masters of disguise. Some, for example, have evolved to look like army ants, allowing the beetles to march alongside them and feed on their eggs and young.

The new beetle species (Austrospirachtha carrijoi)—found beneath the soil in Australia’s Northern Territory—emulates a termite by enlarging its abdomen, a phenomenon known as physogastry. Evolution has reshaped this body part into a highly realistic replica of a termite (as seen above), head and all, which rides on top of the rest of the beetle’s body. The beetle’s real, much smaller head peeks out from beneath its termite disguise, the authors report this month in the journal.

“I would suspect if we can get off this planet and find somewhere more habitable, that would be more preferable.”

According to a new supercharged climate model, the world’s landmasses will eventually merge into one giant supercontinent, and if humans manage to survive the shift, we will become like the inhabitants of Arrakis, the desert planet at the heart of the “Dune” series.

A new study led by researchers at the University of Bristol and published in the journal Nature Geoscience predicts that over the next 250 million years, the continents will shift to form what the researchers are calling “Pangea Ultima,” an uber-hot supercontinent that will be inhospitable to most mammals because of the very forces that create it.

Specifically, the environmental and geophysical researchers predict in their study that volcanic activity driven by the tectonic shifts, and the resulting rise in carbon dioxide to potentially more than double Earth’s current levels, would leave most of the land on Pangea Ultima “barren,” according to a Nature summary of the study.

This is a risky bet, given the limitations of the technology. Tech companies have not solved some of the persistent problems with AI language models, such as their propensity to make things up or “hallucinate.” But what concerns me the most is that they are a security and privacy disaster, as I wrote earlier this year. Tech companies are putting this deeply flawed tech in the hands of millions of people and giving AI models access to sensitive information such as those people’s emails, calendars, and private messages. In doing so, they are making us all vulnerable to scams, phishing, and hacks on a massive scale.

I’ve covered the significant security problems with AI language models before. Now that AI assistants have access to personal information and can simultaneously browse the web, they are particularly prone to a type of attack called indirect prompt injection. It’s ridiculously easy to execute, and there is no known fix.

In an indirect prompt injection attack, a third party “alters a website by adding hidden text that is meant to change the AI’s behavior,” as I wrote in April. “Attackers could use social media or email to direct users to websites with these secret prompts. Once that happens, the AI system could be manipulated to let the attacker try to extract people’s credit card information, for example.” With this new generation of AI models plugged into social media and emails, the opportunities for hackers are endless.

Sure, you could just stick a ChatGPT sidebar in your browser. But what do we really want AI to do for us as we use the web? That’s the much harder question.

At some point, if you’re a company doing pretty much anything in the year 2023, you have to have an AI strategy. It’s just business. You can make a ChatGPT plug-in. You can do a sidebar. You can bet your entire trillion-dollar company on AI being the future of how everyone does everything. But you have to do something.

The last one of these was crypto and the blockchain a couple of years ago, and Josh Miller, the CEO of The Browser Company, which makes the popular new Arc browser, says he’s…

AI is coming for your online life… but nobody’s exactly sure how it’s going to work.

On the stand in US v. Google, the Microsoft CEO said he’d do just about anything to make Bing better. Google’s lawyers said he should have been doing that for decades.

Microsoft’s Bing search engine is not as good as Google. Believe it or not, it seems nobody — not even Microsoft CEO Satya Nadella — disputes that fact. But over hours of contentious testimony from Nadella during the landmark US v. Google.

Nadella, in a dark blue suit, took the stand early Monday morning after a few minutes of scheduling updates and a delay long enough that Judge Amit Mehta jokingly asked, “Mr. Nadella didn’t go back to Seattle, did…”

Microsoft was prepared to lose billions on an Apple deal.

Zoom’s selling a cheaper AI package than Microsoft 365 Copilot and Google Duet AI, and soon it can plug into a new ‘modular workspace.’

At Zoomtopia 2023 today, Zoom announced Zoom Docs, a collaboration-focused “modular workspace” that integrates the company’s Zoom AI Companion for generating new content or populating a doc from other sources — you know the drill by now.

Along with the Mail and Calendar offerings launched during last year’s event, Zoom Docs is another step toward a full office-suite alternative to Google Workspace and Microsoft 365, both of which have started to integrate AI-powered tools of their own, dubbed Duet AI and Copilot, respectively. The company says it will be widely…

Zoom’s new tool expands beyond the Zoom meeting.

Last week at its annual CloudWorld event in Las Vegas, Oracle showed that it, too, is going full throttle on generative AI, and that it has no plans to cower before its biggest rival, Amazon Web Services (AWS).

Before we get into the CloudWorld event itself, it’s important to take a small step back to September 14, when the company announced a new partnership with Microsoft that puts Oracle database services on Oracle Cloud Infrastructure (OCI) in Microsoft Azure. The new Oracle Database@Azure makes Microsoft and Oracle the only two hyperscalers to offer OCI to help simplify cloud migration, deployment, and management. Especially when you consider that the partners have achieved rate card and…

This year at Oracle CloudWorld, the company advanced its generative AI strategy across its cloud infrastructure, apps, and platforms. Here’s a look at this year’s announcements.

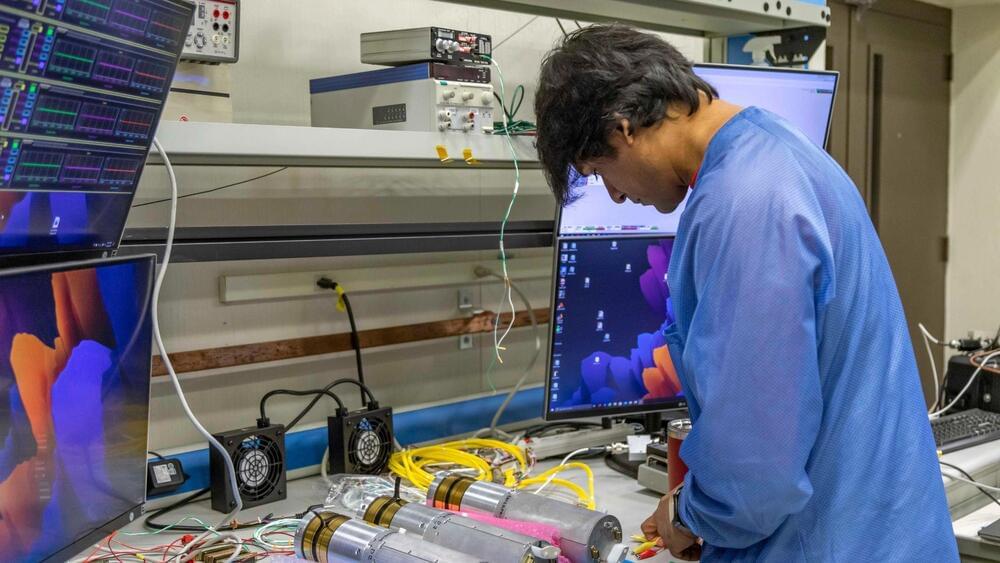

Three rockets will launch from the White Sands Missile Range in New Mexico to study the atmosphere just outside the path of the annular solar eclipse on October 14, 2023.

In order to gain a better understanding of the Earth’s atmospheric dynamics and the effects of solar phenomena on our planet, NASA is launching a sounding rocket mission called Atmospheric Perturbations around the Eclipse Path (APEP).

The three rockets will launch from the White Sands Missile Range in New Mexico and fly to positions just outside the path of annularity, where the Moon directly aligns with the Sun during the annular solar eclipse on October 14, 2023.

NASA’s mission plans to investigate how the sudden drop in sunlight during the eclipse affects the ionosphere, using instruments on the rockets that measure electric and magnetic fields, density, and temperature changes.

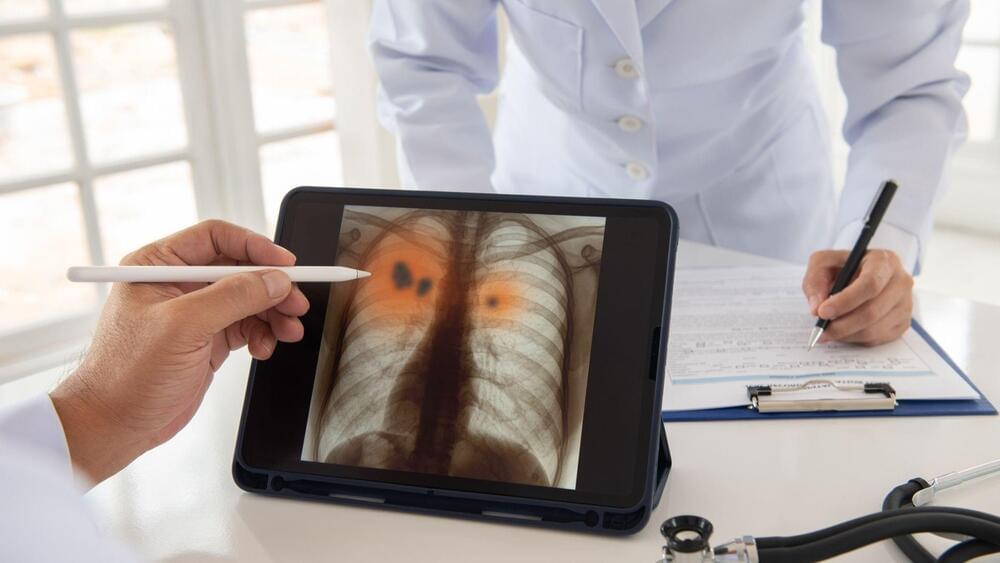

Lung cancer screening is crucial for reducing deaths from the disease, but the government can’t scan everyone’s lungs. Here is an AI that identifies the people who actually need screening.

Lung cancer is the deadliest cancer type, killing over a million people annually across the globe. The disease is responsible for the highest number of cancer deaths in both men and women in the US.

In fact, the death toll from lung cancer among women and men is nearly triple that of breast cancer and prostate cancer, respectively.