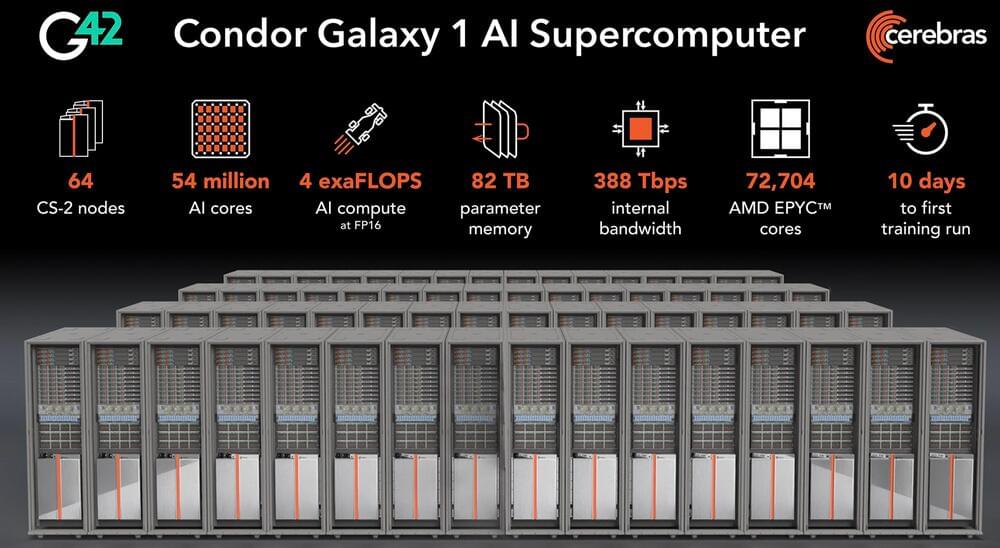

The only AI hardware startup to realize revenue exceeding $100M has finished the first phase of the Condor Galaxy 1 AI supercomputer with partner G42 of the UAE. Other Cerebras customers are sharing their CS-2 results at Supercomputing '23, building momentum for the inventor of wafer-scale computing. This company is on a tear.

Four short months ago, Cerebras announced the most significant deal any AI startup has yet assembled: a partnership with G42 (Group42), an artificial intelligence and cloud computing company. The eventual 256 CS-2 wafer-scale nodes, delivering 36 exaflops of AI performance, will form one of the world's largest AI supercomputers, if not the largest.

Cerebras has now finished the first data center implementation and started on the second. The two companies are moving fast to capitalize on the gold rush to offer Large Language Model services to researchers and enterprises, a market projected to reach $70B by 2028. They are doing so while NVIDIA H100 GPUs remain difficult to obtain, a shortage that creates an opening for Cerebras. In addition, Cerebras recently announced that, together with Core42, it has released Jais30B, the largest Arabic language model, built using the CS-2. The CS-2 is a platform designed to make the development of massive AI models accessible by eliminating the need to decompose and distribute the problem across many devices.