On the way to rejuvenation using AI and multidisciplinary knowledge!

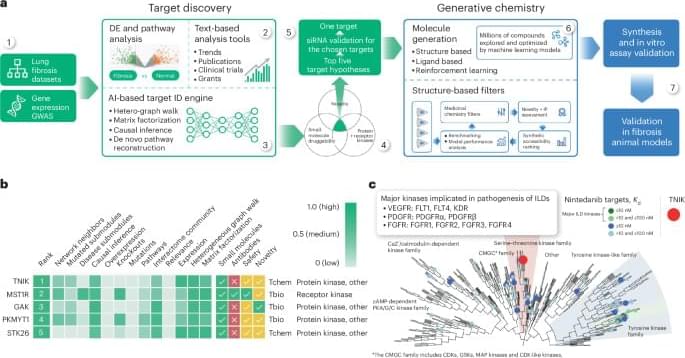

An AI-generated small-molecule inhibitor treats fibrosis in vivo and in phase I clinical trials.

A team of engineers, physicists, and data scientists from Princeton University and the Princeton Plasma Physics Laboratory (PPPL) have used artificial intelligence (AI) to predict—and then avoid—the formation of a specific type of plasma instability in magnetic confinement fusion tokamaks. The researchers built and trained a model using past experimental data from operations at the DIII-D National Fusion Facility in San Diego, Calif., before proving through real-time experiments that their model could forecast so-called tearing mode instabilities up to 300 milliseconds in advance—enough time for an AI controller to adjust operating parameters and avoid a tear in the plasma that could potentially end the fusion reaction.

DENVER—(BUSINESS WIRE)—Palantir Technologies Inc. (NYSE: PLTR) today announced that the Army Contracting Command – Aberdeen Proving Ground (ACC-APG) has awarded Palantir USG, Inc. — a wholly-owned subsidiary of Palantir Technologies Inc. — a prime agreement for the development and delivery of the Tactical Intelligence Targeting Access Node (TITAN) ground station system, the Army’s next-generation deep-sensing capability enabled by artificial intelligence and machine learning (AI/ML). The agreement, valued at $178.4 million, covers the development of 10 TITAN prototypes, including five Advanced and five Basic variants, as well as the integration of new critical technologies and the transition to fielding.

“This award demonstrates the Army’s leadership in acquiring and fielding the emerging technologies needed to bolster U.S. defense in this era of software-defined warfare. Building on Palantir’s years of experience bringing AI-enabled capabilities to warfighters, Palantir is now proud to deliver the Army’s first AI-defined vehicle.”

TITAN is a ground station that has access to Space, High Altitude, Aerial, and Terrestrial sensors to provide actionable targeting information for enhanced mission command and long range precision fires. Palantir’s TITAN solution is designed to maximize usability for Soldiers, incorporating tangible feedback and insights from Soldier touch points at every step of the development and configuration process. Building off Palantir’s prior work delivering AI capabilities for the warfighter, Palantir is deploying the Army’s first AI-defined vehicle.

Couple things. Mr Johnson is self aware and has a sense of humor about it. And another argument you can use against the overpopulation people is that there is currently one acre of habitable land available for every person on the planet.

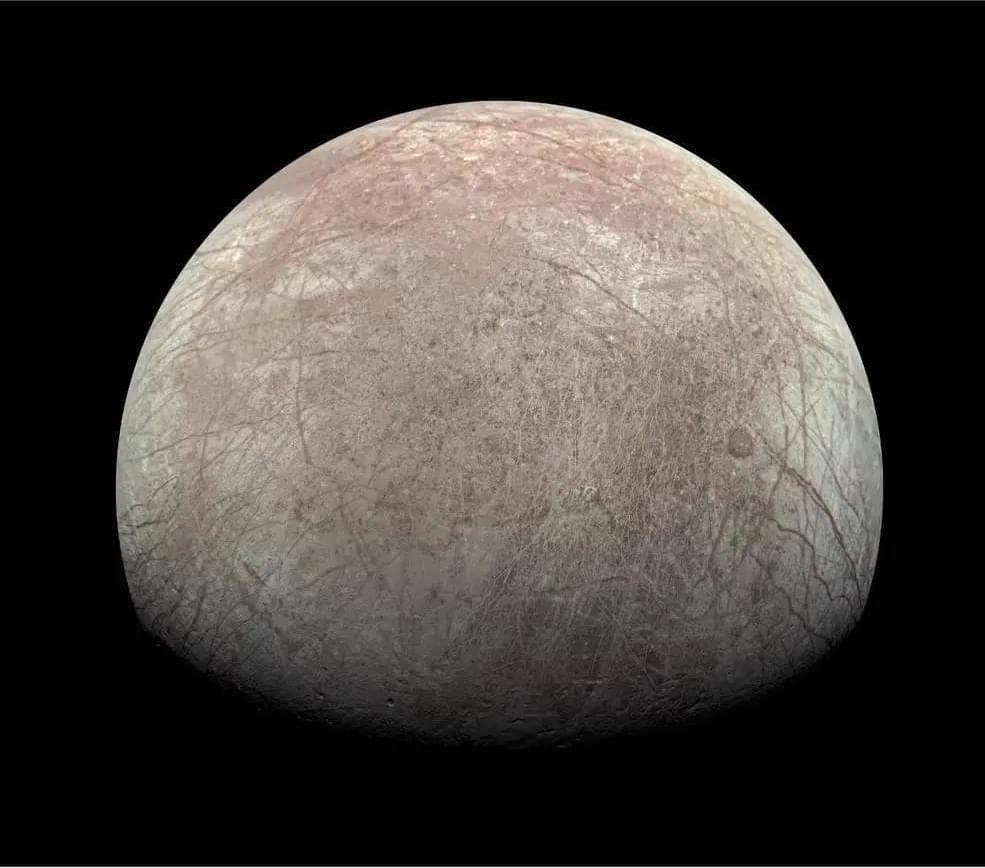

How much oxygen does Jupiter’s moon, Europa, produce, and what can this teach us about its subsurface liquid water ocean? This is what a study published today in Nature Astronomy hopes to address as an international team of researchers investigated how charged particles break apart the surface ice resulting in hydrogen and oxygen that feed Europa’s extremely thin atmosphere. This study holds the potential to help scientists better understand the geologic and biochemical processes on Europa, along with gaining greater insight into the conditions necessary for finding life beyond Earth.

For the study, the researchers used the Jovian Auroral Distributions Experiment (JADE) instrument onboard NASA’s Juno spacecraft to collect data on the amount of oxygen being discharged from Europa’s icy surface due to charged particles emanating from Jupiter’s massive magnetic field. In the end, the researchers found that oxygen production resulting from these charged particles interacting with the icy surface was approximately 26 pounds per second (12 kilograms per second), a much narrower figure than previous estimates, which ranged from a few pounds per second to over 2,000 pounds per second.

“Europa is like an ice ball slowly losing its water in a flowing stream. Except, in this case, the stream is a fluid of ionized particles swept around Jupiter by its extraordinary magnetic field,” said Dr. Jamey Szalay, who is a research scholar at Princeton University, a scientist on JADE, and lead author of the study. “When these ionized particles impact Europa, they break up the water-ice molecule by molecule on the surface to produce hydrogen and oxygen. In a way, the entire ice shell is being continuously eroded by waves of charged particles washing up upon it.”

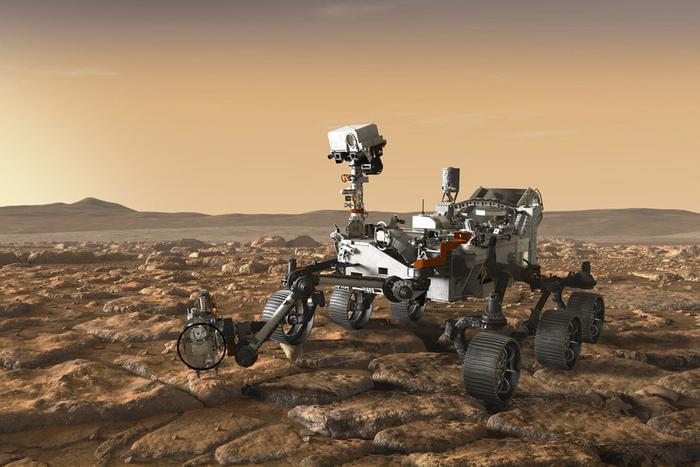

Can the ancient past of Mars be unlocked from knowing the orientation of rocks? This is what a study published today in Earth and Space Science hopes to address as an international team of researchers led by the Massachusetts Institute of Technology (MIT) investigated bedrock samples that were drilled by NASA’s Perseverance rover in Jezero Crater on Mars to ascertain the original orientation of the rocks prior to the drilling, with the orientation potentially providing clues about Mars’ magnetic field history and the conditions that existed on ancient Mars.

What makes this study unique is that it marks the first time such a method has been used on another planet. Additionally, while orienting 3D objects is common on Earth, Perseverance is not equipped to perform such tasks. Therefore, the orientation had to be determined from the angles of the rover’s arm together with identifiers from the ground. The team notes that this method could also be applied to future in-situ studies.

“The orientation of rocks can tell you something about any magnetic field that may have existed on the planet,” said Dr. Benjamin Weiss, who is a professor of planetary sciences at MIT and lead author of the study. “You can also study how water and lava flowed on the planet, the direction of the ancient wind, and tectonic processes, like what was uplifted and what sunk. So, it’s a dream to be able to orient bedrock on another planet, because it’s going to open up so many scientific investigations.”

“I do think science fiction is responsive to discoveries in science. I think it’s sort of reflective of what was going on in science at the time that it was written,” said Dr. Emma Johanna Puranen.

Can science fiction influence science facts, specifically pertaining to exoplanets? This is what a recent study published in the Journal of Science Communication hopes to address as an international team of researchers led by the St Andrews Centre for Exoplanet Science investigated how new scientific findings influence science fiction. This study holds the potential to help researchers and the public better understand the intricate connection between science fiction and science facts, specifically pertaining to exoplanets, and how this link can influence science communication going forward.

For the study, the team conducted a quantitative analysis using a Bayesian network, a common statistical model, on 142 science fiction projects, including Star Trek, Star Wars, Dune, and Solaris, to ascertain how exoplanets are depicted in these projects. After running the Bayesian model, the researchers concluded that there is a trend towards scientific discoveries influencing science fiction. Additionally, these findings could influence science communication in terms of how exoplanets are depicted in science fiction going forward.

Examples of exoplanet depictions in science fiction include a myriad of Earth-like planets where humans can easily live and colonize. While this has been a staple of science fiction for many decades, specifically in Star Trek and Star Wars, this notion began to change once exoplanets started being discovered in the 1990s, which quickly revealed the large number of non-Earth-like exoplanets that populate our Milky Way Galaxy.

Can we map the brain to show its behavior patterns in both health and disease? This is what a recent study published in Nature Methods hopes to address as a team of researchers at the University of Illinois Urbana-Champaign used a $3 million grant obtained from the National Institute on Aging to develop a novel approach to mapping brain behavior in both healthy and diseased states. This study holds the potential to help researchers, medical professionals, and patients better understand how to treat diseases.

“If you look at the brain chemically, it’s like a soup with a bunch of ingredients,” said Dr. Fan Lam, who is an assistant professor of bioengineering at the University of Illinois Urbana-Champaign and a co-author on the study. “Understanding the biochemistry of the brain, how it organizes spatiotemporally, and how those chemical reactions support computing is critical to having a better idea of how the brain functions in health as well as during disease.”

For the study, the researchers used a type of technology called spatial omics and combined it with deep learning to produce 3D datasets that reveal the brain’s myriad characteristics down to the molecular level. Through this, the team has developed a novel method for monitoring brain activity in both health and disease, including the ability to identify complex neurological diseases.