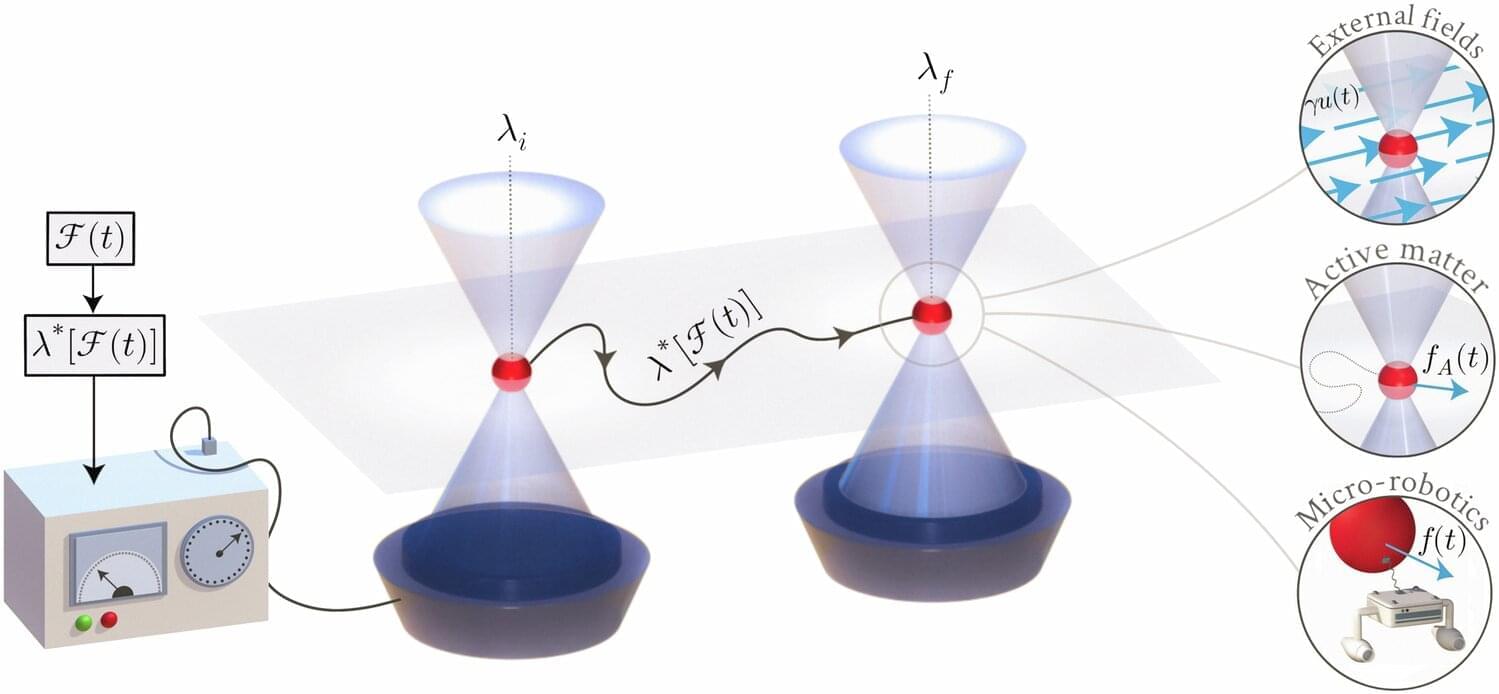

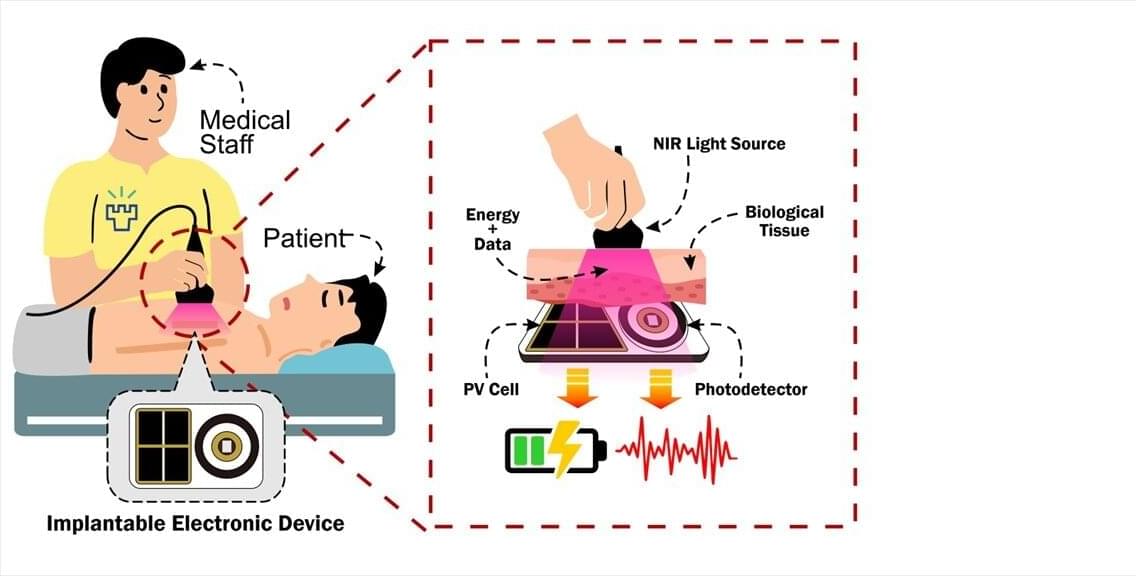

A new study from a research team at the Center for Wireless Communications Network and Systems (CWC-NS) at the University of Oulu introduces an approach that extends the use of near-infrared (NIR) light beyond light therapy, using it to deliver wireless power and communication simultaneously to electronic implantable medical devices (IMDs). The team had previously demonstrated that wireless communication over NIR light is feasible; the new work adds wireless charging using the same light.

Published in Optics Continuum, the research outlines an approach that promises to enhance the performance and durability of IMDs while providing communication that is safer, more secure, more private, and free of radio interference. The paper, authored by Syifaul Fuada, Mariella Särestöniemi, and Marcos Katz at the CWC-NS, was designated an Editor’s Pick, a distinction given to articles of excellent scientific quality that are representative of the work occurring in a specific field.

The paper is a small part of Syifaul Fuada’s doctoral research. “This is the initial step that could open other ideas to advance the proposed approach,” Fuada says.