The study used a specialized photon source to transmit, store and retrieve quantum data, which are major components of quantum data transmission.

Traveling for centuries at a small percentage of the speed of light in a multi-generation space ark, a hollowed-out asteroid. The world is hollow, and I have touched the sky.

Video for “Planet Caravan”, a song performed by Black Sabbath.

New channel: https://www.youtube.com/channel/UCqjB_4bCKg80mnY5TFuN9eg

I do not own the rights.

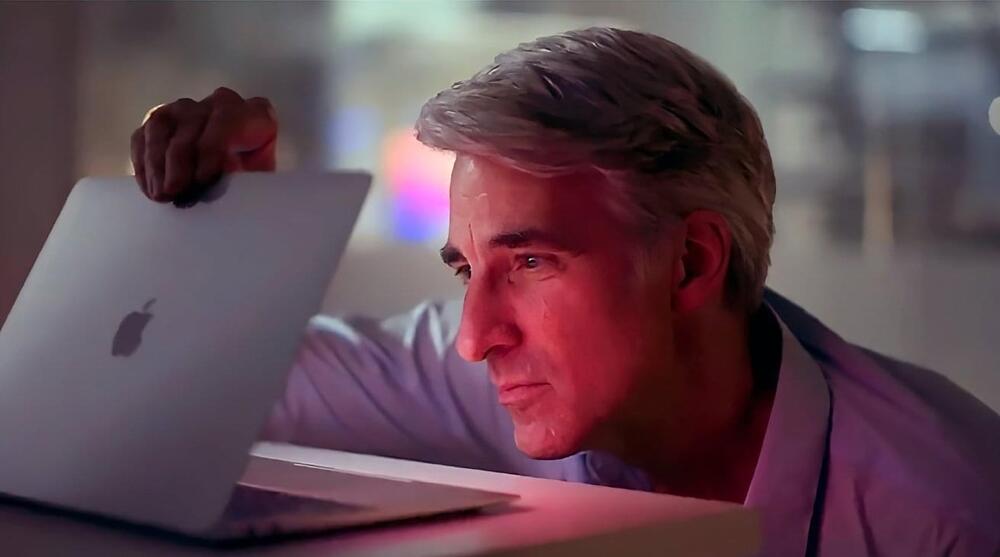

Despite years of work on AI systems, Apple did not truly get behind the idea until Christmas 2022, when Craig Federighi played with Copilot.

The conventional wisdom is that Apple is far behind the rest of the technology industry in its use and deployment of AI. That has seemed nonsensical since Apple has had Siri for almost 15 years, and a head of AI since 2018.

However, a new report from the Wall Street Journal claims that Apple may indeed be substantially behind, despite all these years of work on machine learning and despite hiring ex-Google AI chief John Giannandrea. Giannandrea and his team have reportedly struggled to fit in at Apple and to get AI implemented.

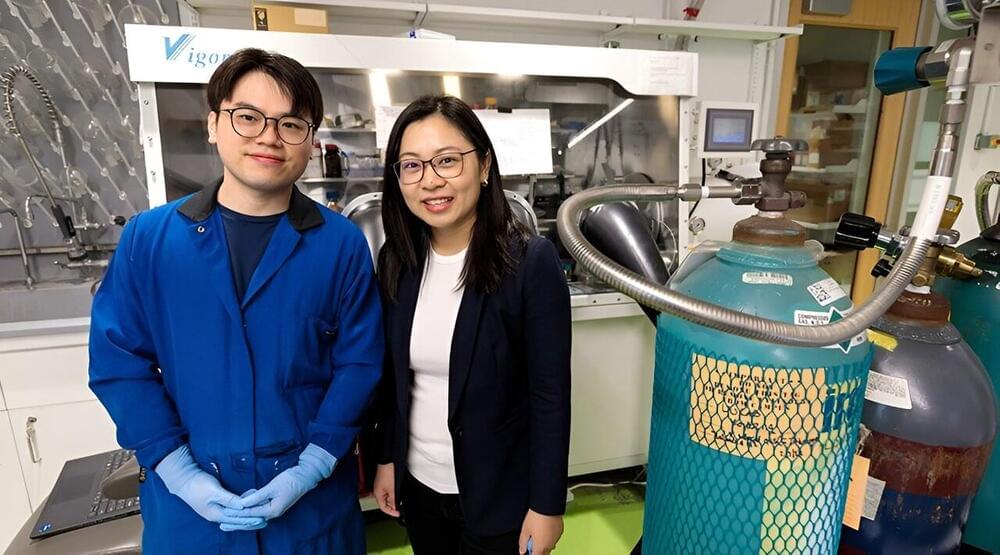

As the electric vehicle market booms, the demand for lithium (the mineral required for lithium-ion batteries) has also soared. Global lithium production has more than tripled in the last decade. But current methods of extracting lithium from rock ores or brines are slow and come with high energy demands and environmental costs. They also require highly concentrated lithium sources, which are found in only a few countries.

This is a series of videos I decided to make on Georg Cantor’s groundbreaking works, published in 1895 and 1897, titled Contributions to the Founding of the Theory of Transfinite Numbers.

This work is arguably among the most influential and significant in the history of mathematics: Cantor’s transfinite numbers changed the face of mathematics completely (although not to everyone’s pleasure). The impact of Cantor’s work can hardly be overstated.

In this series of videos I will go through the definitions of aggregates, cardinal numbers, simply ordered aggregates, ordinal types and ordinal numbers, among others. I will also cover some of the properties of these objects, including the arithmetic operations on cardinal numbers and ordinal types, culminating in the arithmetic of the ordinal numbers of the second number class.
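The following small illustration shows why cardinal arithmetic and ordinal arithmetic need separate treatment. It is a sketch in modern notation: the union sign and the specific examples are illustrative and not quoted from Cantor. Cantor writes $\overline{\overline{M}}$ for the cardinal number of an aggregate $M$ and $\overline{M}$ for its ordinal type. Cardinal addition depends only on the sizes of disjoint aggregates, so it is commutative. Adding ordinal types means placing one ordered aggregate after another, so the order matters.

$$
\overline{\overline{M}} + \overline{\overline{N}} = \overline{\overline{M \cup N}} \quad (M \cap N = \varnothing), \qquad 1 + \aleph_0 = \aleph_0 + 1 = \aleph_0
$$

$$
1 + \omega = \omega, \qquad \omega + 1 > \omega
$$

Placing one extra element before the natural numbers $0, 1, 2, \dots$ simply shifts the sequence and leaves an aggregate of type $\omega$. Placing it after them creates a greatest element, which no aggregate of type $\omega$ has.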

Virgin Galactic is launching the final commercial flight of VSS Unity, its SpaceShipTwo spaceplane. This is VSS Unity’s 17th flight, after which the company plans to upgrade the vehicle.

The commercial crew on this mission is composed of a researcher affiliated with Axiom Space, two private Americans, and a private Italian. The Virgin Galactic crew on Unity will be Commander Nicola Pecile and pilot Jameel Janjua.

The ‘Galactic 07’ autonomous rack-mounted research payloads will include a Purdue University experiment designed to study propellant slosh in fuel tanks of maneuvering spacecraft, as well as a UC Berkeley payload testing a new type of 3D printing.

Expected Takeoff: 10:30 a.m. Eastern Time.

⚡ Become a member of NASASpaceflight’s channel for exclusive Discord access, fast-turnaround clips, and other exclusive benefits. Your support helps us continue our 24/7 coverage. ⚡

But beneath that wave of unreliable garbage, some amazing features emerge from using AI models. Apple has the chance to present itself as the adult in the room: a company committed to using AI for features that make its customers’ lives better, not competing to perform the best unreproducible magic trick on stage.

In doing so, it risks being seen as dowdy and behind. But if Apple can see beyond the latest tech-industry hype cycle (and it’s generally good at doing that), it can bet on iPhone users being more interested in real features than in impractical nonsense.

Historically, Apple has been a company with a very strong philosophy about new technologies: they should be applied to solving the problems of real people. Most tech companies have tended to get this backward. They take delivery of some whizzy new technology fresh off a manufacturer’s conveyor belt and shove it into a product. The result tends to be products that are solutions desperately searching for problems.