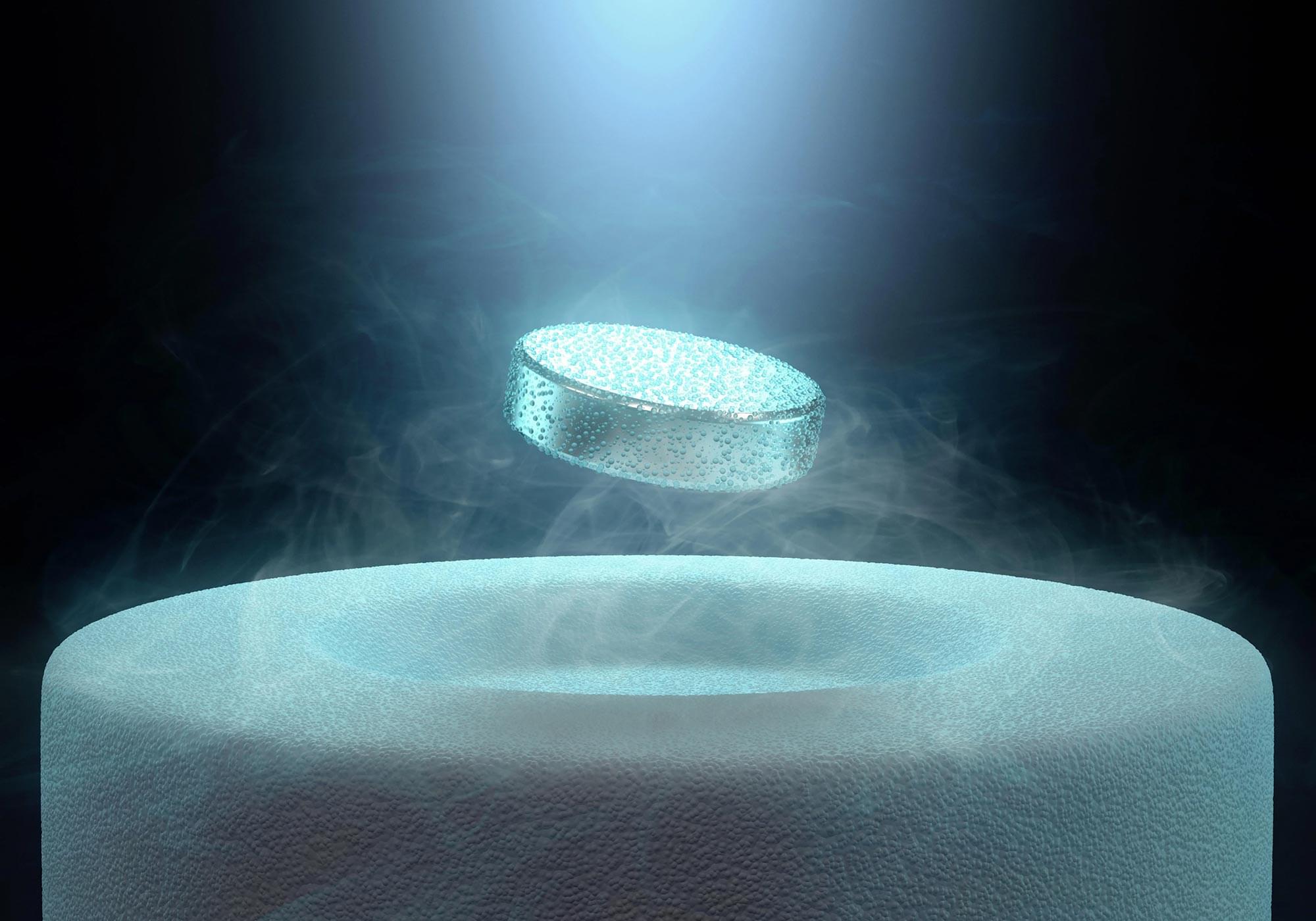

Superconductors are materials that allow electrical current to flow without any resistance, a property that typically appears only at extremely low temperatures. While most known superconductors follow established theoretical frameworks, strontium ruthenate, Sr₂RuO₄, has remained difficult to explain since researchers first identified its superconducting behavior in 1994.

The material is widely regarded as one of the purest and most thoroughly studied examples of unconventional superconductivity. Even so, scientists have not reached agreement on the exact nature of electron pairing in Sr₂RuO₄, including the symmetry and internal structure of the pairs, which are central to understanding how its superconductivity arises.