Whether it’s the smartphone in your pocket or the laptop on your desk, all current computing devices are based on electronic technology. But electronics has some inherent drawbacks. In particular, these devices necessarily generate a lot of heat, especially as their performance increases, and fabrication technologies are approaching the fundamental limits of what is physically possible.

As a result, researchers are exploring alternative ways to perform computation that can tackle these problems and, ideally, offer new functionality or features too.

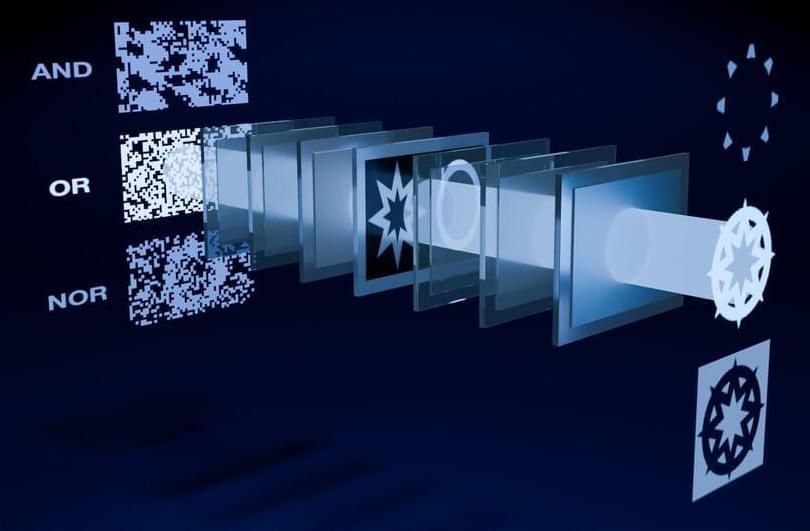

One possibility is optical computing, an idea that has existed for several decades but has yet to break through and become commercially viable.