NASA astronaut Donald Pettit took a photo of stars from the International Space Station in 2003. It’s no longer “possible” to take it now.

A pulse of increased seismicity started at around 10 a.m. Wednesday, and another pulse started at around 4 a.m. today. As of 8:45 a.m., Hawaiian Volcano Observatory officials reported that the second pulse was still ongoing.

According to the Hawaiian Volcano Observatory, the seismicity and elevated ground deformation rates suggest magma may be slowly moving out of the summit storage region. Additional seismic pulses or swarms may occur with little or no warning and result in either continued intrusion of magma or eruption of lava.

Check out the Space Time Merch Store: https://www.pbsspacetime.com/shop
Sign up on Patreon to get access to the Space Time Discord! https://www.patreon.com/pbssp…

In a recent study published in Neuron, researchers discovered that microglia, the brain’s immune cells, use tunneling nanotubes…

Scheiblich et al. uncover a novel mechanism by which microglia use tunneling nanotubes to connect with α-syn- or tau-burdened neurons, enabling transfer of these proteins to microglia for clearance. Microglia donate mitochondria to restore neuronal health, shedding light on new therapeutic strategies for neurodegenerative diseases.

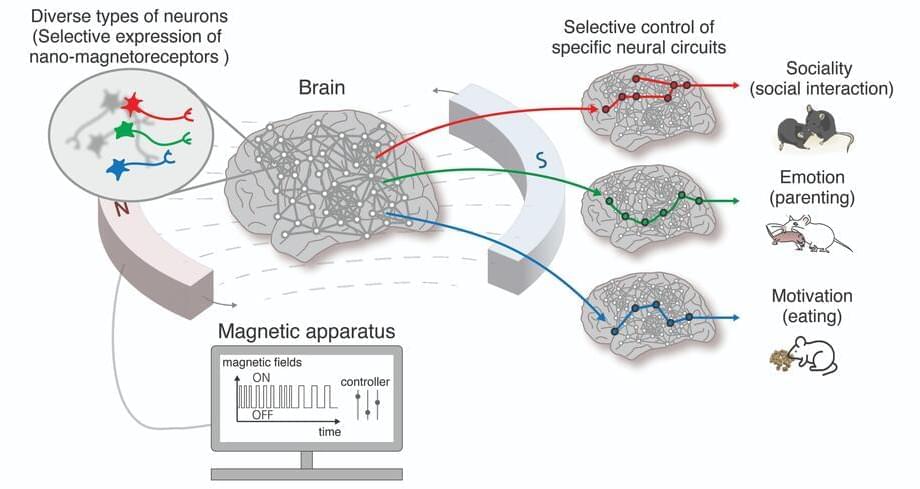

Nano-MIND Technology for Wireless Control of Brain Circuits with Potential to Modulate Emotions, Social Behaviors, and Appetite.

Researchers at the Center for Nanomedicine within the Institute for Basic Science (IBS) and Yonsei University in South Korea have unveiled a groundbreaking technology that can manipulate specific regions of the brain using magnetic fields, potentially unlocking the secrets of high-level brain functions such as cognition, emotion, and motivation. The team has developed the world’s first Nano-MIND (Magnetogenetic Interface for NeuroDynamics) technology, which allows for wireless, remote, and precise modulation of specific deep brain neural circuits using magnetism.

The human brain contains over 100 billion neurons interconnected in a complex network. Controlling the neural circuits is crucial for understanding higher brain functions like cognition, emotion, and social behavior, as well as identifying the causes of various brain disorders. Novel technology to control brain functions also has implications for advancing brain-computer interfaces (BCIs), such as those being developed by Neuralink, which aim to enable control of external devices through thought alone.

While magnetic fields have long been used in medical imaging due to their safety and ability to penetrate biological tissue, precisely controlling brain circuits with magnetic fields has been a significant challenge for scientists.

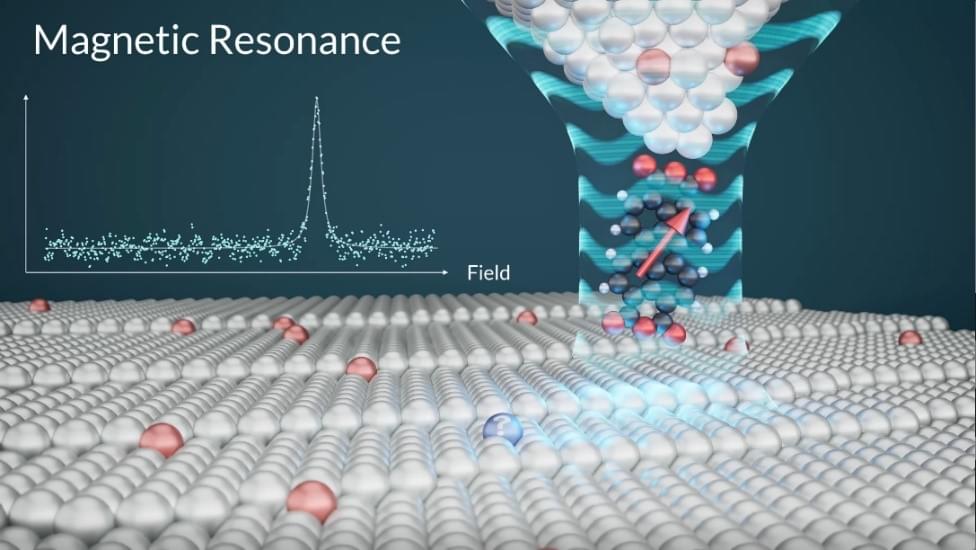

In a scientific breakthrough, an international research team from Korea’s IBS Center for Quantum Nanoscience (QNS) and Germany’s Forschungszentrum Jülich developed a quantum sensor capable of detecting minute magnetic fields at the atomic length scale. This pioneering work realizes a long-held dream of scientists: an MRI-like tool for quantum materials.

The research team utilized the expertise of bottom-up single-molecule fabrication from the Jülich group while conducting experiments at QNS, utilizing the Korean team’s leading-edge instrumentation and methodological know-how to develop the world’s first quantum sensor for the atomic world.

The diameter of an atom is a million times smaller than the thickest human hair. This makes it extremely challenging to visualize and precisely measure physical quantities like electric and magnetic fields emerging from atoms. To sense such weak fields from a single atom, the observing tool must be highly sensitive and as small as the atoms themselves.

In this thought-provoking exploration, we delve into the profound reflections of Edward Witten, a leading figure in theoretical physics. Join us as we navigate the complexities of dualities, the enigmatic nature of M-theory, and the intriguing concept of emergent space-time. Witten, the only physicist to win the prestigious Fields Medal, offers deep insights into the mathematical and physical mysteries that shape our understanding of reality. From the holographic principle to the elusive (2,0) theory, we uncover how these advanced theories interconnect and challenge our conventional perceptions. This journey is not just a deep dive into high-level physics but a philosophical quest to grasp the nature of existence itself. Read the full interview here: https://www.quantamagazine.org/edward…

The internet is going through a shift. There used to be a thing called a “follower.” It allowed publishers, creators, and anyone online to build a community around their work. But this piece of foundational architecture for human creativity and communication – the “follow” – has been threatened. In this talk, I put this internet-wide shift in historical context from the perspective of a creator, outline where the web will go in the future, and offer thoughts on what to do about it all as a modern creator on the internet.