Tiny carbon tubes beam out stronger light by stealing a boost from internal vibrations—a discovery that could revolutionize solar power and advanced electronics.

We just celebrated May the 4th, and now this.

2025’s Volonaut Airbike – The Jet-Powered Flying Bike That’s Actually Real!

Forget sci-fi: this is the future, happening right now. The Volonaut Airbike isn’t just a concept or a CGI teaser; it’s a real, jet-powered flying bike that’s already tearing through the skies in 2025!

Unlike bulky drones with spinning blades, this beast lifts off with raw jet propulsion: no exposed rotors, no cockpit, and no nonsense. It’s built from carbon fiber and 3D-printed parts, which makes it ultra-light, roughly one-seventh the weight of a typical motorcycle. The rider becomes part of the machine, steering it by body movement while a smart onboard flight computer keeps everything stable.

Created by Tomasz Patan, the genius behind Jetson ONE, the Volonaut Airbike can reach speeds of up to 200 km/h (124 mph), soaring over forests, cliffs, and even deserts with mind-blowing agility.

In this in-depth interview, Joscha Bach shares his insights into AI: what it illuminates about consciousness, how it will develop, and what it means for humanity.

Is AI our only chance at achieving real understanding?

With a free trial, you can watch our full archive of Joscha Bach’s talks and debates at https://iai.tv/home/speakers/joscha-b…
Joscha Bach is a cognitive scientist, AI researcher, and philosopher whose research aims to bridge cognitive science and AI by studying how human intelligence and consciousness can be modelled computationally.

The Institute of Art and Ideas features videos and articles from cutting-edge thinkers discussing the ideas that are shaping the world, from metaphysics to string theory, technology to democracy, aesthetics to genetics. Subscribe today! https://iai.tv/subscribe?utm_source=Y…

For debates and talks: https://iai.tv

For articles: https://iai.tv/articles

For courses: https://iai.tv/iai-academy/courses

00:00 Introduction

00:08 What is Artificial General Intelligence, and how far away are we from creating it?

01:08 Do you consider AI humanlike now?

02:43 Why do you defend a computational perspective?

03:44 Is AI the method for the universe to understand itself?

04:26 How is AI transforming society now, and how will it transform society in the next few years?

05:20 Do you think we have the capacity to reconceive how our institutions will function in light of these changes?

06:17 How could AI help us solve the climate crisis, when our biggest problem is inaction?

08:24 Have we become less critical, as a species?

10:40 Would you agree that social media has been detrimental to our society?

12:58 How do you think AGI will be realised?

18:46 What are the differences between evolved systems and designed systems?

20:31 What did you think of the infamous open letter about AI safety?

24:24 How can we solve AI’s misalignment to human values?

25:43 Do you have hope for the future?

27:33 Do you think it’s possible to build a machine that understands?

30:32 Do you think that we are living in base reality?

Join cognitive scientist and AI researcher Joscha Bach in this exclusive interview on the limits, risks, and future of AI. From the potential of Artificial General Intelligence to the alignment problem and the fundamental ways AI learns differently from humans, Bach explores whether AI might one day grasp reality on a deeper level than we can. He also examines the systemic failures of institutions in tackling the climate crisis and the COVID-19 pandemic, arguing that the internet’s potential for collective intelligence remains largely untapped. Might AI help us overcome these challenges, or does it merely reflect our own limitations?

Interviewed by Darcy Bounsall.

PricewaterhouseCoopers (PwC) told 1,500 U.S. employees on Monday that their roles were eliminated with immediate effect—about 2 percent of its national headcount. In the company-wide e-mail obtained by industry blog Going Concern, leadership cited “historically low attrition” and a need to “align the firm for the future.” Publicly, a spokesperson framed the move as a […]

Good channel here.

The future of human longevity just got a $500 billion boost thanks to Trump’s bold new AI vision! In this video, we explore the recently announced Stargate AI Infrastructure Project, a staggering $500 billion initiative unveiled by President Donald Trump on January 21, 2025, alongside tech titans OpenAI, Oracle, and SoftBank. The project is designed to build colossal data centers and supercharge AI development. Could this ambitious plan also hold the key to curing aging? We dive into the cutting-edge science, the potential of AI to revolutionize healthcare, and how Stargate might reshape humanity’s fight against time. Buckle up for a wild ride into the future!

🔍 What You’ll Discover:

The Stargate Project: Trump’s massive AI infrastructure gamble.

How AI could unlock breakthroughs in aging research.

The players: OpenAI, Oracle, SoftBank, and their bold vision.

The big question: Can this tech triumph over mortality?

Join the LSN Patreon for exclusive access to news, tips, and a community of like-minded longevity enthusiasts: https://www.patreon.com/user?u=29506604

🔔 Like, subscribe, and hit the bell for more groundbreaking insights every week! Tell us in the comments: Do you think Stargate could be the key to curing aging? Let’s debate it!

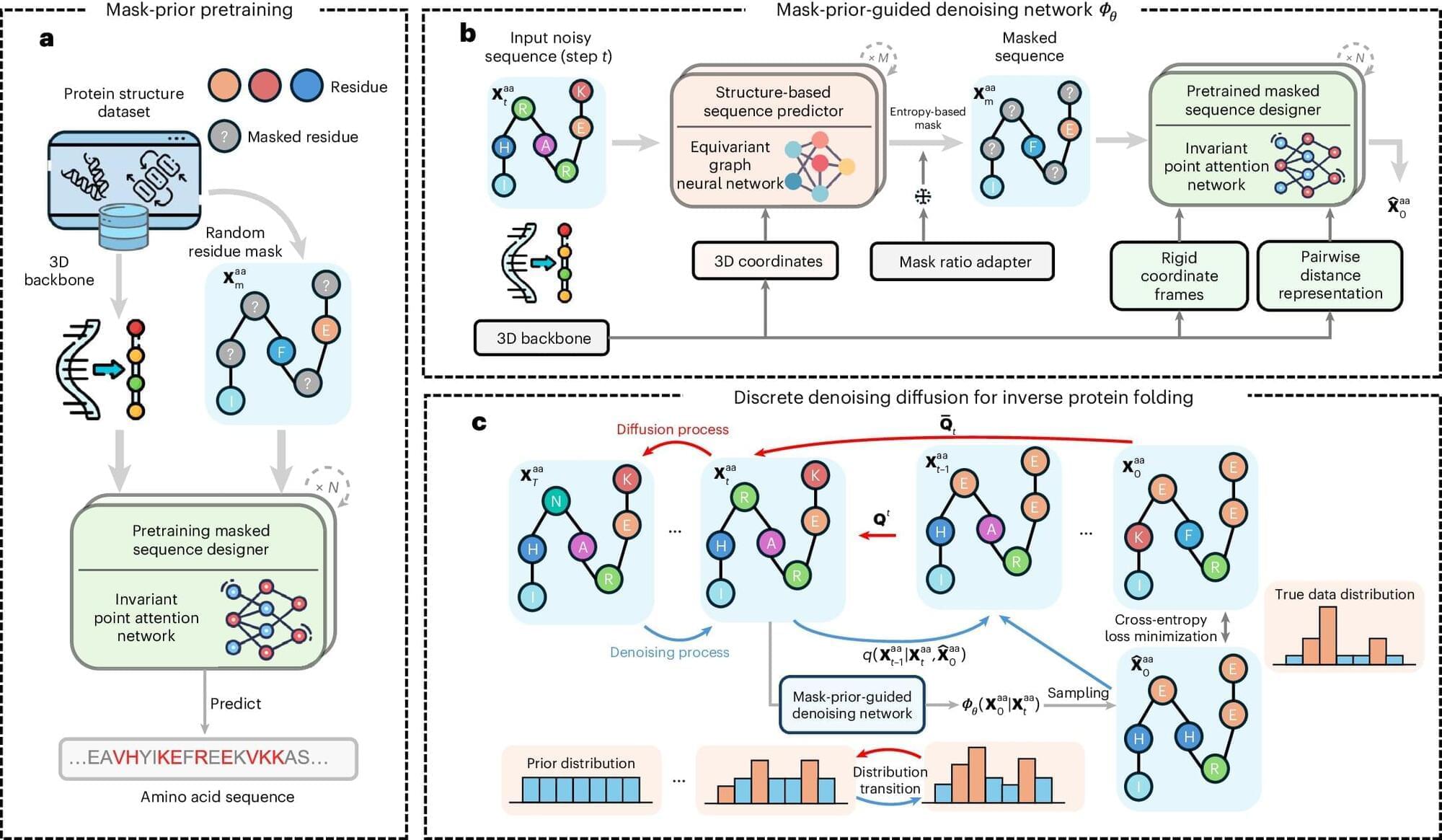

An AI approach developed by researchers from the University of Sheffield and AstraZeneca could make it easier to design proteins needed for new treatments.

In a study published in the journal Nature Machine Intelligence, Sheffield computer scientists, working with AstraZeneca and the University of Southampton, have developed a new machine learning framework that shows potential to be more accurate at inverse protein folding than existing state-of-the-art methods.

Inverse protein folding is a critical step in creating novel proteins. It is the task of identifying sequences of amino acids (the building blocks of proteins) that fold into a desired 3D structure and enable the protein to perform specific functions.

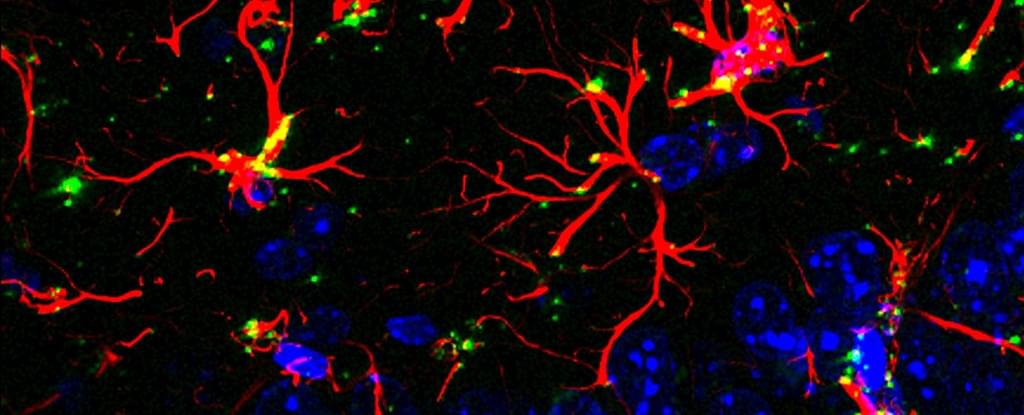

Scientists are looking at ways to tackle Alzheimer’s and dementia from all kinds of angles, and a new study has identified the molecule hevin (or SPARCL-1) as a potential way of preventing cognitive decline.

Hevin is a protein naturally produced in the brain by cells called astrocytes. These support-worker cells look after the connections, or synapses, between neurons, and it’s thought that hevin plays a role in this essential work.

In this new study, researchers from the Federal University of Rio de Janeiro (UFRJ) and the University of São Paulo in Brazil boosted hevin production in the brains of both healthy mice and those with an Alzheimer’s-like disease.

You stayed up too late scrolling through your phone, answering emails or watching just one more episode. The next morning, you feel groggy and irritable. That sugary pastry or greasy breakfast sandwich suddenly looks more appealing than your usual yogurt and berries. By the afternoon, chips or candy from the break room call your name. This isn’t just about willpower. Your brain, short on rest, is nudging you toward quick, high-calorie fixes.

There is a reason why this cycle repeats itself so predictably. Research shows that insufficient sleep disrupts hunger signals, weakens self-control, impairs glucose metabolism and increases your risk of weight gain. These changes can occur rapidly, even after a single night of poor sleep, and can become more harmful over time if left unaddressed.

I am a neurologist specializing in sleep science and its impact on health.

Cancer plasticity allows tumor cells to change their identity, evade therapies, and adapt to environmental pressures, contributing to treatment resistance and metastasis. New research is targeting this adaptability through epigenetic drugs, immune checkpoint inhibitors, and strategies to limit phenotypic switching.